Source: Bewater Community

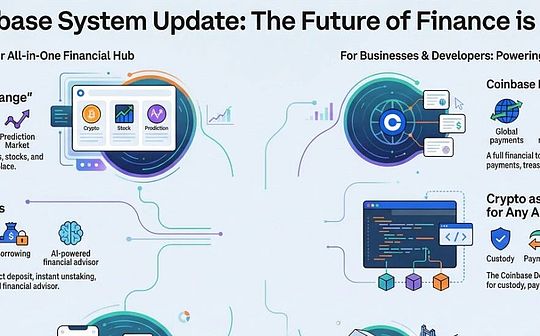

On June 14, the AO Foundation officially launched the token economics of AO, the decentralized supercomputer. On the evening of June 12, on the occasion of AO's token economics release and NEAR's release of its DA solution, we invited Arweave and AO founder Sam Williams and NEAR Protocol co-founder Illia Polosukhin for an in-depth conversation on the convergence of AI and blockchain. Sam explained AO's underlying architecture in detail: it is based on the actor-oriented paradigm and the Erlang model, and aims to create a decentralized computing network that scales without limit and supports interaction between heterogeneous processes. Sam also looked ahead to AO's potential applications in DeFi: by introducing trustworthy AI strategies, AO is expected to enable true “agent finance”. Illia shared NEAR Protocol's latest progress on scalability and AI integration, including the introduction of chain abstraction and chain signatures, as well as peer-to-peer payments and an AI inference router now in development. The two guests also shared their views on the current priorities and research focuses of their respective ecosystems, and on the innovative projects they are optimistic about. Thanks to @BlockBeatsasia and @0xlogicrw for compiling and editing the conversation and bringing the Chinese version to the community so quickly.

How Illia and Sam got involved in AI and crypto

Lulu: First, please briefly introduce yourselves and tell us how you got involved in both AI and blockchain.

Illia: My background is in machine learning and artificial intelligence. I worked in that field for about 10 years before moving into crypto. I am best known for the paper “Attention Is All You Need”, which introduced the Transformer model, now widely used across modern machine learning, AI, and deep learning. Before that, I also worked on many projects, including TensorFlow, the machine learning framework that Google open-sourced in 2014-2015. I also did research on question answering systems, machine translation, and so on, and applied some of that research in Google.com and other Google products.

Later, Alex and I co-founded NEAR.ai, originally an AI company dedicated to teaching machines to program. We believed that in the future people would be able to communicate with computers in natural language, and the computers would do the programming automatically. In 2017 this sounded like science fiction, but we did a lot of research on it. We collected additional training data through crowdsourcing: students in China, Eastern Europe, and elsewhere completed small tasks for us, such as writing code and writing code comments. But we ran into problems paying them; for example, PayPal could not transfer money to users in China.

Some people suggested using Bitcoin, but Bitcoin's transaction fees were already very high at the time. So we started digging deeper. We had a background in scalability: at Google, everything has to be built for scale. My co-founder Alex had built a sharded database company serving Fortune 500 companies. The state of blockchain technology at the time was strange: almost everything was effectively running on a single machine and was limited by that machine's capacity.

So we set out to build a new protocol, which became NEAR Protocol. It is a sharded layer 1 protocol focused on scalability, ease of use, and developer convenience. We launched mainnet in 2020 and have been growing the ecosystem ever since. In 2022 Alex joined OpenAI, and in 2023 he went on to a company focused on foundation models. Recently we announced that he has returned to lead the NEAR.ai team and continue the work of teaching machines to program that we began in 2017.

Lulu: That is a wonderful story. I didn't know that NEAR started out as an AI company and has now returned its focus to AI. Sam, please introduce yourself and your project.

Sam: We got into this field about seven years ago, after I had been following Bitcoin for a long time. We found an exciting but underexplored idea: you can store data on a network where it is replicated around the world, with no single centralized point of failure. That inspired us to create an archive that is never forgotten and is replicated in many places, so that no single organization, or even a government, can censor its contents.

So our mission became scaling Bitcoin, or rather scaling Bitcoin-style on-chain data storage to arbitrary size, so that we could create a knowledge base for humanity, store all of history, and form an immutable, trustless historical log, so that we never forget the important context of how we got, step by step, to where we are today.

We started this work seven years ago, and the network has now been live for more than six years. Along the way we realized that permanent on-chain storage can provide much more than the functionality we first imagined. Initially our idea was to store newspaper articles. But shortly after mainnet launch we realized that if you can store all this content around the world, you have actually planted the seed of a permanent decentralized web. Beyond that, around 2020 we realized that if you have a deterministic virtual machine and a permanent, ordered log of interactions with a program, you can basically create a smart contract system.

We first tried this system in 2020, under the name SmartWeave. We borrowed the concept of lazy evaluation from computer science, a concept popularized mainly by the programming language Haskell. We knew it had long been used in production environments, but it had not really been applied to blockchains. Usually, in the blockchain world, smart contracts are executed at the moment a message is written. Our view was that a blockchain is really an append-only data structure with rules for including new information, and that code does not have to be executed at the same time the data is written. Since we had an arbitrarily scalable data log, this was a natural way of thinking for us, but it was relatively rare at the time. The only other team thinking this way was the one now called Celestia (then called LazyLedger).
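To make the lazy evaluation idea concrete, here is a minimal sketch in Python. It is not SmartWeave's actual code: the `LazyContract` class, the message fields, and the token rules are all hypothetical, chosen only to show that writing appends to a log and that state is computed later, by replaying the log.

```python
from dataclasses import dataclass, field


@dataclass
class LazyContract:
    """Hypothetical token contract whose state is derived from a log on demand."""

    log: list = field(default_factory=list)  # append-only list of messages

    def write(self, message: dict) -> None:
        # Writing only appends to the log; no contract code runs here.
        self.log.append(message)

    def read_state(self) -> dict:
        # State is evaluated lazily by replaying every message in order.
        balances: dict = {}
        for msg in self.log:
            if msg["action"] == "mint":
                balances[msg["to"]] = balances.get(msg["to"], 0) + msg["qty"]
            elif msg["action"] == "transfer":
                # Invalid transfers stay in the log but are skipped on replay.
                if balances.get(msg["from"], 0) >= msg["qty"]:
                    balances[msg["from"]] -= msg["qty"]
                    balances[msg["to"]] = balances.get(msg["to"], 0) + msg["qty"]
        return balances


contract = LazyContract()
contract.write({"action": "mint", "to": "alice", "qty": 10})
contract.write({"action": "transfer", "from": "alice", "to": "bob", "qty": 4})
contract.write({"action": "transfer", "from": "bob", "to": "carol", "qty": 99})
state = contract.read_state()
print(state)
```

Because the replay is deterministic, anyone holding the same log arrives at the same state, which is what lets the writing of data be separated from the execution of code.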

This led to a Cambrian explosion of computing systems on Arweave. There were about three or four major projects, some of which developed their own distinctive communities, feature sets, and security trade-offs. In the process we found that it is not enough to use the base layer's data availability to store these logs; you also need a mechanism for delegating data availability guarantees. Concretely, you can submit data to a bundling node or to someone else acting on your behalf (now called a scheduler unit). They upload the data to the Arweave network and give you an economically incentivized guarantee that the data will be written to the network. Once that mechanism is in place, you have a system that can scale horizontally. In essence, you have a series of processes, which you can think of as rollups on Ethereum, that share the same data set and can communicate with each other.

The name AO (Actor-Oriented) comes from a paradigm in computer science. We built a system that combines all these components: a native message-passing system, data availability providers, and a decentralized computing network. The lazy evaluation component thus becomes a distributed set, and anyone can run a node to resolve contract state. Put together, you get a decentralized supercomputer. At its core is an arbitrarily scalable log that records all the messages involved in computation. I find this particularly interesting because you can compute in parallel, and your process does not affect the scalability or utilization of my process, which means you can do computation of arbitrary depth, such as running large-scale AI workloads inside the network. Right now our ecosystem is pushing this idea hard, exploring what happens when you introduce market intelligence at the base layer of a smart contract system. That way you have intelligent agents working for you, and they are trustworthy and verifiable, just like the underlying smart contracts.

AO's underlying concepts and technical architecture

Lulu: As we know, NEAR Protocol and Arweave are both now driving the convergence of AI and crypto. I'd like to go deeper. Since Sam has already touched on some of AO's underlying concepts and architecture, I'll start with AO and turn to AI later. The concepts you described give me the impression of agents working and coordinating on their own, with AI agents or applications running on top of AO. Can you explain in detail how parallel execution, or independent agents, work inside the AO infrastructure? Is the metaphor of a decentralized Erlang accurate?

Sam: Before I start, I should mention that I built an operating system based on Erlang during my PhD. It ran on what we call bare metal. The exciting thing about Erlang is that it is a simple, expressive environment in which every piece of computation is expected to run in parallel, rather than a shared-state model, which is what has become the norm in crypto.

The elegance of it is that it maps beautifully onto the real world. Just as we are working together right now, we are actually independent actors, each computing in our own head, then listening, thinking, and talking. Erlang's agent-oriented, or actor-oriented, architecture is truly excellent. At the AO summit I spoke right after one of Erlang's founders, who described how they came up with this architecture around 1989. At the time they were not even aware of the term “actor-oriented”. But it is a beautiful concept, and many people arrive at the same idea because it makes sense.

For me, if you want to build truly scalable systems, you have to have them pass messages rather than share state. Sharing state is what happens in Ethereum, Solana, and almost every other blockchain; NEAR is actually an exception. NEAR has shards, so they do not share a global state; each has its own local state.
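The difference between passing messages and sharing state can be shown with a small sketch. This is a single-threaded toy in Python, not AO or Erlang code; the `Actor` and `System` classes and the ping/pong message shape are hypothetical. Each actor keeps private state and can influence another actor only by placing a message in its mailbox.

```python
from collections import deque


class Actor:
    """An actor owns private state and a mailbox; nothing else touches either."""

    def __init__(self, name):
        self.name = name
        self.mailbox = deque()
        self.received = 0  # private state, changed only by this actor

    def handle(self, msg, system):
        self.received += 1
        if msg["type"] == "ping":
            # Reply by sending a message, never by writing the sender's state.
            system.send(msg["reply_to"], {"type": "pong", "from": self.name})


class System:
    """Hypothetical single-threaded scheduler that delivers queued messages."""

    def __init__(self):
        self.actors = {}

    def spawn(self, name):
        self.actors[name] = Actor(name)
        return self.actors[name]

    def send(self, to, msg):
        self.actors[to].mailbox.append(msg)

    def run(self):
        # Keep delivering until every mailbox is empty.
        delivered = True
        while delivered:
            delivered = False
            for actor in list(self.actors.values()):
                while actor.mailbox:
                    actor.handle(actor.mailbox.popleft(), self)
                    delivered = True


system = System()
alice = system.spawn("alice")
bob = system.spawn("bob")
system.send("bob", {"type": "ping", "reply_to": "alice"})
system.run()
print(alice.received, bob.received)
```

Since no actor reads or writes another actor's fields, the actors could in principle be moved to separate machines without changing their logic, which is the property the message-passing argument relies on.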

When we built AO, the goal was to combine these concepts. We wanted processes that execute in parallel and can do computation at arbitrary scale, with the interaction between those processes decoupled from their execution environment, forming in the end a decentralized version of Erlang. For those not familiar with distributed systems, the easiest way to understand it is to picture a decentralized supercomputer. With AO, you can start a terminal inside the system. As a developer, the most natural way to use it is to start your own local process and then talk to it, just as you would with a local command-line interface. As we move toward consumers, people are building UIs and everything else you would expect. Fundamentally, it lets you run personal computation in this decentralized cloud of computing devices and interact using a unified message format. In designing this part we looked to the TCP/IP protocol that runs the internet, trying to create something that can be seen as a TCP/IP for computation itself.

The AO data protocol does not mandate any specific type of virtual machine. You can use any virtual machine you like; we have implemented 32-bit and 64-bit WASM versions. Others in the ecosystem have implemented the EVM. If you have this shared messaging layer (we use Arweave), you can let all these highly heterogeneous processes interact in a shared environment, like an internet of computation. Once that infrastructure is in place, the natural next step is to explore what can be done with intelligent, verifiable, trustworthy computation. The obvious application is AI or smart contracts, letting agents make intelligent decisions in markets, possibly against each other, and possibly against humans on behalf of humans. When we look at the global financial system, about 83% of Nasdaq's trades are executed by bots. That is how the world works.

In the past, we could not bring the intelligent part on-chain and make it trustworthy. But in the Arweave ecosystem there is another, parallel workstream, which we call RAIL, the Responsible AI Ledger. It is essentially a way to create records of the inputs and outputs of different models and store those records in an open and transparent way, so that you can query it and ask, “Hey, did this data I'm looking at come from an AI model?” If we can get this adopted, we think it can solve a fundamental problem we see today. For example, someone sends you a news article from a website you do not trust, with what appears to be a picture or video of a politician doing something stupid. Is it real? RAIL provides a ledger that many competing companies can use in a transparent and neutral way to store records of their outputs, just as they use the internet. And they can do so at extremely low cost.
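The query Sam describes can be pictured as a lookup in a shared record of model outputs. The sketch below is only an illustration under strong assumptions: the conversation does not say how RAIL identifies content, so matching on a SHA-256 hash of the exact bytes, the `OutputLedger` class, and the model names are all hypothetical.

```python
import hashlib


class OutputLedger:
    """Hypothetical in-memory stand-in for a public ledger of model outputs."""

    def __init__(self):
        self._records = {}  # content hash -> name of the model that produced it

    def record(self, model: str, output: bytes) -> str:
        # A provider registers the hash of an output, not the output itself.
        digest = hashlib.sha256(output).hexdigest()
        self._records.setdefault(digest, model)
        return digest

    def lookup(self, content: bytes):
        # Anyone can ask whether these exact bytes were registered by a model.
        return self._records.get(hashlib.sha256(content).hexdigest())


ledger = OutputLedger()
ledger.record("image-model-a", b"generated image bytes")

print(ledger.lookup(b"generated image bytes"))
print(ledger.lookup(b"photo taken by a camera"))
```

An exact-hash match fails as soon as an image is resized or re-encoded, so a real system would need a more robust way of identifying content; the sketch shows only the record-then-query flow.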

Illia’s view on blockchain scalability

Lulu: I'm curious about Illia's view on the scalability of AO's approach or model. You worked on the Transformer model, which was designed to remove the bottleneck of sequential processing. What is NEAR's approach to scalability? In a previous AMA you mentioned that you are exploring a direction in which multiple small models form a system, which may be one of the solutions.

Illia: Scalability shows up in many different ways in blockchain, and we can pick up from what Sam said. What you see now is that a single large language model (LLM) has some limitations in reasoning. You need to prompt it in a specific way for it to keep running for a while. Over time, models will keep improving and become more general. But in any case, you are tuning these models (which you can think of as raw intelligence) in some way to perform specific functions and tasks and to reason better in a specific context.

If you want them to do more general work and processes, you need multiple models running in different contexts and handling different aspects of the task. To give a very concrete example, we are currently developing an end-to-end process. You can say, “Hey, I want to build this application,” and the final output is a fully built application, including correct, formally verified smart contracts and a fully tested user experience. In real life no single person usually builds all of these things, and the same idea applies here. You actually want the AI to play different roles at different times, right?

First you need an AI agent in the role of product manager, which actually gathers requirements and figures out exactly what you want, what the trade-offs are, and what the user stories and experience are. Then there might be an AI designer responsible for turning those designs into a front end. Then there might be an architect responsible for the architecture of the back end and middleware. Then an AI developer writes the code and makes sure the smart contracts and all the front-end work are formally verified. Finally, there might be an AI tester that makes sure everything runs properly, testing through a browser. Together they form a group of AI agents that may use the same model but are fine-tuned for specific functions. Each plays its role in the process independently, using prompts, structures, tools, and the observed environment to interact and build a complete workflow.

This is what Sam was describing: many different agents that do their work asynchronously, observe the environment, and figure out what to do. So you really need a framework, and you need to keep improving these agents. From the user's perspective, you send a request and interact with different agents, but they get the work done as if they were a single system. Under the hood, they may actually pay each other to exchange information, or agents belonging to different owners may interact with each other to actually get something done. It is a new version of the API, smarter and more natural. All of this requires a great deal of framework structure, plus payment and settlement systems.

There is a new term for this, AI commerce, in which all these agents interact with each other to complete tasks. That is the system we are all moving toward. If you think about the scalability of such a system, there are several problems to solve. As I mentioned, NEAR is designed to support billions of users, including humans, AI agents, and even cats, as long as they can transact. Every NEAR account or smart contract runs in parallel, which allows continued scaling and transacting. At a lower level, you probably do not want to send a transaction every time you call an AI agent or an API; however cheap NEAR is, that would be unreasonable. So we are developing a peer-to-peer protocol that lets agent nodes and clients (human or AI) connect to each other and pay for API calls, data retrieval, and so on, with cryptoeconomic rules ensuring that they respond or else lose part of their collateral.

This is a new system that allows scaling beyond NEAR and provides micropayments. We denominate them in yoctoNEAR, which is 10^-24 NEAR. In this way you can actually exchange messages at the network level with payments attached, so that all operations and interactions can be settled through this payment system. This solves a fundamental problem in blockchain: we have no payment system for bandwidth and latency, and in practice there are many free-rider problems. This is a very interesting aspect of scalability, not limited to the scalability of the blockchain itself but applicable to a future world that may have billions of agents. In that world, multiple agents may be running at once even on your own device, carrying out all kinds of tasks in the background.
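Since 1 NEAR equals 10^24 yoctoNEAR, amounts this small are best handled as integers rather than floating-point numbers. The helper below is a small sketch of that arithmetic in Python; the function names and the per-call price are hypothetical, and only non-negative decimal strings are handled.

```python
YOCTO_PER_NEAR = 10**24  # 1 NEAR = 10^24 yoctoNEAR


def near_to_yocto(amount: str) -> int:
    """Convert a non-negative decimal string in NEAR to integer yoctoNEAR."""
    whole, _, frac = amount.partition(".")
    if len(frac) > 24:
        raise ValueError("more than 24 decimal places")
    # Pad the fractional part to exactly 24 digits, then combine as integers.
    return int(whole or "0") * YOCTO_PER_NEAR + int(frac.ljust(24, "0"))


def yocto_to_near(yocto: int) -> str:
    """Format integer yoctoNEAR as a decimal string in NEAR."""
    whole, frac = divmod(yocto, YOCTO_PER_NEAR)
    return f"{whole}.{frac:024d}"


# Hypothetical micropayment: one million calls at 5,000 yoctoNEAR each.
price_per_call = 5_000
total = price_per_call * 1_000_000
print(total, "yoctoNEAR =", yocto_to_near(total), "NEAR")
```

Integer arithmetic keeps every payment exact, which matters when a single call costs a vanishingly small fraction of one token.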

AO's application in DeFi scenarios: agent finance

Lulu: That use case is very interesting. I believe AI payments usually involve high-frequency payments and complex strategies, needs that have not yet been met because of performance limits. So I look forward to seeing how they can be met with better scalability options. At our hackathon, Sam and the team mentioned that AO is also exploring the use of new AI infrastructure to support DeFi use cases. Sam, can you explain in detail how your infrastructure applies to new DeFi scenarios?

Sam: We call it agent finance. It refers to the two sides of markets as we see them. DeFi did very well in its first phase, decentralizing various economic primitives and bringing them on-chain so that users can use them without trusting any intermediary. But when we think about markets, we think of numbers going up and down and of the intelligence that drives those decisions. When you can bring that intelligence itself on-chain, you get a trustless financial instrument, such as a fund.

A simple example: suppose we want to build a hedge fund that trades meme coins. Our strategy is to buy Trump coin when we see Trump mentioned and to buy Biden coin when we see Biden mentioned. In AO, you can use an oracle service like 0rbit to fetch the full contents of web pages, such as the Wall Street Journal or the New York Times, and feed them to your agent, which can then count how many times each name is mentioned. You can also run sentiment analysis to understand market trends. Your agent then buys and sells these assets based on that information.
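The mention-counting strategy can be sketched in a few lines. This Python example is illustrative only: the page text is passed in directly rather than fetched through an oracle such as 0rbit, and the function names and coin tickers are hypothetical.

```python
import re
from collections import Counter


def count_mentions(text: str, names) -> dict:
    """Count whole-word, case-insensitive mentions of each name in the text."""
    words = Counter(re.findall(r"[a-z]+", text.lower()))
    return {name: words[name.lower()] for name in names}


def pick_coin(text: str, coin_for_name: dict):
    """Return the coin tied to the most-mentioned name, or None if none appear."""
    mentions = count_mentions(text, coin_for_name)
    top = max(mentions, key=mentions.get)
    return coin_for_name[top] if mentions[top] > 0 else None


# Hypothetical mapping from a name to the token the agent would buy.
coins = {"Trump": "TRUMP_COIN", "Biden": "BIDEN_COIN"}
article = "Trump spoke today. Biden replied, and Trump answered."

print(count_mentions(article, coins))
print(pick_coin(article, coins))
```

A real agent would add the sentiment analysis Sam mentions and some position sizing; counting words is only the simplest possible signal.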

Interestingly, we can make the agent's execution itself trustless. You then have a hedge fund that can execute strategies, and you can put money into it without having to trust a fund manager. This is the other side of finance, which the DeFi world has not really touched: making intelligent decisions and then acting on them. If these decision-making processes can be made trustworthy, the whole system can be unified into what looks like a truly decentralized economy, not just a settlement layer for primitives involved in different economic games.

We think this is a huge opportunity, and some people in the ecosystem have already started building these components. One team has created a trustless portfolio manager that buys and sells assets according to the proportions you want. For example, you might want 50% in Arweave tokens and 50% in stablecoins. When the prices of these assets change, it automatically executes trades. There is another interesting concept behind this: a feature in AO that we call cron messages. It means processes can wake themselves up and decide to do something autonomously in their environment. You can set your hedge fund smart contract to wake up every five seconds or every five minutes, fetch data from the network, process it, and act in the environment. That makes it fully autonomous, because it can interact with its environment; in a sense, it is “alive”.
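The rebalancing step of such a portfolio manager comes down to a small calculation. The sketch below is a hypothetical Python illustration, not the code of the team Sam mentions: the function name, the asset symbols, and the prices are assumptions, and on AO the function would be invoked by a cron message rather than called directly.

```python
def rebalance_orders(holdings: dict, prices: dict, targets: dict) -> dict:
    """Return the value to buy (+) or sell (-) per asset to hit target weights."""
    values = {asset: holdings[asset] * prices[asset] for asset in holdings}
    total = sum(values.values())
    # Desired value per asset is its target weight times the portfolio total.
    return {asset: targets[asset] * total - values[asset] for asset in holdings}


# Hypothetical portfolio: 10 AR at 20.0 each and 100 units of a stablecoin.
holdings = {"AR": 10, "USD": 100}
prices = {"AR": 20.0, "USD": 1.0}
targets = {"AR": 0.5, "USD": 0.5}

# One "wake-up": compute the trades that restore the 50/50 split.
orders = rebalance_orders(holdings, prices, targets)
print(orders)
```

Here the portfolio is worth 300, so each side should hold 150: the agent sells 50 worth of AR and buys 50 worth of the stablecoin. Running this on every scheduled wake-up is what keeps the proportions in line as prices move.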

Smart contracts on Ethereum need external triggers, and people have built a lot of infrastructure to work around that, but it is not smooth. In AO, this capability is built in. So you will see markets in which agents constantly compete with each other on-chain. This will drive network usage in a way never before seen in crypto.

NEAR.ai's overall strategy and development focus

Lulu: NEAR.ai is pushing some forward-looking use cases. Can you tell us more about the other layers, the overall strategy, and some of the key points?

Illia: There is indeed a lot happening at every level, with various products and projects that can be integrated. It all obviously starts with the NEAR blockchain itself. Many projects need a scalable blockchain and some form of authentication, payment, and coordination. NEAR smart contracts are written in Rust and JavaScript, which is convenient for many use cases. One interesting thing is that a recent NEAR protocol upgrade introduced so-called yield/resume precompiles. These precompiles let a smart contract pause execution, wait for an external event, whether that is another smart contract or AI inference, and then resume execution. This is very useful for smart contracts that need input from an LLM (such as ChatGPT) or from verifiable inference.
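The pause-wait-resume pattern Illia describes resembles how a generator works in ordinary programming. The sketch below is an analogy in Python only; it does not use NEAR's SDK or its actual yield/resume interface, and the `contract_call` and `drive` functions and the request format are hypothetical.

```python
def contract_call(prompt: str):
    """Hypothetical contract method that pauses until an external answer arrives."""
    # Pause here and hand a request to the outside world.
    answer = yield {"request": "inference", "prompt": prompt}
    # Execution resumes at this point once the external result is supplied.
    return f"stored: {answer}"


def drive(call, resolver):
    """Run a paused call to completion, resolving each request it yields."""
    try:
        request = next(call)
        while True:
            request = call.send(resolver(request))
    except StopIteration as done:
        return done.value


# Stand-in for an external inference service: it just upper-cases the prompt.
result = drive(contract_call("hello"), lambda req: req["prompt"].upper())
print(result)
```

The point of the analogy is that the contract's logic reads as one straight-line function even though an external event happens in the middle of it.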

We have also launched chain abstraction and chain signatures, which are among the unique features NEAR has introduced over the past six months. Any NEAR account can transact on other chains. This is very useful for building agents, AI inference, or other infrastructure, because you can now transact cross-chain through NEAR without worrying about transaction fees, tokens, RPCs, and other infrastructure. All of that is handled for you by the chain signature infrastructure. Ordinary users can use this feature too. There is a NEAR-based Hot Wallet on Telegram; in fact, its Base integration has just launched on mainnet, and about 140,000 users are using Base through this Telegram wallet.

Furthermore, we plan to develop a peer-to-peer network that will bring agents, AI inference nodes, and other storage nodes into a more provable communication protocol. This matters because the current network stack is very limited and has no native payment capability. Although we often say that blockchain is “internet money”, we have not actually solved the problem of sending money along with messages at the network level. We are solving this problem, which is very useful for all AI use cases and for Web3 applications more broadly.

In addition, we are developing a so-called AI inference router, which is essentially a place where you can plug in all the use cases, middleware, decentralized inference, and on-chain and off-chain data providers. The router can serve as a framework that truly interconnects all the projects being built in the NEAR ecosystem and then offers all of it to NEAR's user base. NEAR has more than 15 million monthly active users across different models and applications.

Some applications are exploring how to deploy models to user devices, so-called edge computing. This approach includes storing data locally and using the relevant protocols and SDKs to operate on it. From a privacy perspective this has great potential. In the future, many applications will run on the user's device, generating or precompiling the user experience and using only local models to avoid data leaks. On the developer side, we are doing a lot of research aimed at letting anyone easily build and publish applications on Web3, with formal verification on the back end. This will become an important topic, because LLMs are getting better and better at finding vulnerabilities in codebases.

In short, this is a complete technology stack: from the underlying blockchain infrastructure, to Web3 chain abstraction, to peer-to-peer connectivity, which is well suited to connecting off-chain and on-chain participants. Next come the AI inference routing hub and local data storage applications, which are particularly suited to cases that need access to private data without leaking it to the outside. Finally, on the developer side we bring together all the research results, with the goal of having future applications built by AI. In the medium to long term this will be a very important direction.

AO's priorities and research focus

Lulu: I'd like to ask Sam: what are AO's current priorities and research focuses?

Sam: One idea I am personally interested in is using the extension mechanism AO provides to build a deterministic CUDA subset, an abstract GPU driver. Normally GPU computation is not deterministic, so it cannot be used safely on AO, and no one would trust processes that depend on it. Solving this is theoretically possible; we only need to deal with non-determinism at the device level. There is already some interesting research, but it has to be handled in a way that is always 100% deterministic, which is essential for smart contract execution. We already have a plugin system that supports this kind of functionality as a driver inside AO. The framework is there; we just need to work out exactly how to implement it. There are many technical details, but basically it comes down to making job loading in the GPU environment predictable enough to be used for this kind of computation.

Another thing I am interested in is whether we can use this on-chain AI capability to do decentralized, or at least open and distributed, model training, especially fine-tuning. The basic idea is that if you can set a clear evaluation criterion for a task, you can train models against that criterion. Can we create a system in which people put up tokens to incentivize miners to compete to build better models? It might not attract a very diverse set of miners, but that does not matter, because it allows model training to happen in the open. Then, when miners upload their models, they can attach a Universal Data License tag specifying that anyone may use the models, but that commercial use requires paying a specific royalty. Royalties can be distributed to contributors through tokens. By combining all these elements, you can create an incentive mechanism for training open-source models.

I also think the RAIL initiative I mentioned earlier is very important. We have discussed the possibility of supporting it with some of the major AI providers and inference providers, and they have shown strong interest. If we can get them to actually implement it and write this data to the network, then users could right-click any image on the internet and query whether it was generated by Stable Diffusion or by DALL-E. These are all very interesting areas we are currently exploring.

Projects Illia and Sam are optimistic about

Lulu: Please each nominate an AI or crypto project you have liked recently; it can be any project.

Illia: I'm going to cheat a little. We hold AI Office Hours every week and invite projects to join; recently we had Masa and Compute Labs. Both are great projects, and I'll use Compute Labs as the example. Compute Labs essentially turns real computing resources (such as GPUs and other hardware) into real assets that people can participate in economically, so that users can earn returns from these devices. Compute marketplaces are hot in crypto right now, and they look like a natural place for crypto to drive a market. The problem is that these marketplaces lack moats and network effects, which leads to fierce competition and margin compression. So a compute marketplace is only a complement to other business models. Compute Labs offers a very crypto-native business model: capital formation and asset decentralization. It opens up participation in something that would normally require building a data center. The compute marketplace is only one part of it; the main purpose is to provide access to computing resources. The model also fits the broader decentralized AI ecosystem: by providing the underlying computing resources, it gives a wider group of investors the opportunity to take part in innovation.

Sam: There are a lot of great projects in the AO ecosystem, and I don't want to favor any of them, but I think the underlying infrastructure that Autonomous Finance is building is what makes “agent finance” possible. It is very cool, and they are really at the forefront here. I also want to thank the broader open-source AI community, especially Meta for open-sourcing the Llama models, which has pushed many others to open-source their models too. Without this trend, after OpenAI became “ClosedAI” following GPT-2, we might have fallen into darkness, especially in crypto, because we would have had no access to these models. Everyone would only have been able to rent closed-source models from one or two major providers. That did not happen, which is great. Ironic as it is, I still want to give credit to this king of Web2.