Source: AI style

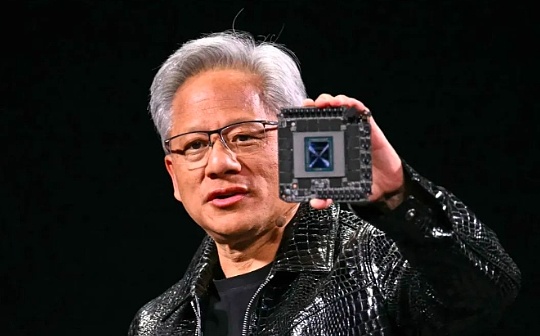

Nvidia CEO Jensen Huang said that the performance of the company’s AI chips is improving faster than Moore’s Law, the principle that guided the development of computing technology for decades.

“Our systems are progressing far faster than Moore’s Law,” Huang said in an interview the morning after delivering a keynote to a crowd of 10,000 at CES in Las Vegas.

Moore’s Law was proposed in 1965 by Intel co-founder Gordon Moore. It predicted that the number of transistors on a chip would roughly double every year, effectively doubling chip performance. The prediction largely held over the following decades, driving rapid gains in computing power and steep declines in cost.

Although Moore’s Law has slowed in recent years, Huang said Nvidia’s AI chips are advancing at a faster pace. The company says its latest data center superchip is more than 30 times faster at AI inference than the previous generation.

Huang explained that by innovating simultaneously across the architecture, chips, systems, software libraries, and algorithms, Nvidia can break through the limits of Moore’s Law.

Huang made this bold claim at a time when many are questioning whether AI progress has stalled. Leading AI labs such as Google, OpenAI, and Anthropic all use Nvidia’s AI chips to train and run their models, so improvements in chip performance are likely to translate into further gains in AI capabilities.

This is not the first time Huang has claimed that Nvidia is outpacing Moore’s Law. In a podcast in November, he said the AI field is progressing at a “hyper Moore’s Law” pace.

He rejected the idea that AI progress is slowing, pointing out that there are currently three AI scaling laws at work: pre-training (learning patterns from massive datasets), post-training (fine-tuning with methods such as human feedback), and test-time compute (giving a model more time to “think” when it answers).

Huang said that just as Moore’s Law advanced computing technology by driving down the cost of computation, improving AI inference performance will drive down the cost of using AI.

While Nvidia’s H100 was the chip of choice for tech companies training AI models, some have begun to question whether Nvidia’s expensive chips can keep their edge as those companies shift their focus to inference.

AI models that rely on test-time compute are currently very expensive to run. OpenAI’s o3 model, for example, achieved human-level performance on a test of general intelligence, but at a cost of nearly $20 per task, while a ChatGPT Plus subscription costs $20 for an entire month.

In Monday’s keynote, Huang presented the company’s latest data center superchip, the GB200 NVL72, which delivers 30 to 40 times the AI inference performance of the H100, Nvidia’s previous best-selling chip. He said this performance leap will lower the cost of running models like OpenAI’s o3 that require large amounts of inference compute.

Huang emphasized that Nvidia’s focus is on improving chip performance, because in the long run, higher performance means lower prices.

He said that increasing computing power is the direct solution to the performance and cost problems of test-time compute. In the long run, he added, AI reasoning models can also generate better data for both the pre-training and post-training stages.

The price of AI models has indeed fallen sharply over the past year, thanks to computing breakthroughs from hardware companies such as Nvidia. Although OpenAI’s latest reasoning model is expensive, Huang expects this downward price trend to continue.

He also said that Nvidia’s AI chips are 1,000 times more powerful than those it made 10 years ago, a pace far beyond that of Moore’s Law, and that this rapid progress shows no sign of stopping.