Why AI Needs to be Open

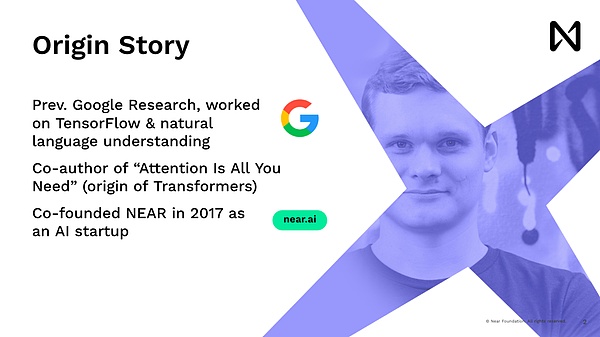

Let's discuss why artificial intelligence needs to be open. My background is in machine learning, and I have worked in various machine learning roles for about ten years. Before getting into crypto and founding NEAR, I worked at Google on natural language understanding. There we developed the Transformer, the architecture that drives most of modern artificial intelligence. After leaving Google, I started a machine learning company to teach machines to program, which would change how we interact with computers. But in 2017 and 2018 it was too early: the computing power and data needed to do this did not exist yet.

What we did at the time was recruit people from all over the world, mostly students, to label data for us. They were in China, elsewhere in Asia, and Eastern Europe, and many of them did not have bank accounts. Sending money from the United States to those countries is not easy, so we started looking at blockchain as a solution to our problem. We wanted to pay people around the world programmatically, no matter where they were. By the way, a current challenge in crypto, although NEAR now solves a lot of these problems, is that you usually need to buy some crypto before you can transact on the blockchain and start earning. That is backwards.

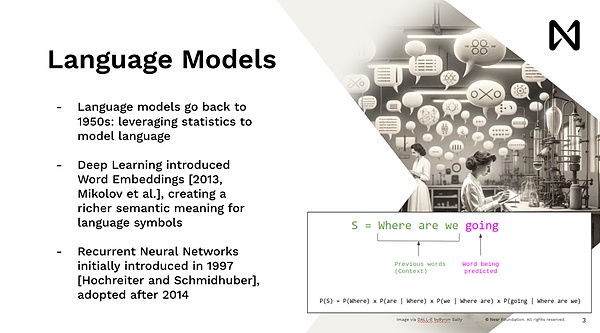

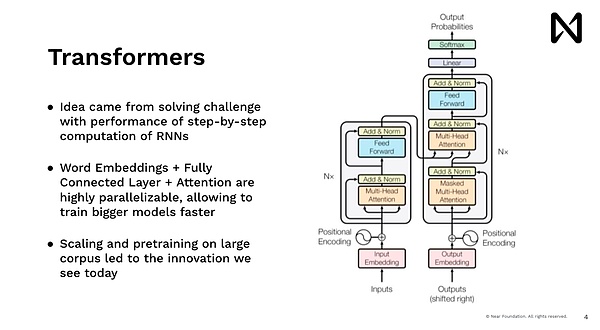

It is like a business saying: hey, first you need to buy some equity in the company before you can use it. This is one of the many problems we at NEAR are solving. Now let's go a little deeper into the artificial intelligence side. Language models are not new; they have existed since the 1950s. They are statistical tools widely used in natural language processing. Starting around 2013, as deep learning was rebooted, a new wave of innovation began. The key idea is that you can map words to multi-dimensional vectors, which turns them into a mathematical form. This works well with deep learning models, which are essentially a lot of matrix multiplications and activation functions.
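The idea of mapping words to vectors and passing them through matrix multiplications and activation functions can be sketched in a few lines of plain Python. This is only a toy illustration: the two-word vocabulary, the vector values, and the weight matrix are made up, and `layer`, `matvec`, and `relu` are hypothetical helper names rather than any library's API.

```python
# Toy vocabulary: each word maps to a small vector (values are made up).
embeddings = {
    "open": [1.0, 0.0, 2.0],
    "ai": [0.0, 1.0, 3.0],
}

# Weights of one layer: a 2x3 matrix (also made up for illustration).
weights = [
    [0.5, -1.0, 0.25],
    [1.0, 1.0, 1.0],
]


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    """Activation function: negative values become zero."""
    return [max(0.0, v) for v in vector]


def layer(word):
    """Look up the word's vector, then apply a matrix multiply and an activation."""
    return relu(matvec(weights, embeddings[word]))


print(layer("open"))  # [1.0, 3.0]
print(layer("ai"))    # [0.0, 4.0]
```

Real models stack many such layers and learn the vectors and weights from data instead of hard-coding them.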

This allowed us to start doing advanced deep learning and train models to do a lot of interesting things. Looking back, what we were using at the time were recurrent neural networks, which were largely modeled on how humans read: one word at a time. That is very slow. If you want to show an answer to a user on Google.com, nobody will wait five minutes for the model to read Wikipedia; they want the answer right away. The Transformer, the model that drives ChatGPT, Midjourney, and all the recent progress, came from exactly this need: we wanted a way to process data in parallel, run inference, and give answers immediately.

So the major innovation here is that every word, every token, every image patch is processed in parallel, taking advantage of GPUs and other accelerators with highly parallel computing power. That lets us run inference at scale. The same property lets us scale up training and process far more training data. After this we saw Dopamine, which did amazing work in a short time and achieved explosive growth in training. Trained on large amounts of text, these models began to achieve amazing results in reasoning and in understanding the world's languages.
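To make the "every token is processed in parallel" point concrete, here is a heavily simplified attention step in plain Python. It is a sketch under stated assumptions, not the Transformer as published: real models use learned query, key, and value projections, multiple heads, and scaled scores, all omitted here, and the token vectors are made up. What it shows is that the output for each position depends only on the inputs, never on another position's output, so positions can be computed in any order or all at once.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(i, tokens):
    """Output for position i: a weighted average of all token vectors."""
    weights = softmax([dot(tokens[i], other) for other in tokens])
    dim = len(tokens[0])
    return [sum(w * t[d] for w, t in zip(weights, tokens)) for d in range(dim)]


# Three made-up token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# No position waits on another, so evaluation order does not matter.
forward = [attend(i, tokens) for i in range(len(tokens))]
backward = [attend(i, tokens) for i in reversed(range(len(tokens)))][::-1]
print(forward == backward)  # True
```

A recurrent network, by contrast, needs the state from word n-1 before it can process word n, which is why it cannot be parallelized the same way.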

The direction now is accelerating AI innovation. Previously, AI was a tool used by data scientists and machine learning engineers, who would then build it into their products or explain the results to decision makers. Now we have AI communicating directly with people. You may not even know you are talking to a model, because it is hidden behind a product. So we went through a shift: from AI being used only by those who understood how it worked, to anyone being able to use it.

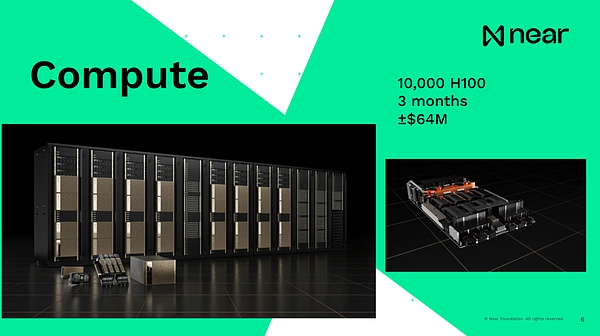

To give you some background: when we say we use GPUs to train models, these are not the kind of gaming GPUs we have in our desktops for playing video games.

Each machine is usually equipped with eight GPUs, connected to each other through the motherboard. The machines are stacked into racks of about 16 machines each. All of these racks are in turn connected to each other via dedicated network cables, so that information can move directly and quickly between GPUs. The information never passes through the CPU. In fact, you do not process anything on the CPU at all; all computation happens on the GPUs. So this is a supercomputer setup. Again, this is not the traditional "hey, this is a GPU" thing. A model at the scale of GPT-4 used 10,000 H100s for about three months of training, at a cost of $64 million. That gives you a sense of current costs and of how much it takes to train a modern model.
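The figures quoted above can be turned into a rough back-of-the-envelope estimate. The sketch below assumes that "about three months" means 90 days of continuous use; that number is an assumption for illustration, not something stated in the talk.

```python
import math

# Figures quoted in the talk.
gpus = 10_000
total_cost_usd = 64_000_000
gpus_per_machine = 8
machines_per_rack = 16

# Assumption: "about three months" is taken as 90 days of continuous use.
training_days = 90

gpus_per_rack = gpus_per_machine * machines_per_rack
racks_needed = math.ceil(gpus / gpus_per_rack)
gpu_hours = gpus * training_days * 24
cost_per_gpu_hour = total_cost_usd / gpu_hours

print(f"GPUs per rack: {gpus_per_rack}")                          # 128
print(f"Racks needed: {racks_needed}")                            # 79
print(f"GPU-hours: {gpu_hours:,}")                                # 21,600,000
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")     # $2.96
```

Under these assumptions the run occupies roughly 79 racks and works out to about $3 per GPU-hour.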

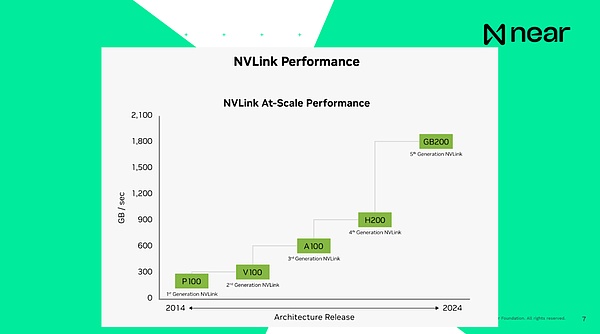

What matters is what I mean when I say these systems are connected to each other. The connection speed of the H100, which is now the previous generation, is 900 GB per second, while the connection between the CPU and RAM inside a computer is 200 GB per second, and that is all local to the machine. So sending data from one GPU to another in the same data center is faster than moving data inside your own computer, between components in the same box. The new generation connects at about 1.8 TB per second. From a developer's point of view, these are not individual computing units. This is a supercomputer with a huge amount of memory and compute, giving you extremely large-scale computing.
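To get a feel for these bandwidth numbers, the sketch below computes an idealized transfer time for the same payload over each link. The 80 GB payload is an arbitrary assumption chosen for illustration, and the calculation ignores latency and protocol overhead.

```python
# Bandwidth figures quoted in the talk, in GB per second (1.8 TB/s = 1800 GB/s).
bandwidth_gb_per_s = {
    "CPU to RAM (inside one computer)": 200,
    "GPU to GPU, H100 generation": 900,
    "GPU to GPU, newer generation": 1800,
}

# Assumption: an 80 GB payload, chosen only for illustration.
payload_gb = 80


def transfer_seconds(size_gb, gb_per_s):
    """Idealized transfer time, ignoring latency and protocol overhead."""
    return size_gb / gb_per_s


for link, speed in bandwidth_gb_per_s.items():
    milliseconds = transfer_seconds(payload_gb, speed) * 1000
    print(f"{link}: {milliseconds:.0f} ms")

# How much faster the H100-generation link is than CPU-to-RAM.
speedup = (
    bandwidth_gb_per_s["GPU to GPU, H100 generation"]
    / bandwidth_gb_per_s["CPU to RAM (inside one computer)"]
)
print(f"Speedup: {speedup}x")  # 4.5x
```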

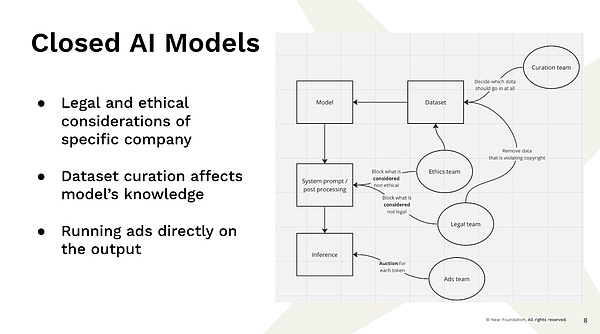

Now, this leads to the problem we face: these big companies have the resources and the ability to build these models, and they already offer them to us as a service, but we do not know how much goes on behind that service. Here is an example. You go to a completely centralized provider and enter a query. It turns out that several teams that are not software engineering teams decide what result you see. One team decides which data goes into the dataset.

For example, if you just crawl data from the internet, the statement that Barack Obama was born in Kenya appears about as often as the statement that he was born in Hawaii, because people like to speculate about controversy. So you have to decide what to train on. You have to filter out some information because you do not believe it is true. When individuals decide which data is used, those decisions are largely shaped by the people who make them. There is a legal team that decides what content cannot be shown because it is copyrighted or illegal. There is an "ethics team" that decides what is unethical and should not be shown.

So there is a lot of filtering and manipulation going on. These models are statistical models: they pick up whatever is in the data. If something is not in the data, they will not know the answer. If something is in the data, they will most likely treat it as fact. That is worrying when you get an answer from an AI. You are supposedly getting the answer from the model, but there is no guarantee of that. You do not know how the result was generated. A company could sell your specific session to the highest bidder to change the results. Imagine you ask which car you should buy, and Toyota decides the answer should be biased towards Toyota and pays the company 10 cents for it.
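A deliberately tiny frequency model illustrates the point that a statistical model only reflects its training data, and that whoever filters the data shapes the answer. This is a sketch, not how a language model is actually built: `train` and `answer` are hypothetical helpers, and the corpus is invented so that a wrong claim is repeated more often than the correct one.

```python
from collections import Counter


def train(corpus):
    """Count how often each answer appears for each question."""
    counts = {}
    for question, reply in corpus:
        counts.setdefault(question, Counter())[reply] += 1
    return counts


def answer(model, question):
    """Return the most frequent answer seen in training, or None if unseen."""
    if question not in model:
        return None
    return model[question].most_common(1)[0][0]


question = "capital of Australia"

# Invented "crawled" data: the wrong claim is repeated more than the right one.
crawled = [(question, "Sydney")] * 3 + [(question, "Canberra")] * 2

# Someone decides what to filter out before training.
filtered = [pair for pair in crawled if pair[1] != "Sydney"]

raw_model = train(crawled)
filtered_model = train(filtered)

print(answer(raw_model, question))               # Sydney
print(answer(filtered_model, question))          # Canberra
print(answer(raw_model, "capital of Atlantis"))  # None: not in the data
```

The filtering step is where human judgment enters, and from the outside a user cannot tell which version of the data the answer came from.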

So even if you treat these models as a knowledge base that should be neutral and represent the data, a lot happens before you get the result that biases it in very specific ways. This has already caused a lot of problems. Practically every week there is a new lawsuit involving big companies, the media, or the SEC; almost everyone is trying to sue everyone else, because these models bring so much uncertainty and power. And looking ahead, the problem is that big tech companies will always have an incentive to keep increasing revenue. If you are a public company, you have to report revenue and you have to keep growing.

Now suppose you have already captured your target market; say you already have 2 billion users, and there are not many new users left on the internet. You do not have many options other than maximizing average revenue per user. That means you either need to extract more value from users, who may not have much to give, or you need to change their behavior. Generative AI is very good at manipulating and changing user behavior, especially when people perceive it as an all-knowing intelligence. So we are in a very dangerous situation: regulatory pressure is high, regulators do not fully understand how this technology works, and we have very little to protect users from manipulation.

Manipulative content, misleading content, even without ads: you can just take a screenshot of something, change the headline, post it on Twitter, and people go crazy. There is an economic incentive that pushes you to keep maximizing revenue. And it is not as if anyone inside Google is setting out to do evil. When you decide which model to launch, you run an A/B test to see which one brings in more revenue. So you keep maximizing revenue by extracting more value from users. Meanwhile, users and the community have no say in what goes into the model, what data is used, or what goal it is actually optimizing for. This is the situation for users of these applications; the tuning happens without them.
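The A/B testing dynamic described here can be shown with a few lines of Python. The two variants and all of their numbers are invented for illustration; the point is only that the metric you select on determines which model ships.

```python
# Invented results of an A/B test between two model variants.
variants = {
    "A": {"revenue_per_user": 1.00, "user_rating": 4.5},
    "B": {"revenue_per_user": 1.20, "user_rating": 3.9},
}


def pick_by(metric):
    """Return the name of the variant that scores highest on the given metric."""
    return max(variants, key=lambda name: variants[name][metric])


print(pick_by("revenue_per_user"))  # B
print(pick_by("user_rating"))       # A
```

If revenue is the only metric in the selection step, variant B ships even though users rate it lower, and nobody had to make a consciously harmful decision.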

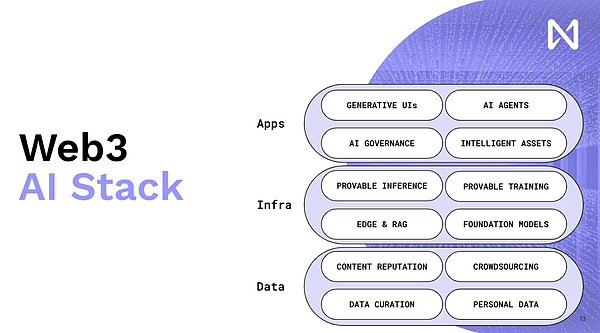

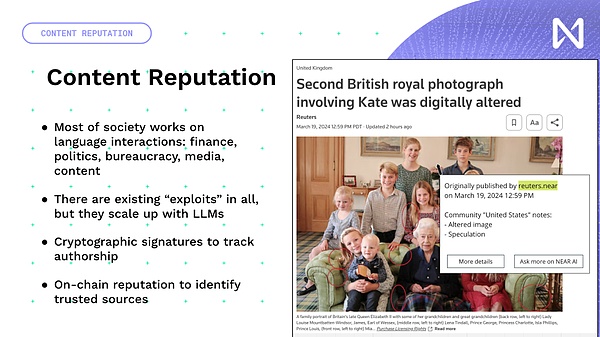

This is why we keep pushing for the integration of Web3 and AI. Web3 can be an important tool that gives us new incentives and motivates us to produce better software and products in a decentralized way. This is the general direction of Web3 AI development as a whole. To help with the details, I will briefly talk about specific parts. The first part is content reputation.

Again, this is not purely an AI problem, although language models have massively amplified and scaled people's ability to manipulate and exploit information. What you want is trackable, traceable cryptographic reputation that shows up when you look at different content. So imagine community notes that are cryptographically secured and available on every page of every website. And if you look beyond that, all of these distribution platforms are going to be disrupted, because models will now read almost all of this content for you and give you personalized summaries and personalized output.

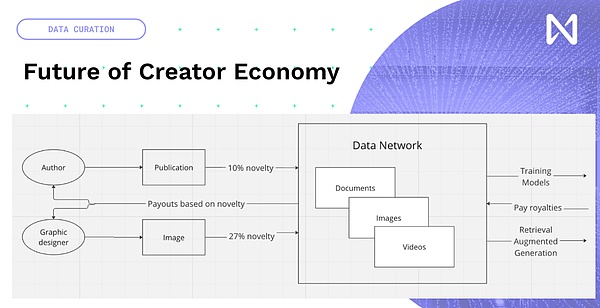

So we actually have an opportunity to build a new creator economy, instead of reinventing the "let's add blockchain and NFTs to existing content" approach. In a new creator economy built around model training and inference time, the data people create, whether new publications, photos, YouTube videos, or music, enters a network and is valued by how much it contributes to model training. On that basis, creators can be compensated globally for their content. So we move from today's attention economy, driven by advertising networks, to one that rewards genuinely innovative and interesting information.
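One simple way to picture compensation "based on how much it contributes to model training" is a proportional split of a reward pool. The sketch below is purely hypothetical: the talk does not specify a payout rule, the contribution scores and pool size are made up, and measuring contribution in practice is an open problem that this example does not address.

```python
# Hypothetical contribution scores for three pieces of content.
contribution_scores = {
    "article": 5,
    "photo": 3,
    "song": 2,
}

# Hypothetical reward pool, in arbitrary units.
reward_pool = 1000


def proportional_payouts(scores, pool):
    """Split the pool in proportion to each item's contribution score."""
    total = sum(scores.values())
    return {name: pool * score / total for name, score in scores.items()}


payouts = proportional_payouts(contribution_scores, reward_pool)
print(payouts)  # {'article': 500.0, 'photo': 300.0, 'song': 200.0}
```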

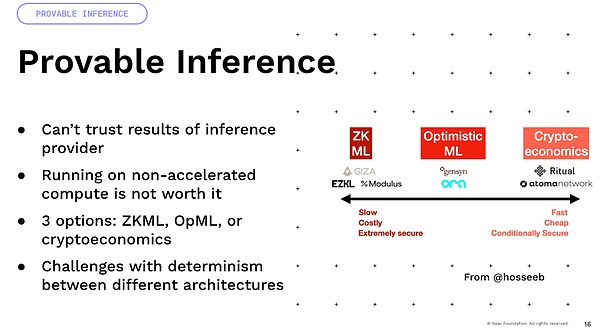

One important thing I want to mention is that a lot of non-determinism comes from floating point operations. All of these models involve a huge number of floating point operations and multiplications, and in practice these operations are not deterministic.
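One well-known reason floating point results can differ between runs or between hardware is that floating point addition is not associative: grouping the same numbers differently can change the result. Parallel hardware may accumulate partial sums in different orders, so the final bits can differ. The snippet below demonstrates the effect with ordinary Python floats; it does not model any particular GPU.

```python
import math

a, b, c = 0.1, 0.2, 0.3

left_to_right = (a + b) + c  # one possible order of accumulation
right_to_left = a + (b + c)  # the same numbers, grouped differently

print(left_to_right == right_to_left)              # False
print(math.isclose(left_to_right, right_to_left))  # True: close, but not identical

# The gap can be much larger when magnitudes differ a lot.
big = 1e16
print((big + 1.0) - big)  # 0.0: the 1.0 is lost when added to the large value
print((big - big) + 1.0)  # 1.0
```

For a protocol that needs two parties to reproduce exactly the same bits, "close" is not good enough, which is where the difficulty comes from.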

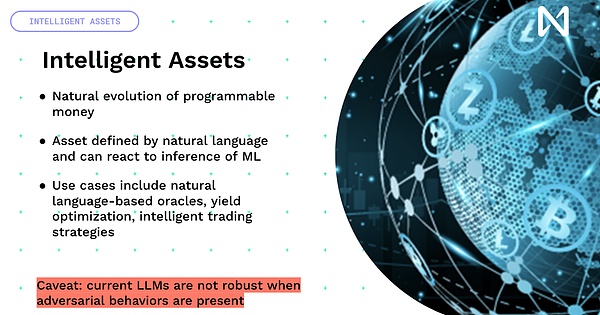

If you run those multiplications on GPUs with different architectures, say an A100 and an H100, the results will differ. So many approaches that rely on determinism, such as cryptoeconomic and optimistic approaches, run into a lot of difficulty and need a lot of innovation to make this work.

Finally, there is an interesting idea. We have been building programmable money and programmable assets, but imagine adding this intelligence to them: you get smart assets that are defined not by code, as they are today, but by the ability to interact with the world in natural language. That is how we could get a lot of interesting yield optimization in DeFi, and trading strategies that operate within the world.

The challenge is that none of the current models behave robustly. They are not trained to be adversarially robust, because the training objective is simply to predict the next token. So it is fairly easy to convince a model to give you all the money. It is very important to solve this problem before going further. So I will leave you with this thought: we are at a crossroads. On one side there is a closed AI ecosystem with extreme incentives and a flywheel: when they launch a product, it generates a lot of revenue, and that revenue goes back into building the product. But that product is designed to maximize the company's revenue, and therefore the value extracted from users. On the other side there is the open, user-owned approach, where users are in control.

In the open approach, the models actually work for you and try to maximize your interests. They give you a way to really protect yourself from the many dangers on the internet. That is why we need more development and applications of AI x Crypto. Thank you, everyone.