Author: Lita Foundation, Translation: Bit Chain Vision Xiaozou

“Within the next five years, we will be talking about the applications of zero-knowledge protocols the way we talk about the applications of blockchain protocols. The potential unlocked by the breakthroughs of the past few years is going to sweep the crypto mainstream.”

Jill, CSO of Espresso Systems, May 2021

Since 2021, the zero-knowledge (ZK) landscape has evolved into a diverse ecosystem of primitives, networks, and applications spanning multiple domains. ZK has matured steadily, and the launch of ZK-powered rollups such as Starknet and zkSync Era marks the field's latest progress. Even so, most of ZK remains a mystery, both to ZK users and to crypto as a whole.

But the times are changing. We believe zero-knowledge cryptography is a powerful, general-purpose tool for scaling and securing software. Put simply, ZK is the bridge to mass adoption of crypto. To quote Jill again, anything involving zero-knowledge proofs (ZKPs) will create enormous value, both fundamental and speculative, whether in web2 or web3. The best talent in crypto is iterating to make the ZK economy viable and production-ready. Even so, the industry still has a long way to go before the model we envision becomes reality.

Consider how Bitcoin was adopted. One reason Bitcoin grew from internet money on fringe enthusiast forums into the "digital gold" endorsed by BlackRock is the growing body of content created by developers and the community, which cultivated public interest. Today, ZK exists in a bubble within a bubble. Information is fragmented. Articles are either full of obscure jargon or too superficial, offering nothing meaningful beyond recycled examples. It seems everyone, experts and laypeople alike, knows what a zero-knowledge proof is, yet no one can describe how one actually works.

We are one of the teams contributing to the zero-knowledge paradigm. We want to demystify our work and help a wider audience build a common foundation for understanding ZK systems and applications. The goal is to foster education and discussion among stakeholders and to let relevant information spread.

In this article, we introduce the basics of zero-knowledge proofs and zero-knowledge virtual machines and summarize how a zkVM operates. We finish by analyzing the criteria for evaluating zkVMs.

1. Zero-Knowledge Proof Basics

What is a zero-knowledge proof (ZKP)?

In short, a ZKP lets one party (the prover) prove to another party (the verifier) that it knows something. The prover does this without revealing what that thing is or any other information about it. More specifically, a ZKP proves knowledge of a piece of data, or of the result of a computation, without revealing the data or the inputs. Creating a zero-knowledge proof involves a series of mathematical models that transform the result of a computation into otherwise meaningless information. That information proves the code executed successfully, and it can be verified later.

A proof is built through multiple rounds of algebraic transformation and cryptography. In some cases, verifying that proof takes less work than running the computation itself. This unique combination of security and scalability is what makes zero-knowledge cryptography such a powerful tool.
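The prover and verifier roles can be made concrete with the classic Schnorr identification protocol. In this minimal sketch, the prover convinces the verifier that it knows a secret x with y = g^x mod p without revealing x. It is a textbook example chosen only for illustration: it is honest-verifier zero-knowledge, and it is not the proof system any particular zkVM uses. The tiny group parameters are assumptions and are completely insecure.

```python
import secrets

# Toy group parameters (INSECURE, for illustration only): p = 2q + 1 is a
# safe prime and g = 4 generates the subgroup of prime order q.
P, Q, G = 2039, 1019, 4


def keygen() -> tuple[int, int]:
    """Return a secret x and the public value y = g^x mod p."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


class Prover:
    def __init__(self, x: int):
        self.x = x  # the secret the prover claims to know

    def commit(self) -> int:
        self.r = secrets.randbelow(Q)  # fresh random nonce for each run
        return pow(G, self.r, P)

    def respond(self, c: int) -> int:
        # The uniformly random nonce r masks x inside the response.
        return (self.r + c * self.x) % Q


def verify(y: int, t: int, c: int, s: int) -> bool:
    """Accept iff g^s == t * y^c (mod p); x itself is never needed."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def run_protocol(prover: Prover, y: int) -> bool:
    t = prover.commit()          # 1. prover -> verifier: commitment
    c = secrets.randbelow(Q)     # 2. verifier -> prover: random challenge
    s = prover.respond(c)        # 3. prover -> verifier: response
    return verify(y, t, c, s)    # 4. verifier decides


x, y = keygen()
print(run_protocol(Prover(x), y))            # True
print(run_protocol(Prover((x + 1) % Q), y))  # False, except with probability 1/q
```

The verifier only ever sees t, c and s. Because r is uniform, s on its own is uniformly distributed and leaks nothing about x.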

zkSNARK: Zero-Knowledge Succinct Non-Interactive Argument of Knowledge

· Relies on an initial setup process (trusted or trustless) to establish the parameters used for verification

· Requires only one message from the prover to the verifier: the proof itself

· Proofs are small and easy to verify

· Rollups such as zkSync, Scroll, and Linea use SNARK-based proofs

zkSTARK: Zero-Knowledge Scalable Transparent Argument of Knowledge

· No trusted setup required

· Provides high transparency by using publicly verifiable randomness to build trustless, verifiable systems: the random parameters used for proving and verification can be shown to have been generated fairly

· Highly scalable, because proofs can be generated and verified quickly (though not always), even when the underlying witness (data) is large

· No interaction between prover and verifier

· The trade-off is that STARKs produce larger proofs, which can be harder to verify than SNARKs

· Proofs are harder to verify than some zkSNARK proofs but easier to verify than others

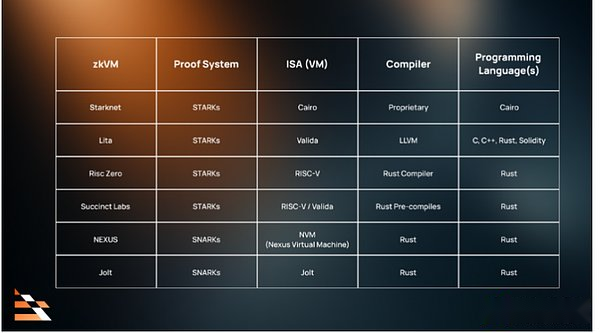

· Starknet and zkVMs such as those built by Lita, RISC Zero, and Succinct Labs use STARKs.

(Note: Succinct's bridge uses SNARKs, but SP1 is based on a STARK protocol.)

It is worth noting that all STARKs are SNARKs, but not all SNARKs are STARKs.

2. What Is a zkVM?

A virtual machine (VM) is a program that runs programs. In this context, a zkVM is a virtual computer that generates zero-knowledge proofs for any program or computation. It can be implemented as a system, a universal circuit, or a tool.

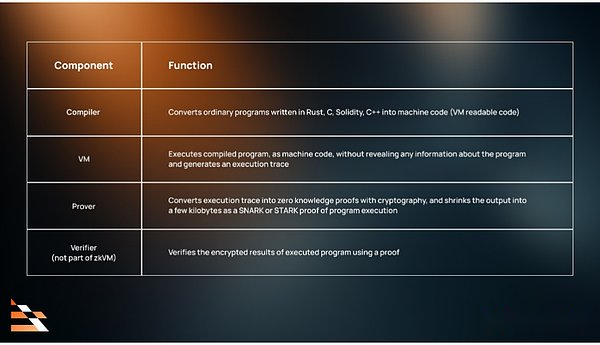

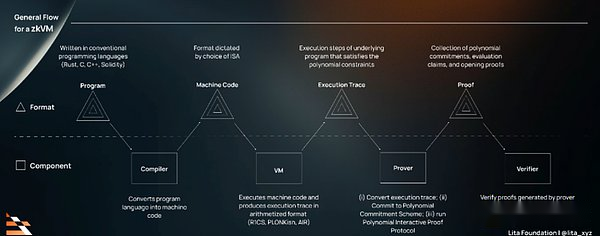

Without a zkVM, developers must learn complex mathematics and cryptography to design and code ZK circuits. A zkVM removes that requirement: any developer can run programs written in their preferred language and generate ZKPs, which makes integrating and interacting with zero knowledge much easier. Broadly speaking, "zkVM" usually covers more than the virtual machine itself. It also includes the compiler toolchain and the proof system attached to the VM that executes the programs. Below, we summarize the main components of a zkVM and their functions:


The design and implementation of each component depend on two choices: the zkVM's proof system (SNARKs or STARKs) and its instruction set architecture (ISA). Traditionally, an ISA specifies what a CPU can do (data types, registers, memory, etc.) and the sequence of operations the CPU performs when executing a program. In this context, the ISA determines the machine code that the VM can interpret and execute. The choice of ISA can make fundamental differences to a zkVM's accessibility and usability, and to the speed and efficiency of proof generation. It underpins the construction of any zkVM.

Below are some examples of zkVMs and their components, for reference only.


Next, we focus on the high-level interactions between these components. This gives a framework that later articles will use to explain the algebraic and cryptographic processes, and the design trade-offs, of zkVMs.

3. The Abstract zkVM Process

The figure below is an abstract, generalized zkVM flowchart. It breaks down and categorizes the format (inputs/outputs) of a program as it moves between zkVM components.


The zkVM process generally works as follows:

(1) Compilation phase

The compiler first compiles a program written in a conventional language (C, C++, Rust, Solidity) into machine code. The chosen ISA determines the format of that machine code.
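The following minimal sketch shows what "compiling to machine code for a chosen ISA" means. It compiles arithmetic expressions into a hypothetical five-instruction stack ISA (LOAD, PUSH, ADD, SUB, MUL) using Python's own parser. The ISA and the function name are assumptions made only for illustration. Production zkVMs compile full languages to real ISAs such as RISC-V.

```python
import ast

# Hypothetical toy ISA: binary operators map to stack instructions.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL"}


def compile_expr(source: str, inputs: list[str]) -> list[tuple[str, int]]:
    """Compile an arithmetic expression into (opcode, operand) machine code."""
    code: list[tuple[str, int]] = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            emit(node.left)                 # operands first (postfix order)
            emit(node.right)
            code.append((OPCODES[type(node.op)], 0))
        elif isinstance(node, ast.Constant) and isinstance(node.value, int):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.Name) and node.id in inputs:
            code.append(("LOAD", inputs.index(node.id)))  # read an input slot
        else:
            raise ValueError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


print(compile_expr("a * b + 3", ["a", "b"]))
# [('LOAD', 0), ('LOAD', 1), ('MUL', 0), ('PUSH', 3), ('ADD', 0)]
```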

(2) VM phase

The VM executes the machine code and produces an execution trace: the series of steps the underlying program goes through. The trace's format is determined by the chosen arithmetization scheme and its set of polynomial constraints. Common arithmetization schemes include R1CS in Groth16, Plonkish arithmetization in Halo2, and AIR in Plonky2 and Plonky3.
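The following minimal sketch shows a VM executing machine code while recording an execution trace. It assumes the same kind of hypothetical stack ISA as a toy compiler would emit and wraps all arithmetic modulo a prime, as proof systems work over finite fields. A real zkVM trace records the full machine state for every cycle (registers, memory accesses, program counter). To keep the example short, this one stores only the program counter, the opcode and the top of the stack.

```python
MODULUS = 2**31 - 1  # toy prime field: all arithmetic wraps modulo this prime
ALU = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "MUL": lambda a, b: a * b,
}

# Machine code for the hypothetical stack ISA; it computes a * b + 3.
PROGRAM = [("LOAD", 0), ("LOAD", 1), ("MUL", 0), ("PUSH", 3), ("ADD", 0)]


def execute(program: list[tuple[str, int]], inputs: list[int]):
    """Run the program; return (output, execution trace)."""
    stack: list[int] = []
    trace: list[tuple[int, str, int]] = []
    for pc, (op, arg) in enumerate(program):
        if op == "PUSH":
            stack.append(arg % MODULUS)
        elif op == "LOAD":
            stack.append(inputs[arg] % MODULUS)
        elif op in ALU:
            rhs = stack.pop()
            lhs = stack.pop()
            stack.append(ALU[op](lhs, rhs) % MODULUS)
        else:
            raise ValueError(f"unknown opcode: {op}")
        trace.append((pc, op, stack[-1]))  # one row per executed step
    return stack[-1], trace


output, trace = execute(PROGRAM, [6, 7])
print(output)  # 45
for row in trace:
    print(row)
```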

(3) Proving phase

The prover receives the trace and represents it as a set of polynomials bound by a set of constraints. In effect, this translates the computation into algebra by mapping its facts onto mathematical objects.
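Arithmetization turns "the trace is valid" into "certain polynomial constraints vanish." The following minimal sketch gives an AIR-style example. It assumes a one-column trace produced by a Fibonacci-like program. A boundary constraint fixes the initial values, and a transition constraint must hold on every window of consecutive rows. Real systems interpolate trace columns into polynomials and enforce these constraints as polynomial identities over a domain. This sketch simply checks them row by row.

```python
P = 2**31 - 1  # toy prime field modulus (an assumption, not any zkVM's field)


def fibonacci_trace(n: int) -> list[int]:
    """Trace of a tiny program that repeatedly computes a[i+2] = a[i+1] + a[i]."""
    trace = [1, 1]
    while len(trace) < n:
        trace.append((trace[-1] + trace[-2]) % P)
    return trace


def boundary_ok(trace: list[int]) -> bool:
    # Boundary constraints pin down the initial conditions.
    return trace[0] == 1 and trace[1] == 1


def transition_ok(trace: list[int]) -> bool:
    # Transition constraint C(x0, x1, x2) = x2 - x1 - x0 must vanish (mod P)
    # on every window of three consecutive rows.
    return all((trace[i + 2] - trace[i + 1] - trace[i]) % P == 0
               for i in range(len(trace) - 2))


trace = fibonacci_trace(8)
print(trace)                                        # [1, 1, 2, 3, 5, 8, 13, 21]
print(boundary_ok(trace) and transition_ok(trace))  # True

tampered = trace.copy()
tampered[5] += 1                                    # one dishonest step
print(transition_ok(tampered))                      # False
```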

The prover commits to these polynomials using a polynomial commitment scheme (PCS). A commitment scheme is a protocol that lets the prover create a fingerprint of some data X, called a commitment to X. The prover can later use that commitment to prove facts about X without revealing X. The PCS is the fingerprint: a succinct, "preprocessed" version of the constraints on the computation. In the following steps, the prover uses it to prove facts about the computation, which are now expressed as polynomial equations, against random values proposed by the verifier.
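The "fingerprint" idea can be illustrated with a Merkle-tree commitment to a vector of polynomial evaluations. This is the building block that hash-based schemes such as FRI, used by STARKs, rely on. The sketch below is not a complete PCS. It only shows committing to a list of values and opening one position with an authentication path. It is binding but, without random salts, not hiding. The function names are illustrative assumptions.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf(value: int) -> bytes:
    return h(b"leaf" + value.to_bytes(32, "big"))


def merkle_layers(values: list[int]) -> list[list[bytes]]:
    """Build every tree layer; len(values) must be a power of two."""
    assert values and len(values) & (len(values) - 1) == 0
    layers = [[leaf(v) for v in values]]
    while len(layers[-1]) > 1:
        prev = layers[-1]
        layers.append([h(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return layers


def commit(values: list[int]) -> bytes:
    return merkle_layers(values)[-1][0]  # the root is the short fingerprint


def open_at(values: list[int], index: int) -> list[bytes]:
    """Authentication path: the sibling hash at each level below the root."""
    path = []
    for layer in merkle_layers(values)[:-1]:
        path.append(layer[index ^ 1])
        index //= 2
    return path


def verify_opening(root: bytes, index: int, value: int, path: list[bytes]) -> bool:
    node = leaf(value)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


evals = [3, 1, 4, 1, 5, 9, 2, 6]  # e.g. evaluations of a committed polynomial
root = commit(evals)
proof = open_at(evals, 5)
print(verify_opening(root, 5, 9, proof))  # True
print(verify_opening(root, 5, 8, proof))  # False
```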

The prover runs a Polynomial Interactive Oracle Proof (PIOP) to show that the committed polynomials represent an execution trace that satisfies the given constraints. A PIOP is an interactive proof protocol with three moves. The prover sends commitments to polynomials, the verifier responds with random field values, and the prover supplies evaluations of the polynomials at those values. This is similar to "solving" polynomial equations at random values to convince the verifier probabilistically.
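Checking polynomials at random values works because of the Schwartz-Zippel lemma: two different polynomials of degree at most d agree on at most d points of a field. The following minimal sketch assumes a toy prime field and a simple claimed identity a(X) * b(X) = c(X). In a real PIOP, the verifier never sees the full coefficient lists used here. It only receives committed polynomials and their evaluations.

```python
import secrets
from typing import Optional

P = 2**61 - 1  # Mersenne prime used as a toy field modulus (an assumption)


def evaluate(coeffs: list[int], x: int) -> int:
    """Evaluate a polynomial given low-degree-first coefficients (Horner's rule)."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def poly_mul(a: list[int], b: list[int]) -> list[int]:
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] = (out[i + j] + ai * bj) % P
    return out


def random_check(a: list[int], b: list[int], c: list[int],
                 r: Optional[int] = None) -> bool:
    """Verifier's probabilistic test of the claimed identity a(X) * b(X) == c(X).

    If the identity is false, a uniformly random r passes with probability at
    most max(deg(a*b), deg(c)) / P (Schwartz-Zippel lemma).
    """
    if r is None:
        r = secrets.randbelow(P)  # the verifier's random challenge
    return evaluate(a, r) * evaluate(b, r) % P == evaluate(c, r)


a, b = [1, 2], [3, 0, 1]            # a(X) = 1 + 2X, b(X) = 3 + X^2
honest = poly_mul(a, b)             # [3, 6, 1, 2]
cheating = honest[:-1] + [3]        # wrong leading coefficient
print(random_check(a, b, honest))   # True
print(random_check(a, b, cheating)) # False, except with probability at most 3/P
```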

Next, the prover applies the Fiat-Shamir heuristic and runs the PIOP in non-interactive mode. In this mode, the verifier's role is reduced to supplying pseudorandom challenge points, which are derived by hashing the transcript. In cryptography, the Fiat-Shamir heuristic is known for converting an interactive proof of knowledge into a digital signature that anyone can verify. This step makes the proof non-interactive. Combined with the protocol's zero-knowledge properties, the result is a non-interactive zero-knowledge proof.
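The following minimal sketch applies the Fiat-Shamir transform to the textbook Schnorr proof of knowledge of a discrete logarithm. This protocol is chosen for illustration and is not taken from any zkVM. The verifier's random challenge is replaced by a hash of the transcript, which makes the proof non-interactive. Binding a message into that hash turns it into a Schnorr-style signature. The tiny group parameters are insecure assumptions.

```python
import hashlib
import secrets

# Toy group (INSECURE, illustration only): p = 2q + 1, and g = 4 has prime order q.
P, Q, G = 2039, 1019, 4


def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    data = b"".join(v.to_bytes(32, "big") for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int, message: int = 0) -> tuple[int, int, int]:
    """Non-interactive proof of knowledge of x with y = g^x mod p."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)            # fresh nonce that masks x
    t = pow(G, r, P)
    c = challenge(G, y, t, message)     # replaces the verifier's random coin
    s = (r + c * x) % Q
    return y, t, s


def verify(y: int, t: int, s: int, message: int = 0) -> bool:
    c = challenge(G, y, t, message)     # anyone can recompute the challenge
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove(x=123, message=42)
print(verify(y, t, s, message=42))            # True
print(verify(y, t, (s + 1) % Q, message=42))  # False
```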

The prover must also convince the verifier that the polynomial evaluations it sent are correct. To do so, the prover produces an "evaluation" or "opening" proof, which the polynomial commitment scheme (the fingerprint) provides.
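Many opening proofs rest on one algebraic fact: p(z) = v exactly when (X - z) divides p(X) - v. The prover can therefore send the quotient q(X) = (p(X) - v) / (X - z) as evidence. The following minimal sketch shows that idea over a toy prime field. For simplicity, the verifier here reads p directly and tests the identity at a random point. A scheme such as KZG instead checks the same identity against a commitment, at a secret point, using elliptic-curve pairings.

```python
import secrets

P = 2**61 - 1  # toy prime field modulus (an assumption)


def evaluate(coeffs: list[int], x: int) -> int:
    """Horner evaluation; coefficients are listed from lowest degree up."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def open_proof(coeffs: list[int], z: int) -> tuple[int, list[int]]:
    """Prover: return the claimed value v = p(z) and the quotient
    q(X) = (p(X) - v) / (X - z), computed by synthetic division."""
    q = [0] * (len(coeffs) - 1)
    acc = 0
    for i in range(len(coeffs) - 1, 0, -1):
        acc = (acc * z + coeffs[i]) % P
        q[i - 1] = acc
    v = (acc * z + coeffs[0]) % P  # the remainder of the division is p(z)
    return v, q


def check_opening(coeffs: list[int], z: int, v: int, q: list[int]) -> bool:
    """Verifier: test p(r) - v == (r - z) * q(r) at a random point r."""
    r = secrets.randbelow(P)
    return (evaluate(coeffs, r) - v) % P == (r - z) * evaluate(q, r) % P


p = [5, 0, 2, 1]                      # p(X) = 5 + 2X^2 + X^3
v, q = open_proof(p, 3)
print(v, q)                           # 50 [15, 5, 1]
print(check_opening(p, 3, v, q))      # True
print(check_opening(p, 3, v + 1, q))  # False
```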

(4) Verification phase

The verifier checks the proof by following the proof system's verification protocol, using either the constraints or the commitments. It then accepts or rejects the result based on the proof's validity.

In short, a zkVM proof fixes a program, a result and a set of initial conditions. It proves that some input exists that makes the program produce that result when executed from those initial conditions. Combining this statement with the process above yields the following description of a zkVM.

A zkVM proof proves the following: for a given VM program and a given output, some input exists that causes the program to produce that output when executed on the VM.
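The same statement can be written as a relation. The notation below is ours, not the authors'. Here prog is the program, s_0 is the initial VM state, y is the claimed output, and x is the (possibly private) input:

$$
\mathcal{R}_{\mathrm{zkVM}} = \bigl\{\, ((\mathit{prog},\, s_0,\, y),\ x) \;:\; \mathrm{Exec}_{\mathrm{VM}}(\mathit{prog},\, s_0,\, x) = y \,\bigr\}
$$

A zkVM proof for the public statement (prog, s_0, y) attests that some x with ((prog, s_0, y), x) in R_zkVM exists. With zero knowledge, it reveals nothing further about x.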

4. Evaluating zkVMs

What are the criteria for evaluating zkVMs? In other words, under what circumstances should we say one zkVM is better than another? In practice, the answer depends on the use case.

Lita's market research suggests that for most commercial use cases, the most important of speed, efficiency and succinctness is either speed or core-time efficiency, depending on the application. Some applications are price-sensitive and want the proving process optimized for low energy use and low cost. For these, core-time efficiency is probably the most important metric to optimize. Others, especially finance- or trading-related applications, are highly latency-sensitive and need to optimize for speed.

Most published performance comparisons focus on speed. Speed is of course important, but it is not a complete measure of performance. Several other important attributes measure a zkVM's reliability. On most of them, zkVMs do not yet meet production standards, and this includes even the market-leading incumbents.

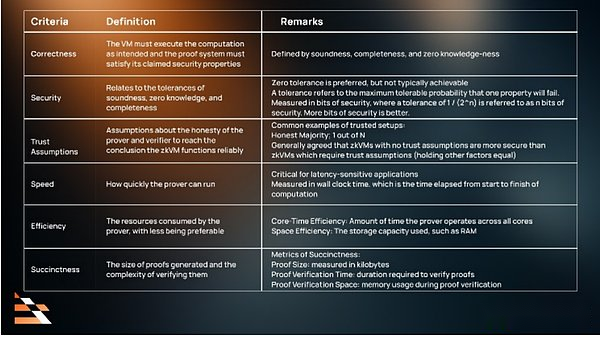

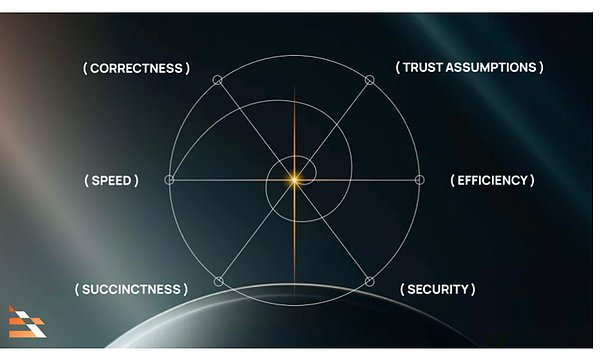

We propose evaluating zkVMs against the following criteria, divided into two subcategories:


Baseline: measures a zkVM's reliability

· Correctness

· Security

· Trust assumptions

Performance: measures a zkVM's capabilities

· Efficiency

· Speed

· Succinctness

(1) Baseline: correctness, security, and trust assumptions

When evaluating zkVMs for mission-critical applications, correctness and security should serve as the baseline. There must be sufficient reason to be confident in correctness, and the claimed security must be strong enough. In addition, the trust assumptions must be weak enough for the application.

Without these properties, a zkVM may be worse than useless for an application. It may not perform as specified, and it may expose users to hacks and exploits.

Correctness

· The VM must perform the computation as expected

· The proof system must satisfy the security properties it claims

Correctness comprises three main properties:

· Soundness: The proof system is truthful, so everything it proves is true. The verifier rejects proofs of false statements and accepts a computation's result only if that result was actually computed.

· Completeness: The proof system is complete and can prove every true statement. If the prover claims it can prove a computation's result, it must be able to produce a proof that the verifier accepts.

· Zero knowledge: Possessing a proof reveals no more information about the computation's inputs than the result itself does.

You can have completeness without soundness. A system that proves everything, including false statements, is obviously complete but not sound. You can also have soundness without completeness. Suppose a system can prove that a program exists but cannot prove any computation. It is obviously sound, since it never proves a false statement, but it is not complete.

Security

· Concerns the tolerances for soundness, completeness, and zero knowledge

In practice, all three correctness properties have non-zero tolerances. This means every proof is correct only with high statistical probability, not with absolute certainty. A tolerance is the maximum acceptable probability that a property fails. Zero tolerance is of course ideal, but in practice zkVMs have not achieved zero tolerance on all of these properties. Perfect soundness and completeness appear to be incompatible with succinctness, and no known method achieves perfect zero knowledge for these systems. Security is commonly measured in bits: a tolerance of 1/2^n is called n bits of security. The more bits, the stronger the security.
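The bit-security convention is just a base-2 logarithm. The minimal sketch below assumes the simplest kind of soundness argument: independent random-point checks of a polynomial identity, as in the Schwartz-Zippel lemma. Real proof systems such as FRI-based STARKs have more involved soundness analyses. The field size and degree below are illustrative assumptions.

```python
import math


def security_bits(error: float) -> float:
    """n bits of security corresponds to a failure probability of 2^-n."""
    return -math.log2(error)


def repeated_check_bits(degree: int, field_size: int, repetitions: int) -> float:
    """Bits of soundness for `repetitions` independent random-point checks.

    Each check of a degree-`degree` identity misses a false claim with
    probability at most degree / field_size, so k independent checks give an
    error of at most (degree / field_size) ** k.
    """
    return repetitions * (math.log2(field_size) - math.log2(degree))


print(security_bits(2**-100))                # 100.0
print(repeated_check_bits(2**20, 2**64, 1))  # 44.0
print(repeated_check_bits(2**20, 2**64, 3))  # 132.0
```

A single check over a field of about 2^64 elements falls well short of a 100-bit target for large polynomials. This is why practical systems repeat checks or work in extension fields.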

A zkVM that is fully correct is not necessarily reliable. Correctness only means the zkVM satisfies its security properties within its claimed tolerances. It does not mean those tolerances are low enough for production. Conversely, a zkVM that is sufficiently secure is not necessarily correct. Security refers to the claimed tolerances, not to the tolerances the implementation actually achieves. A zkVM can be considered reliable within its claimed tolerances only when it is both fully correct and sufficiently secure.

Trust assumptions

· Assumptions about the honesty of the prover and verifier that are needed to conclude the zkVM operates reliably

When a zkVM has trust assumptions, they usually take the form of a trusted setup. A ZK proof system runs its setup process once, before it generates its first proof, to produce some information called "setup data". During a trusted setup, one or more individuals generate randomness that is incorporated into the setup data. The system must then assume that at least one of these individuals deleted the randomness they contributed.
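The "at least one participant deleted their randomness" condition can be seen in a toy "powers of tau" ceremony, a common form of trusted setup for pairing-based SNARKs. This is a minimal sketch under stated assumptions. It uses a tiny, insecure multiplicative group instead of an elliptic curve, and it omits the proofs of correct contribution that real ceremonies publish. Each participant multiplies the hidden secret tau by fresh randomness. In a real (elliptic-curve) setting, recovering the final tau therefore requires every contribution.

```python
import secrets

# Toy group (INSECURE, illustration only): p = 2q + 1, and g = 4 has prime order q.
P, Q, G = 2039, 1019, 4
DEGREE = 4  # the setup supports polynomials up to this degree


def contribute(srs: list[int], s: int) -> list[int]:
    """Re-randomize the setup data with one participant's secret s.

    If srs[k] == g^(tau^k), the result is g^((tau*s)^k): the hidden secret
    becomes tau*s, and nobody ever needs to see tau itself.
    """
    return [pow(elem, pow(s, k, Q), P) for k, elem in enumerate(srs)]


def ceremony(participants: int) -> tuple[list[int], list[int]]:
    srs = [G] * (DEGREE + 1)              # g^(1^k) for every k: tau starts at 1
    contributions = []
    for _ in range(participants):
        s = secrets.randbelow(Q - 1) + 1  # nonzero randomness ("toxic waste")
        srs = contribute(srs, s)
        contributions.append(s)           # kept only so the demo can check it;
                                          # real participants delete s here
    return srs, contributions


srs, contributions = ceremony(3)
tau = 1
for s in contributions:
    tau = tau * s % Q                     # the combined secret is the product
print(srs == [pow(G, pow(tau, k, Q), P) for k in range(DEGREE + 1)])  # True
```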

Two trust assumption models are common in practice.

The "honest majority" trust assumption states that, in a group of N people, more than half behave honestly in certain specific interactions with the system. This is the trust assumption typically used by blockchains.

The "1/N" trust assumption states that, in a group of N people, at least one behaves honestly in certain specific interactions with the system. This is the assumption behind the multi-party trusted setup ceremonies described above.

It is generally accepted that, all else being equal, a zkVM with no trust assumptions is more secure than one that requires them.

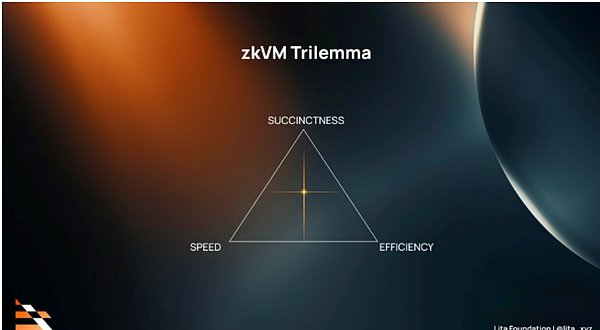

(2) The zkVM trilemma: balancing speed, efficiency, and succinctness


Speed, efficiency and succinctness are all sliding-scale properties, and all of them feed into a zkVM's cost for end users. How to weigh them in an evaluation depends on the application. Often, the fastest solution is not the most efficient or the most succinct. Likewise, the most succinct solution is not the fastest or the most efficient, and so on. Before explaining how they relate, let's define each property.

Speed

· How fast the prover generates proofs

· Measured in wall-clock time, i.e., the time elapsed from start to finish

Speed should be defined and measured with respect to specific test programs, inputs and systems, so that it can be evaluated quantitatively. This metric is critical for latency-sensitive applications, where timely proof availability is essential. Optimizing for it, however, comes at the cost of higher overhead and larger proofs.

Efficiency

· The fewer resources the prover consumes, the better.

· Approximates user time, i.e., the computer time spent executing the program's code.

The prover consumes two resources: core time and space. Efficiency can therefore be subdivided into core-time efficiency and space efficiency.

Core-time efficiency: the average time the prover runs on each core, multiplied by the number of cores running the prover.

For a single-core prover, core-time consumption and speed are the same thing. For a prover that can use multiple cores and runs on a multi-core system, they differ. If a program fully uses 5 cores or threads for 5 seconds, that is 25 seconds of user time but only 5 seconds of wall-clock time.
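The two quantities can be computed directly from per-core busy intervals. This is a minimal sketch with a hypothetical helper that takes idealized (start, end) busy intervals, one per core. Real measurements would come from a profiler or from the operating system's CPU-time accounting.

```python
def speed_and_core_time(intervals: list[tuple[float, float]]) -> tuple[float, float]:
    """Return (wall-clock time, total core time) for per-core busy intervals.

    Each (start, end) pair is the span, in seconds, during which one core was
    fully busy running the prover (an idealized, hypothetical input format).
    """
    wall = max(end for _, end in intervals) - min(start for start, _ in intervals)
    core = sum(end - start for start, end in intervals)
    return wall, core


# Five cores fully busy from t = 0 s to t = 5 s, as in the example above.
print(speed_and_core_time([(0.0, 5.0)] * 5))  # (5.0, 25.0)

# The same 25 core-seconds on a single core: same core time, 5x slower.
print(speed_and_core_time([(0.0, 25.0)]))     # (25.0, 25.0)
```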

Space efficiency: the amount of storage used, such as RAM.

User time is interesting as a proxy for the energy a computation consumes. When nearly all cores are fully utilized, a CPU's power draw should be roughly constant. In that case, consider CPU-bound code that runs mostly in user mode. Its user time should be roughly proportional to the watt-hours (energy) consumed by executing it.

Any proving operation of sufficient scale should care about saving energy or computing resources. The energy cost (or cloud computing cost) of proving is a considerable operating expense, and this is what makes user time an interesting metric. Lower proving costs let service providers pass lower prices on to cost-sensitive customers.

Both kinds of efficiency relate to the energy and capital the proving process consumes, and therefore to the financial cost of proving. To make efficiency measurable, its definition must be tied to one or more test programs, one or more test inputs for each program, and one or more test systems.

Succinctness

· Proof size and verification complexity

Succinctness combines three distinct metrics, with verification complexity split into time and space:

· Proof size: the physical size of the proof, typically measured in kilobytes.

· Proof verification time: the time required to verify the proof.

· Proof verification space: memory usage during proof verification.

Verification is usually a single-core operation, so here speed and core-time efficiency are usually the same thing. As with speed and efficiency, defining succinctness requires specifying test programs, test inputs and test systems.

With each performance property defined, we now show what happens when one property is optimized at the expense of the others.

· Speed: Fast proof generation leads to larger proofs, which are slower to verify. The more resources consumed to generate proofs, the lower the efficiency.

· Succinctness: The prover needs more time to compress proofs, but verification is fast. The more succinct the proof, the higher the computational overhead.

· Efficiency: Minimizing resource usage slows down proof generation and reduces proof succinctness.

In general, optimizing for one property means not optimizing for another. A multidimensional analysis is therefore needed to choose the best solution case by case.

A good way to weigh these properties in an evaluation may be to define an acceptable level for each one and then decide which matter most. The most important properties should be optimized, while all the others are kept at acceptable levels.
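The procedure of setting acceptable levels and then optimizing the most important property fits in a few lines. In the minimal sketch below, the candidate names and every number are hypothetical placeholders, not benchmarks of any real zkVM. "Lower is better" is assumed for every metric.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    prove_s: float    # speed: wall-clock proving time (seconds)
    core_s: float     # core-time efficiency: total core-seconds
    proof_kib: float  # succinctness: proof size (KiB)
    verify_ms: float  # succinctness: verification time (ms)


def pick(candidates: list[Candidate], limits: dict[str, float],
         optimize: str) -> Optional[Candidate]:
    """Drop candidates that exceed any acceptable limit, then minimize `optimize`."""
    acceptable = [c for c in candidates
                  if all(getattr(c, metric) <= limit for metric, limit in limits.items())]
    return min(acceptable, key=lambda c: getattr(c, optimize), default=None)


# Hypothetical numbers for illustration only; these are not real benchmarks.
zkvms = [
    Candidate("A", prove_s=20, core_s=600, proof_kib=200, verify_ms=15),
    Candidate("B", prove_s=60, core_s=300, proof_kib=250, verify_ms=20),
    Candidate("C", prove_s=10, core_s=900, proof_kib=1200, verify_ms=40),
]
limits = {"proof_kib": 500, "verify_ms": 30}  # acceptable succinctness levels

print(pick(zkvms, limits, "core_s").name)   # B (cost-sensitive application)
print(pick(zkvms, limits, "prove_s").name)  # A (latency-sensitive application)
```

Candidate C is the fastest prover but fails the succinctness limits, which mirrors the trade-off described above.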

Below, we summarize each property and its key considerations:
