Summary

Product competition in the AI era is inseparable from the resource side (computing power, data, and so on), and in particular from a stable supply of those resources.

Model training and iteration also require a large base of users (IP) to feed data, which is what produces qualitative changes in model performance.

Combining with Web3 can help small and medium-sized AI startup teams leapfrog the traditional AI giants.

For the DEPIN ecosystem, resources such as computing power and bandwidth determine a project's floor (simply aggregating computing power provides no moat), while the application and deep optimization of AI models (as with Bittensor, Render, and Hivemapper) and the effective use of data determine a project's ceiling.

In the context of AI+DEPIN, model inference and fine-tuning, along with the mobile AI model market, will receive growing attention.

AI market analysis & three questions

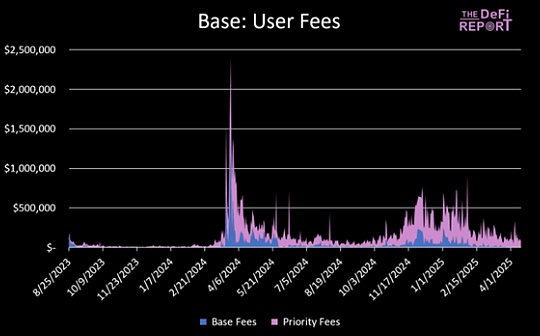

According to statistics, between September 2022 and August 2023, a period that spans the launch of ChatGPT, the top 50 AI products worldwide generated more than 24 billion visits, with average monthly growth of 236.3 million visits.

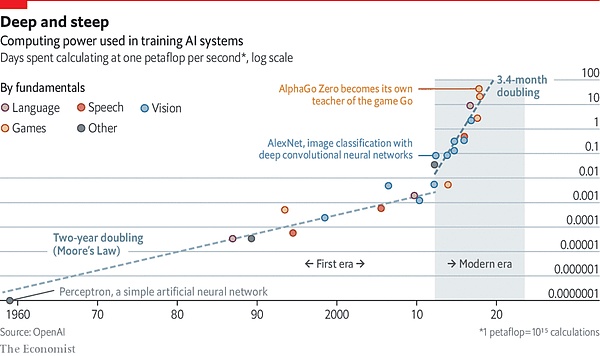

The boom in AI products has intensified their dependence on computing power.

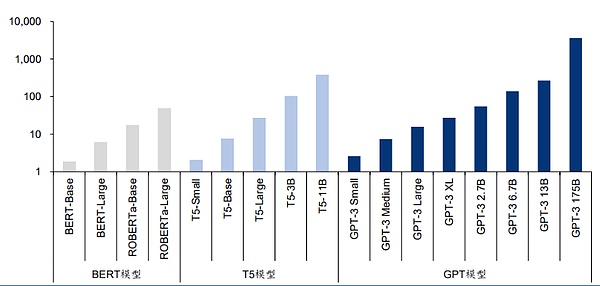

Source: “Language Models Are Few-Shot Learners”

A paper from the University of Massachusetts Amherst states that training a single artificial intelligence model can emit as much carbon as five cars over their lifetimes. However, this analysis covers only a single training run. When a model is improved through repeated training, energy use increases greatly.

The latest language models contain billions or even trillions of weights. One popular model, GPT-3, has 175 billion machine learning parameters. Training it on 1,024 A100 GPUs would take about 34 days and cost about 4.6 million US dollars.
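The training figures above can be turned into an implied price per GPU-hour with simple arithmetic. The sketch below uses only the numbers quoted in the text; the function names are illustrative, and it assumes every GPU runs around the clock for the full 34 days.

```python
# Figures quoted in the text: 1,024 A100 GPUs, 34 days, 4.6 million USD.
GPUS = 1024
DAYS = 34
TOTAL_COST_USD = 4_600_000


def gpu_hours(gpus: int, days: float) -> float:
    """Total GPU-hours consumed if every GPU runs 24 hours a day."""
    return gpus * days * 24


def implied_hourly_rate(total_cost: float, gpus: int, days: float) -> float:
    """Cost per GPU-hour implied by a total training budget."""
    return total_cost / gpu_hours(gpus, days)


if __name__ == "__main__":
    hours = gpu_hours(GPUS, DAYS)
    rate = implied_hourly_rate(TOTAL_COST_USD, GPUS, DAYS)
    print(f"GPU-hours: {hours:,.0f}")
    print(f"Implied cost per GPU-hour: ${rate:.2f}")
```

Under these assumptions the run consumes 835,584 GPU-hours, which puts the implied rate at roughly $5.5 per GPU-hour. That is a useful baseline when comparing the hourly GPU prices quoted for the projects later in this report.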

Competition in the AI era has gradually turned into a resource war centered on computing power.

Source: AI is Harming Our Planet: Addressing AI’s Staggering Energy Cost

This raises three questions. The first is whether an AI product has sufficient resources (computing power, bandwidth, and so on) behind it, and in particular a stable supply of them. Such reliability requires computing power that is both sufficient and decentralized. In the traditional market, because of the gap between chip supply and demand, coupled with barriers erected by policy and ideology, chip manufacturers are naturally in an advantageous position and can drive prices up sharply. For example, the price of the NVIDIA H100 rose from $36,000 in April 2023 to $50,000, further increasing costs for AI model training teams.

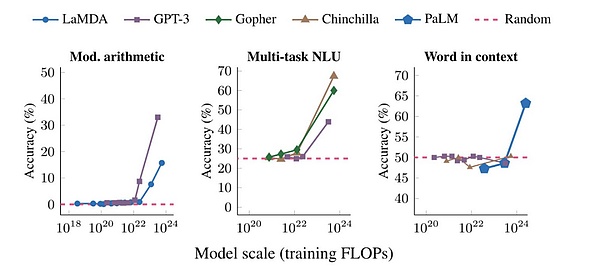

The second question: meeting the resource-side conditions solves an AI project's hard hardware requirements, but model training and iteration also need a large user base (IP) to feed data. Once a model's scale passes a certain threshold, its performance on different tasks shows breakthrough growth.

The third question: it is difficult for small and medium-sized AI startup teams to overtake the incumbents. The monopoly over computing power in the traditional market has also led to a monopoly over AI model solutions. Large AI model makers such as OpenAI and Google DeepMind are further building their own moats, so small and medium-sized AI teams need to pursue more differentiated competition.

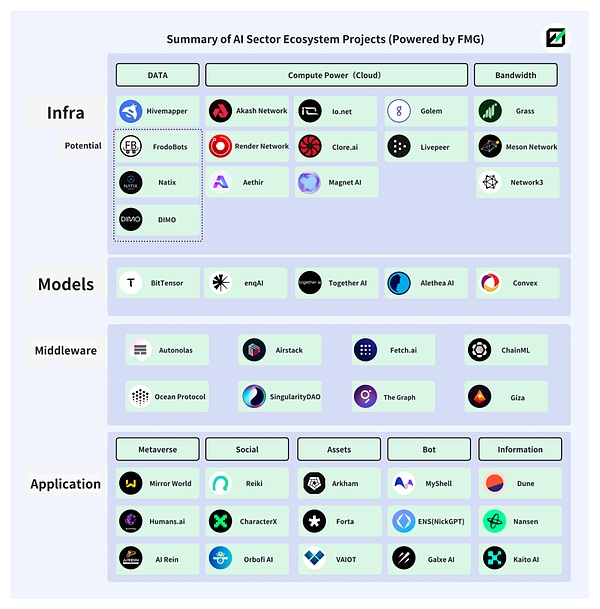

Answers to all three questions can be found in Web3. In fact, AI and Web3 have been combined for a long time, and the resulting ecosystem is fairly prosperous.

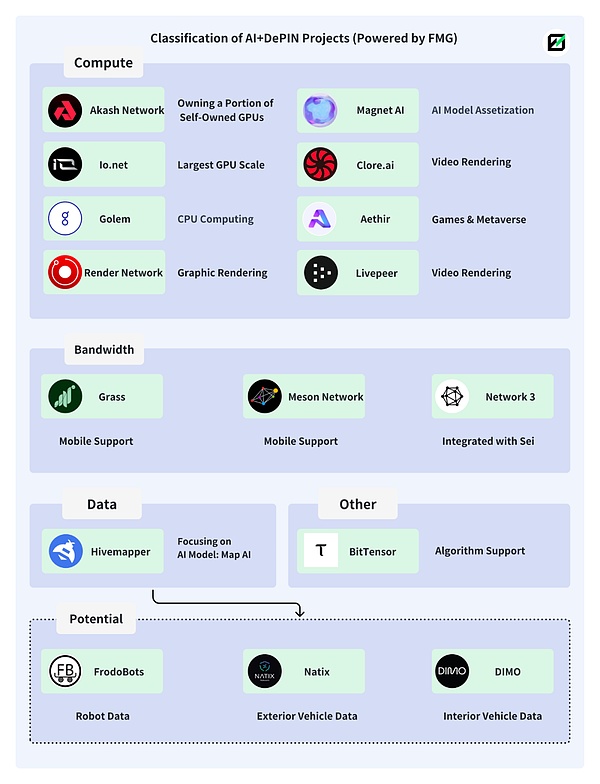

The picture below shows part of the AI+Web3 ecosystem, as compiled by Future Money Group.

AI+DEPIN

1. DEPIN solution

DEPIN is short for decentralized physical infrastructure networks. It is also a set of production relations between people and devices: by combining token economics with hardware (such as computers and car cameras), it brings users and devices together organically while keeping the economic model running in an orderly way.

Compared with the more broadly defined Web3, DEPIN is inherently more closely tied to hardware and traditional enterprises, which gives it a natural advantage in attracting AI teams and capital from outside the crypto market.

The DEPIN ecosystem's pursuit of distributed computing power and its incentives for contributors meet exactly the needs of AI products for computing power and IP.

- DEPIN uses token economics to bring the world's computing power (computing centers and idle personal computing power) onto the network, which reduces the centralization risk of computing power and lowers the cost for AI teams to access it.

- The large and diverse IP base of the DEPIN ecosystem helps AI models obtain data through diverse and objective channels. A sufficient number of data providers also helps improve AI model performance.

- The overlap between the user profiles of DEPIN users and Web3 users can help AI projects that settle in the ecosystem develop AI models with Web3 characteristics and compete through differentiation, something not available in the traditional AI market.

In Web2, AI model data usually comes from public datasets or from the model producers themselves. This is constrained by cultural background and region, so the output of AI models carries a subjective “distortion”. Traditional data collection is also limited by efficiency and cost, which makes it hard to reach a larger model scale (number of parameters, training time, and data quality). For AI models, the larger the scale, the more likely a qualitative change in performance.

Source: Large Language Models’ Emergent Abilities: How They Solve Problems They Were Not Trained to Address?

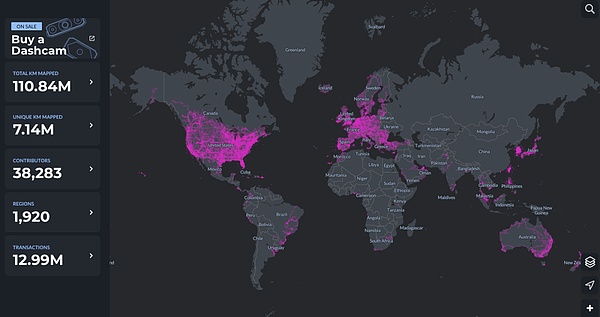

DEPIN happens to have a natural advantage here. Take Hivemapper as an example: it covers 1,920 regions around the world, and nearly 40,000 contributors provide data for Map AI (its map AI model).

The combination of AI and DEPIN also takes the fusion of AI and Web3 to a new level. Current AI projects in Web3 have emerged mostly at the application layer and have hardly shed their direct dependence on Web2 infrastructure: they bring existing AI models that run on traditional computing platforms into Web3 projects, and rarely get involved in building the models themselves.

Web3 has so far sat downstream in the food chain and cannot capture real excess returns. The same is true of distributed computing platforms. Simply pairing AI with computing power cannot unlock the potential of either: in that relationship, computing providers cannot earn excess profits, and the ecosystem structure is too uniform to drive a flywheel through token economics.

However, the concept of AI+DEPIN is breaking this fixed relationship and shifting Web3's attention to the broader field of AI models.

2. Summary of AI+DEPIN project

DEPIN brings together devices (computing power, bandwidth, algorithms, data), users (providers of model training data), and an ecosystem incentive mechanism (token economics).

We can boldly define it as follows: a project that provides AI with complete objective conditions (computing power, bandwidth, data, IP), offers scenarios for AI models (training, inference, fine-tuning), and is equipped with token economics can be defined as AI+DEPIN.

Future Money Group lists the classic paradigms of AI+DEPIN below.

Based on the type of resource provided, we divide projects into computing power, bandwidth, data, and other sectors, and try to sort out the projects in each.

2.1 Computing power

Computing power is the main component of the AI+DEPIN sector and also accounts for the largest number of projects. For these projects, computing power comes mainly from GPUs (graphics processing units), CPUs (central processing units), and TPUs (specialized machine learning chips). TPUs are difficult to manufacture, are made mainly by Google, and are offered only as a cloud rental service, so their market is small. A GPU is a hardware component similar to a CPU but more specialized: compared with an ordinary CPU, it handles complex mathematical operations that run in parallel far more efficiently. GPUs were originally dedicated to graphics rendering in games and animation, but their uses now go far beyond that. As a result, the GPU is the main source of supply in today's computing power market.

This is why many of the AI+DEPIN projects we see specialize in graphics and video rendering or related gaming: it follows from the characteristics of the GPU.

Globally, the computing power behind AI+DEPIN computing products comes from three sources: traditional cloud computing providers, idle personal computing power, and the projects' own computing power. Cloud providers account for the largest share, followed by idle personal computing power. This means such products more often act as computing power brokers. The demand side consists of AI model development teams of all kinds.

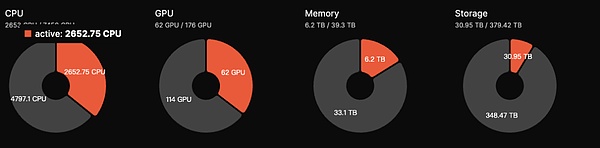

At present, computing power in this category is almost never 100% utilized in practice and sits idle much of the time. For example, Akash Network's utilization is currently about 35%, and the rest of its computing power is idle. IO.NET is in a similar position.

This may be because current demand for AI model training is limited, and it is also why AI+DEPIN can offer cheap computing power. As the AI market expands, this situation should improve.

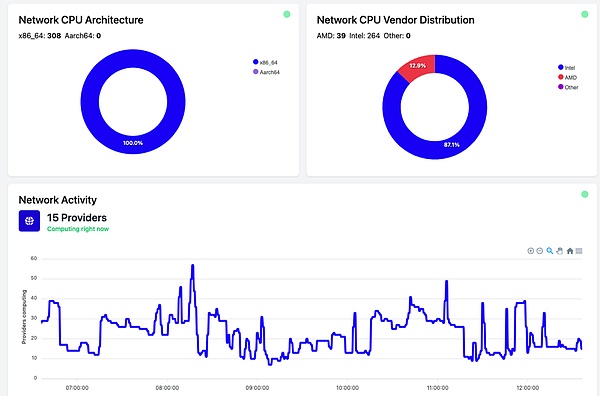

Akash Network: a decentralized peer-to-peer cloud service marketplace

Akash Network is a decentralized peer-to-peer cloud service marketplace, often called the Airbnb of cloud services. It allows users of all sizes to use its services quickly, reliably, and economically.

Like Render, Akash also offers users GPU deployment, leasing, and AI model training.

In August 2023, Akash launched Supercloud, which lets developers set the price they are willing to pay to deploy their AI models, while providers with spare computing power host them. This works much like Airbnb, allowing providers to rent out unused capacity.

Through open bidding, resource providers are encouraged to open up the idle computing resources in their networks. Akash Network thereby uses resources more efficiently and offers more competitive prices to those who need them.
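The bidding idea can be illustrated with a toy reverse auction: the developer states a price ceiling, providers submit bids, and the cheapest bid at or below the ceiling wins. This is a simplified sketch rather than Akash's actual on-chain marketplace logic; the `Bid` type, the provider names, and the prices are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    provider: str          # hypothetical provider name
    price_per_hour: float  # price the provider asks, in USD per GPU-hour


def select_winning_bid(bids: list[Bid], max_price: float) -> Optional[Bid]:
    """Return the cheapest bid at or below the developer's price ceiling."""
    eligible = [b for b in bids if b.price_per_hour <= max_price]
    if not eligible:
        return None  # nobody is willing to serve at this price
    return min(eligible, key=lambda b: b.price_per_hour)


# Illustrative bids only; these are not real market prices.
bids = [
    Bid("provider-a", 1.80),
    Bid("provider-b", 1.20),
    Bid("provider-c", 1.45),
]
winner = select_winning_bid(bids, max_price=1.50)
print(winner)
```

Because providers compete downward on price, idle capacity tends to clear at whatever the cheapest willing provider asks, which is the mechanism behind the "more competitive prices" described above.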

At present, the Akash ecosystem has a total of 176 GPUs, of which 62 are active, an activity rate of 35%, down from 50% in September 2023. Estimated daily revenue is about $5,000. The AKT token can be staked: users who stake tokens to help secure the network earn an annualized yield of about 13.15%.

Akash's metrics are relatively strong within the current AI+DEPIN sector, and at an FDV of $700 million it has considerable room to grow compared with Render and Bittensor.

Akash has also connected to a Bittensor subnet to expand its own room for development. Overall, as one of the few high-quality projects on the AI+DEPIN track, Akash has excellent fundamentals.

IO.NET: the AI+DEPIN project with the most GPUs

IO.NET is a decentralized computing network on the Solana blockchain that supports the development, execution, and scaling of ML (machine learning) applications. It uses what it describes as the world's largest GPU cluster to let machine learning engineers rent and access distributed cloud computing power at a fraction of the usual cost.

According to official data, IO.NET has more than 1 million GPUs on standby. In addition, its partnership with Render has expanded the GPU resources available for deployment.

The IO.NET ecosystem has more GPUs, but almost all of them come from partnerships with cloud computing vendors and from individual nodes, and the idle rate is high. Taking the most numerous model, the RTX A6000, as an example, only 11% (927) of its 8,426 GPUs are in use, and many other GPU models appear to be largely unused. A major advantage of IO.NET at present, however, is its low pricing: compared with Akash's GPU cost of about $1.5 per hour, the lowest price on IO.NET is $0.1 to $1 per hour.
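The utilization rates quoted for Akash and IO.NET follow directly from the active and total GPU counts given in this report. A minimal check, using only those figures (the function name is illustrative):

```python
def utilization(active: int, total: int) -> float:
    """Share of GPUs currently in use, as a fraction between 0 and 1."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


# Figures quoted in this report.
akash = utilization(active=62, total=176)          # Akash: 62 of 176 GPUs active
ionet_a6000 = utilization(active=927, total=8426)  # IO.NET RTX A6000 pool

print(f"Akash utilization: {akash:.1%}")
print(f"IO.NET RTX A6000 utilization: {ionet_a6000:.1%}")
```

Both results round to the percentages stated in the text (about 35% and about 11%), so the reported idle rates are consistent with the underlying counts.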


Going forward, IO.NET is also considering letting GPU providers in the IO ecosystem improve their chances of being selected by staking native assets: the more assets staked, the greater the chance of selection. Likewise, AI engineers who stake native assets will be able to use high-performance GPUs.
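One simple way to realize "more stake, greater chance of selection" is to pick providers with probability proportional to their stake. The sketch below assumes exactly that proportional rule, which the text does not specify; the provider names and stake amounts are hypothetical.

```python
import random


def selection_probabilities(stakes: dict[str, float]) -> dict[str, float]:
    """Probability of each provider being picked, proportional to its stake."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    return {name: stake / total for name, stake in stakes.items()}


def pick_provider(stakes: dict[str, float], rng: random.Random) -> str:
    """Draw one provider at random, weighted by stake."""
    names = list(stakes)
    weights = [stakes[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Hypothetical stakes, in units of the native token.
stakes = {"gpu-provider-a": 100.0, "gpu-provider-b": 300.0}
probs = selection_probabilities(stakes)
print(probs)
print(pick_provider(stakes, random.Random(42)))
```

With these numbers, the provider holding three times the stake is selected three times as often on average. Other weighting rules (for example, capped or square-root weighting) would soften that advantage.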

In terms of the number of GPUs connected, IO.NET is the largest of the 10 projects listed in this article. Idle rate aside, it also ranks first in the number of GPUs in use. As for token economics, IO.NET's native protocol token, IO, is scheduled to launch in the first quarter of 2024 with a maximum supply of 22,300,000. Users pay a 5% fee when using the network, which will be used to burn IO tokens or to fund incentives for new users on both the supply and demand sides. The token model is clearly designed to support the price, so although IO.NET has not yet issued its token, market interest is already high.

Golem: a computing power market based mainly on CPUs

Golem is a decentralized computing power marketplace that lets anyone share and aggregate computing resources by creating a network of shared resources. It provides users with a venue for renting computing power.

The Golem market has three parties: computing power suppliers, computing power requesters, and software developers. A requester submits a computing task, and the Golem network assigns it to a suitable supplier (one that provides the needed RAM, disk space, CPU cores, and so on). Once the task is complete, the two sides settle payment in tokens.
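The allocation step described above can be sketched as a filter-then-choose procedure: keep only suppliers whose CPU cores, RAM, and disk meet the task's requirements, then pick one of them. Choosing the cheapest capable supplier is an assumption made here for illustration and is not taken from Golem's documentation; all names and figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Supplier:
    name: str
    cpu_cores: int
    ram_gb: int
    disk_gb: int
    price_per_hour: float  # in tokens, hypothetical


@dataclass
class Task:
    cpu_cores: int
    ram_gb: int
    disk_gb: int


def match_supplier(task: Task, suppliers: list[Supplier]) -> Optional[Supplier]:
    """Pick the cheapest supplier that meets every resource requirement."""
    capable = [
        s for s in suppliers
        if s.cpu_cores >= task.cpu_cores
        and s.ram_gb >= task.ram_gb
        and s.disk_gb >= task.disk_gb
    ]
    return min(capable, key=lambda s: s.price_per_hour, default=None)


suppliers = [
    Supplier("node-small", cpu_cores=4, ram_gb=8, disk_gb=100, price_per_hour=0.2),
    Supplier("node-large", cpu_cores=32, ram_gb=128, disk_gb=2000, price_per_hour=1.5),
    Supplier("node-mid", cpu_cores=16, ram_gb=64, disk_gb=500, price_per_hour=0.8),
]
chosen = match_supplier(Task(cpu_cores=8, ram_gb=32, disk_gb=200), suppliers)
print(chosen)
```

Here `node-small` is filtered out for lacking resources, and `node-mid` wins over `node-large` on price.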

Golem mainly aggregates CPU computing power. Its cost is lower than that of GPUs (an Intel i9-14900K costs about $700, while an A100 GPU costs $12,000 to $25,000), but CPUs cannot perform highly parallel operations and consume more energy. CPU-based computing power rental may therefore be slightly weaker than GPU-based projects.

Magnet AI: turning AI models into assets

Magnet AI integrates GPU computing power providers to offer model training services to AI model developers. Unlike other AI+DEPIN products, Magnet AI allows AI teams to issue ERC-20 tokens based on their own models. Users can earn token airdrops and additional rewards from different models by interacting with them.

In Q2 2024, Magnet AI will launch on Polygon zkEVM and Arbitrum.

It is somewhat similar to IO.NET in that it mainly aggregates GPU computing power and provides model training services to AI teams.

The difference is that IO.NET focuses on aggregating GPU resources, encouraging GPU clusters, enterprises, and individuals to contribute GPUs, and is driven by computing power.

Magnet AI appears more focused on AI models. Because AI model tokens exist, it can attract and retain users around tokens and airdrops.

In short, Magnet is equivalent to building a marketplace on top of GPUs: any AI developer or model deployer can issue ERC-20 tokens on it, and users can earn different tokens or actively hold them.

Render: a specialist in graphics-rendering AI models

Render Network is a provider of decentralized GPU-based rendering solutions. It aims to connect creators with idle GPU resources through blockchain technology, removing hardware constraints and reducing time and cost, while also providing digital rights management to further the development of the metaverse.

According to Render's white paper, artists, engineers, and developers can build a range of AI applications on Render, such as AI-assisted 3D content generation, AI-accelerated holographic rendering, and AI model training that uses Render's 3D scene graph data.

Render provides AI developers with the Render Network SDK, which lets them use Render's distributed GPUs to run AI computing tasks ranging from NeRF (neural radiance fields) and light field rendering to generative AI.

According to Global Market Insights, the global 3D rendering market is expected to reach $6 billion. Against that, Render's FDV of $2.2 billion still has room to develop.

Specific GPU figures for Render are not currently available. However, OTOY, the company behind Render, has shown ties with Apple on several occasions, and its business is broad: OTOY's flagship renderer, OctaneRender, supports industries across VFX, games, motion design, architectural visualization, and simulation, and includes native support for Unity3D and Unreal Engine.

Google and Microsoft have also joined the RNDR network. Render handled nearly 250,000 rendering requests in 2021, and artists in its ecosystem generated about $5 billion in sales through NFTs.

Render's reference valuation should therefore be the potential of the rendering market (about $30 billion). Together with its BME (Burn-and-Mint Equilibrium) economic model, Render still has some room to rise, whether measured by token price or by FDV.

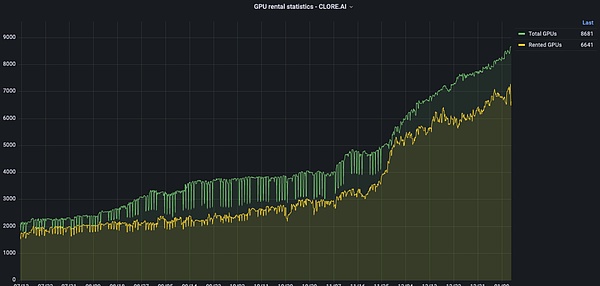

CLORE.AI: Video rendering

CLORE.AI is a PoW-based platform that provides GPU computing power rental. Users can rent out their own GPUs for tasks such as AI training, video rendering, and cryptocurrency mining, and others can obtain that computing power at low prices.

Its business covers AI training, film rendering, VPN, cryptocurrency mining, and more. When there is demand for a specific computing service, the network assigns and completes that task; when there is none, the network finds the cryptocurrency with the highest mining yield at that moment and mines it.
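The fallback behavior described above (serve rental demand first, otherwise mine the most profitable coin) amounts to a small decision rule. The sketch below is an illustration of that rule only; the function name, job labels, coin names, and yields are hypothetical and do not come from CLORE.AI.

```python
def next_job(rental_requests: list[str], mining_yields: dict[str, float]) -> str:
    """Decide what an idle GPU should do next.

    rental_requests: pending rental jobs, oldest first.
    mining_yields:   estimated yield per coin (USD per day, hypothetical).
    """
    if rental_requests:
        # Paying customers take priority over mining.
        return f"rent:{rental_requests[0]}"
    if not mining_yields:
        return "idle"
    # No rental demand: mine whichever coin currently yields the most.
    best_coin = max(mining_yields, key=mining_yields.get)
    return f"mine:{best_coin}"


print(next_job(["ai-training-job-1"], {"coin-a": 1.2, "coin-b": 1.9}))
print(next_job([], {"coin-a": 1.2, "coin-b": 1.9}))
```

This keeps hardware earning something at all times, which is what distinguishes this model from pure rental marketplaces whose GPUs sit idle when demand is low.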

Over the past six months, the number of GPUs has risen from 2,000 to about 9,000, so by GPUs integrated CLORE.AI exceeds Akash. Yet its secondary-market FDV is only about 20% of Akash's.

As for the token model, CLORE uses PoW mining with no pre-mine and no ICO. Of each block, 50% goes to miners, 40% to renters, and 10% to the team.

The total token supply is 1.3 billion. Mining began in June 2022, and the supply will be essentially fully circulating by 2042. Current circulation is about 220 million, and circulation at the end of 2023 is about 250 million, roughly 20% of the total supply. The current FDV is $31 million. In theory, CLORE.AI is seriously undervalued, but under its token economics miners receive 50% of emissions and the share that gets mined and sold is too high, so the token price faces strong resistance to rising.
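The relationship between FDV, supply, and circulating market value can be checked with a few lines of arithmetic. The sketch uses only the figures quoted above and the standard definition FDV = price × total supply; the variable names are illustrative.

```python
# Figures quoted in the text.
TOTAL_SUPPLY = 1_300_000_000   # total CLORE tokens
FDV_USD = 31_000_000           # fully diluted valuation
CIRCULATING_NOW = 220_000_000  # current circulating supply
CIRCULATING_END_2023 = 250_000_000

# FDV = price * total supply, so the implied token price is:
implied_price = FDV_USD / TOTAL_SUPPLY

# Market value of the tokens actually in circulation at that price.
circulating_market_cap = implied_price * CIRCULATING_NOW

# Share of the total supply in circulation at the end of 2023.
circulating_share = CIRCULATING_END_2023 / TOTAL_SUPPLY

print(f"Implied price: ${implied_price:.4f}")
print(f"Circulating market cap: ${circulating_market_cap:,.0f}")
print(f"Circulating share at end of 2023: {circulating_share:.1%}")
```

The end-of-2023 share works out to a little over 19%, in line with the "roughly 20%" stated above. The large gap between circulating supply and total supply is also why continued miner emissions weigh on the price.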

Livepeer: video rendering and inference

Livepeer is a decentralized video protocol built on Ethereum that rewards participants for processing video content securely at reasonable prices.

According to official figures, Livepeer uses thousands of GPUs to handle millions of video transcoding tasks every week.

Livepeer may adopt a “mainnet” + “subnet” approach that lets node operators create subnets and run such tasks, with payments settled on the Livepeer mainnet. One example is an AI video subnet for training AI models dedicated to video rendering.

Livepeer has since expanded its AI-related work from simple model training to inference and fine-tuning.

Aethir: focused on cloud gaming and AI

Aethir is a cloud gaming platform and a decentralized cloud infrastructure (DCI) built for gaming and artificial intelligence companies. It carries heavy GPU computing loads on behalf of players, so that gamers get an ultra-low-latency experience anywhere and on any device.

Aethir also provides deployment services covering GPU, CPU, disk, and other resources. On September 27, 2023, Aethir officially began offering commercial cloud gaming and AI computing power services to customers worldwide, supporting their platforms and AI models with integrated decentralized computing power.

By moving the computing demands of rendering to the cloud, cloud gaming removes the constraints of the end device's hardware and operating system, which significantly expands the potential player base.

2.2 Bandwidth

Bandwidth is one of the resources DEPIN provides to AI. In 2021 the global bandwidth market exceeded 50 billion US dollars, and it is forecast to exceed 100 billion in 2027.

As AI models grow in number and complexity, training usually relies on several parallel computing strategies, such as data parallelism, pipeline parallelism, and tensor parallelism. Under these strategies, collective communication between computing devices becomes increasingly important. Network bandwidth therefore plays a prominent role when building large-scale training clusters for large AI models.
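To give a feel for why bandwidth matters, the sketch below estimates the communication cost of one gradient synchronization in data-parallel training. It assumes ring all-reduce, a common collective in which each device sends about 2(N-1)/N times the payload size. The 2-byte (fp16) gradient size and the link speeds are illustrative assumptions that do not come from this report, and the estimate ignores latency and any overlap with computation.

```python
def ring_allreduce_bytes_per_device(payload_bytes: float, devices: int) -> float:
    """Bytes each device sends during one ring all-reduce of the payload."""
    if devices < 2:
        return 0.0  # nothing to synchronize with a single device
    return 2 * (devices - 1) / devices * payload_bytes


def min_sync_seconds(payload_bytes: float, devices: int,
                     bandwidth_bytes_per_s: float) -> float:
    """Bandwidth-only lower bound on the time for one synchronization."""
    sent = ring_allreduce_bytes_per_device(payload_bytes, devices)
    return sent / bandwidth_bytes_per_s


# Assumption: 175 billion parameters with 2-byte (fp16) gradients.
gradient_bytes = 175e9 * 2
devices = 1024

# Hypothetical per-device link speeds, in bytes per second.
for label, bandwidth in [("10 Gbit/s", 10e9 / 8), ("200 Gbit/s", 200e9 / 8)]:
    seconds = min_sync_seconds(gradient_bytes, devices, bandwidth)
    print(f"{label}: at least {seconds:,.0f} s per full gradient sync")
```

Under these assumptions the slower link needs on the order of minutes per synchronization and the faster one tens of seconds, which is why training clusters built from geographically scattered nodes are constrained by bandwidth before they are constrained by raw computing power.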

More importantly, stable and reliable bandwidth ensures that nodes can respond to one another and avoids single-point control (Falcon, for example, adopts a low-latency, high-bandwidth relay network model to strike a balance between latency and bandwidth), which ultimately safeguards the trustworthiness and censorship resistance of the whole network.

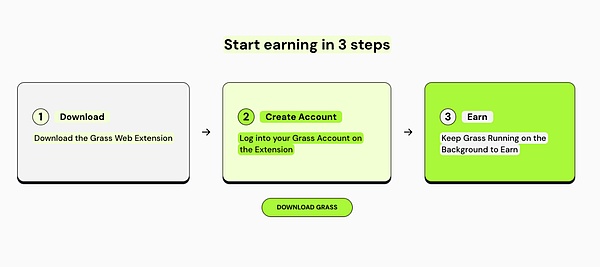

Grass: a bandwidth mining product for mobile

Grass is the flagship product of Wynd Network. Wynd focuses on open web data and raised $1 million in 2023. Grass lets users earn passive income by selling their unused network resources through their internet connection.

Users can sell internet bandwidth on Grass, providing bandwidth to AI development teams that need it for AI model training, and earn token rewards in return.

Grass is about to launch a mobile version. Because a mobile device and a PC have different IP addresses, Grass users will be able to supply more IP addresses to the platform, and Grass will collect more IP addresses with which to provide better data efficiency for AI model training.

Grass currently offers two ways to supply an IP address: a browser extension downloaded on a PC and a mobile app. (The PC and the mobile device need to be on different networks.)

As of November 29, 2023, the Grass platform had been downloaded 103,000 times and had 1,450,000 unique IP addresses.

AI demand differs between mobile and PC, so the categories of AI model training they suit also differ.

For example, mobile devices hold a great deal of data on image optimization, face recognition, real-time translation, voice assistants, and device performance optimization, which is hard for PCs to provide.

Grass currently holds a relatively early lead in mobile AI model training. Given the huge potential of the mobile market, its prospects deserve attention.

However, Grass has not yet disclosed much useful information about its AI models. It may operate mainly as a token mining product in its early stage.

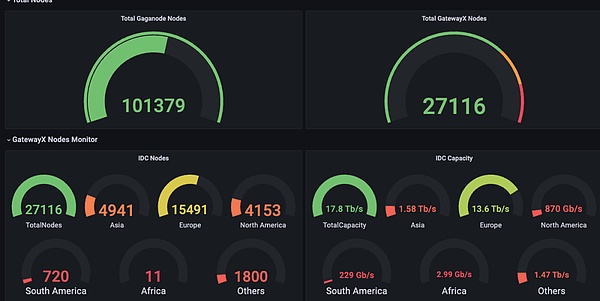

Meson Network: Layer 2, compatible with mobile devices

Meson Network is a next-generation storage acceleration network built on a blockchain Layer 2. It aggregates idle servers through mining, schedules bandwidth resources, and serves the file and streaming media acceleration market, including traditional websites, video, live streaming, and blockchain storage solutions.

Meson Network can be understood as a bandwidth resource pool whose two sides are supply and demand: the former contributes bandwidth and the latter uses it.

In Meson's product structure, two products (GatewayX and Gaganode) receive the bandwidth contributed by nodes around the world, and one product (IPCOLA) monetizes the aggregated bandwidth.

GatewayX: mainly integrates idle commercial bandwidth, primarily targeting IDC centers.

Meson's data dashboard shows more than 20,000 connected IDC nodes worldwide, forming a data transmission capacity of 12.5 Tib/s.

Gaganode: mainly integrates idle bandwidth from residential and personal devices and provides edge computing assistance.

IPCOLA: Meson's monetization channel, handling tasks such as IP and bandwidth allocation.

Meson has disclosed that its revenue over half a year exceeded one million US dollars. According to its official website, Meson has 27,116 IDC nodes with an IDC capacity of 17.7 TB/s.

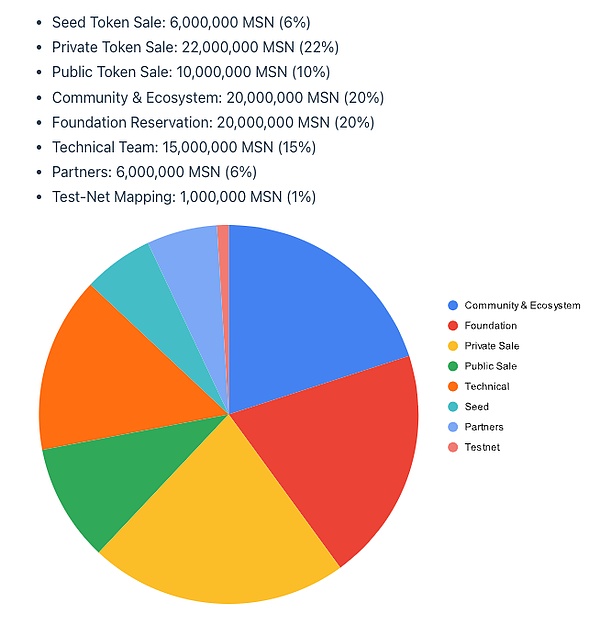

Meson is expected to issue its token in March or April 2024, and its token economics has already been announced.

Token name: MSN. The initial supply is 100 million tokens; the mining inflation rate is 5% in the first year and decreases by 0.5% each year.
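The MSN emission schedule can be projected under a stated reading of the rule. The sketch assumes that the rate applies to the supply at the start of each year, that "decreases by 0.5%" means 0.5 percentage points per year (5%, 4.5%, 4%, and so on), and that the rate never goes below zero. These are assumptions for illustration; Meson's official token documentation may define the schedule differently.

```python
def supply_schedule(initial_supply: float, first_year_rate: float,
                    yearly_step: float, years: int) -> list[tuple[int, float, float]]:
    """Return (year, inflation rate, end-of-year supply) for each year.

    Assumes the rate applies to start-of-year supply and falls by
    `yearly_step` (in absolute terms) each year, floored at zero.
    """
    rows = []
    supply = initial_supply
    rate = first_year_rate
    for year in range(1, years + 1):
        supply += supply * rate  # tokens minted through mining this year
        rows.append((year, rate, supply))
        rate = max(rate - yearly_step, 0.0)
    return rows


for year, rate, supply in supply_schedule(100_000_000, 0.05, 0.005, years=5):
    print(f"Year {year}: rate {rate:.1%}, supply {supply:,.0f}")
```

Under this reading the supply reaches 105 million after the first year and about 109.7 million after the second, with inflation tapering to zero after ten years.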

Network3: integrated with the Sei network

Network3 is an AI company that has built a dedicated AI Layer 2 and integrated it with Sei. Through AI model algorithm optimization and compression, edge computing, and privacy computing, it serves AI developers worldwide, helping them train and validate models quickly, conveniently, and efficiently.

According to its official website, Network3 currently has more than 58,000 active nodes providing 2 PB of bandwidth services, and it cooperates with 10 blockchain ecosystems, including Alchemy Pay, EthSign, and IoTeX.

2.3 Data

Unlike computing power and bandwidth, data supply is currently a relatively niche and highly specialized market. The demand side is usually the project's own AI model development team or teams in related categories. Hivemapper is one example.

Training your own map model on your own data is not a logically difficult paradigm, so we can broaden our view to DEPIN projects similar to Hivemapper, such as DIMO, Natix, and FrodoBots.

Hivemapper: focused on empowering its own Map AI product

Hivemapper is one of the top DEPIN projects on Solana and aims to build a decentralized “Google Maps”. Users earn HONEY tokens by buying the dashcam sold by Hivemapper, using it, and sharing real-time imagery with Hivemapper.

Future Money Group has covered Hivemapper in detail in “FMG Research Report: Up 19x in Thirty Days, Understanding the Automotive DEPIN Sector Represented by Hivemapper”, so we do not expand on it here. Hivemapper is included in the AI+DEPIN sector because it has launched Map AI, an AI map-making engine that generates high-quality map data from the data collected by dashcams.

Map AI introduces a new role, the AI trainer, which covers both the earlier dashcam data contributors and Map AI model trainers.

Hivemapper does not deliberately require specialized skills of AI trainers. Instead, it uses low-barrier tasks such as remote tasks and guessing geographic locations, so that more IP addresses can take part. The richer a DEPIN project's IP resources, the more efficiently its AI obtains data. Users who take part in AI training also receive HONEY token rewards.

AI's use cases within Hivemapper are relatively niche, and Hivemapper does not support third-party model training: the purpose of Map AI is to improve its own map product. The investment logic for Hivemapper therefore does not change.

POTENTIAL

DIMO: collecting in-vehicle data

DIMO is an automotive IoT platform built on Polygon that enables drivers to collect and share their vehicle data. The recorded data includes distance driven, driving speed, location tracking, tire pressure, and battery and engine health.

By analyzing vehicle data, the DIMO platform can predict when maintenance is needed and remind users in time. Drivers not only gain insight into their own vehicles but can also contribute data to the DIMO ecosystem and receive DIMO tokens as a reward. Data consumers can extract data from the protocol to understand how components such as batteries, autonomous driving systems, and controls are performing.

Natix: privacy-empowered map data collection

Natix is a decentralized network built on patented AI privacy technology. Using those patents, it aims to combine cameras worldwide (on smartphones, drones, and cars) into a camera network that collects data in a privacy-compliant way and populates a decentralized dynamic map (DDMap) with content.

Users who contribute data receive tokens and NFTs as incentives.

FrodoBots: a decentralized network application with robots as the carrier

FrodoBots is a DEPIN game built around mobile robots, which collect image data through cameras, and it has a social dimension.

Users take part in the game by buying a robot and interact with players around the world. Meanwhile, the robot's built-in camera collects and aggregates road and map data.

The three projects above share two elements, data collection and IP. Although they have not yet trained AI models, they all provide the necessary conditions for introducing them. These projects, Hivemapper included, all collect data through cameras and assemble it into complete maps, so the AI models that suit them are also limited to map building. Empowerment by AI models will help these projects build higher moats.

Note that camera-based collection often runs into privacy concerns on two sides: how collecting external imagery bears on the portrait rights of passers-by, and how much users value their own privacy. Natix, for example, uses AI for privacy protection.

2.4 Algorithm

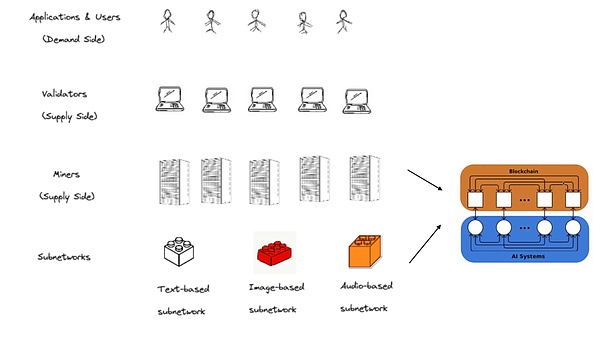

Computing power, bandwidth, and data distinguish projects on the resource side, whereas the algorithm sector focuses on AI models. Take Bittensor as an example: it contributes neither data nor computing power directly. Instead, it uses a blockchain network and incentive mechanisms to bring different algorithms together into a model market built on knowledge sharing.

Like OpenAI, Bittensor aims for strong inference performance, but it seeks to match the traditional model giants while keeping its models decentralized.

The algorithm track is somewhat ahead of its time, and similar projects are rare. As AI models emerge, especially AI models built on Web3, competition between models will become the norm.

Competition between models will also raise the importance of the downstream stages of the AI model industry: inference and fine-tuning. Training is only the upstream stage. A model must first be trained to acquire initial intelligence; on that basis comes more careful inference and adjustment (which can be understood as optimization), and finally deployment at the edge. These processes demand a more complex ecosystem architecture and more computing power, which also means their development potential is huge.

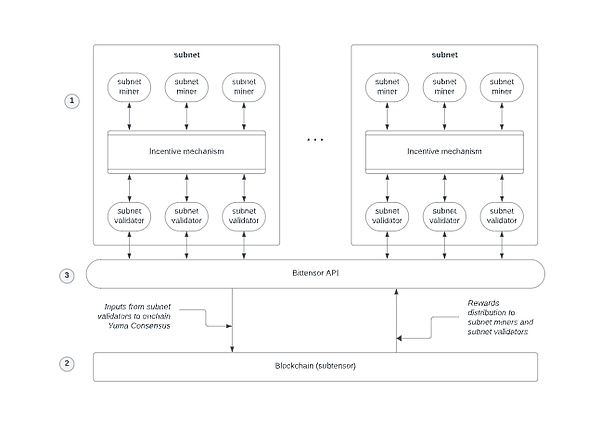

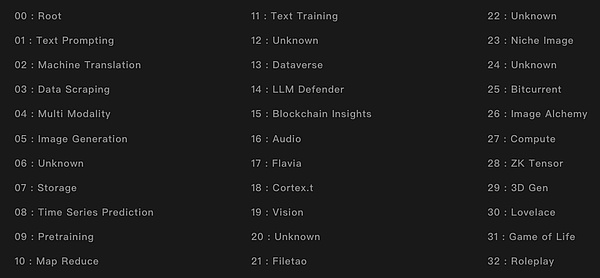

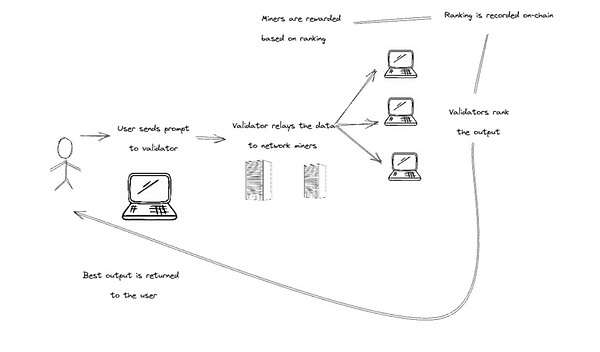

Bittensor: An Oracle for AI Models

Bittensor is a decentralized machine learning ecosystem with a main network + subnet architecture similar to Polkadot’s.

Working logic: a subnet passes its activity information to the Bittensor API (which plays a role similar to an oracle); the API then passes the useful information to the main network, and the main network distributes rewards.

Bittensor’s 32 subnets

Roles within the Bittensor ecosystem:

Miners: Providers of AI algorithms and models from around the world. They host AI models and serve them to the Bittensor network; different types of models form different subnets.

Validators: The evaluators of the Bittensor network. They assess the quality and effectiveness of AI models and rank them by performance on specific tasks, helping consumers find the best solution.

Users: The end users of the AI models that Bittensor provides. They can be individuals or developers looking for AI models to build applications on.

Nominators: Delegate their tokens to specific validators to show support, and can switch their delegation to different validators.

An open AI supply and demand chain: some participants provide models, some evaluate them, and some use the results of the best models.

Unlike Akash and Render, which resemble “computing power brokers”, Bittensor is more like a “labor market” that uses existing models to absorb more data and make the models more reasonable. Miners and validators act like the “contractor” and the “supervisor”: the user raises a question, the miners output answers, the validators evaluate the quality of the answers, and the result is finally returned to the user.
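The question, answer, and evaluation loop described above can be sketched as a toy simulation. This is only an illustration under stated assumptions: the `Miner` class, the `validator_score` word-overlap metric, and the `answer_query` helper are hypothetical names invented here, not part of any Bittensor API, and a real evaluator would use a task-specific metric.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Miner:
    """Hypothetical miner: hosts a 'model', here just a function from question to answer."""
    name: str
    model: Callable[[str], str]


def validator_score(question: str, answer: str) -> float:
    """Hypothetical quality metric: share of question words that appear in the answer."""
    q_words = set(question.lower().split())
    a_words = set(answer.lower().split())
    return len(q_words & a_words) / len(q_words) if q_words else 0.0


def answer_query(question: str, miners: List[Miner]) -> Tuple[str, str, Dict[str, float]]:
    """User asks, every miner answers, the evaluator scores, the top answer is returned."""
    answers = {m.name: m.model(question) for m in miners}
    scores = {name: validator_score(question, ans) for name, ans in answers.items()}
    best = max(scores, key=scores.get)
    return best, answers[best], scores


miners = [
    Miner("miner_a", lambda q: "no idea"),
    Miner("miner_b", lambda q: "depin means decentralized physical infrastructure"),
]
best, answer, scores = answer_query("what does depin mean", miners)
print(best, scores)
```

The design point is the separation of roles: the party producing answers never scores its own output, which is what the “contractor” and “supervisor” analogy describes.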

Bittensor’s token is TAO. TAO’s market value is currently second only to RNDR, but because of its 4-year long-term release mechanism, its ratio of market value to fully diluted value is the lowest among these projects. This means that TAO’s circulating supply is relatively low while its unit price is high. On this basis, TAO’s actual value appears to be undervalued.
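To make the ratio concrete, here is a minimal sketch of how market value relates to fully diluted value. The price and supply figures are purely hypothetical placeholders chosen for round arithmetic; they are not TAO data.

```python
def market_cap(price: float, circulating_supply: float) -> float:
    """Value of the tokens that are currently in circulation."""
    return price * circulating_supply


def fully_diluted_value(price: float, max_supply: float) -> float:
    """Value if every token that will ever exist were already circulating."""
    return price * max_supply


# Hypothetical numbers, for illustration only.
price = 100.0
circulating_supply = 5_000_000
max_supply = 20_000_000

mc = market_cap(price, circulating_supply)
fdv = fully_diluted_value(price, max_supply)
ratio = mc / fdv  # the price cancels out: this equals circulating_supply / max_supply

print(f"market cap = {mc:,.0f}, FDV = {fdv:,.0f}, ratio = {ratio:.2f}")
```

Because the price cancels, a low ratio says only that a small share of the total supply has been released so far, which is the sense in which a slow release schedule produces a low ratio.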

At present it is difficult to find an appropriate valuation standard. Starting from the architecture, with Polkadot (about $12 billion) as the reference, TAO has nearly 8 times room to rise.

Starting from the “oracle” attribute, with Chainlink ($14 billion) as the reference, TAO has nearly 9 times room to rise.

Starting from business similarity, with OpenAI (about $30 billion, following Microsoft’s investment) as the reference, TAO’s hard ceiling may be about 20 times.
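The three comparisons above can be cross-checked with simple arithmetic: dividing each reference value by its stated multiple gives the TAO valuation that the comparison implies. The sketch below uses only the figures quoted in the text; the dictionary labels and the `implied_valuation` helper are illustrative names, and the results are back-of-the-envelope values, not market data.

```python
# (reference value in USD, stated upside multiple), taken from the text above
references = {
    "Polkadot": (12e9, 8),
    "Chainlink": (14e9, 9),
    "OpenAI": (30e9, 20),
}


def implied_valuation(reference_value: float, multiple: float) -> float:
    """TAO valuation implied by 'reference is N times larger than TAO'."""
    return reference_value / multiple


implied = {name: implied_valuation(value, mult) for name, (value, mult) in references.items()}

for name, value in implied.items():
    print(f"{name}: implied TAO valuation of about ${value / 1e9:.2f}B")
```

All three comparisons imply roughly the same baseline of about $1.5 billion, so the multiples are consistent with one another and differ only in the reference object chosen.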

Conclusion

Overall, AI+DEPIN has driven a paradigm shift for the AI track in the Web3 context, letting the market break out of the fixed mindset of “What can AI do in Web3?”

If Nvidia CEO Jensen Huang described the release of large models as AI’s “iPhone moment”, then the combination of AI and DEPIN means that Web3 has truly reached its own “iPhone moment”.

DEPIN, as the most easily accepted and most mature real-world use case, is making Web3 more acceptable.

Because the IP nodes of AI and DEPIN projects partly overlap, combining the two comes naturally, and the combination is also helping the industry produce models and AI products that are native to Web3. This will benefit the overall development of the Web3 industry and open up new tracks, such as inference and fine-tuning of AI models and the development of mobile AI models.

An interesting point is that the AI+DEPIN products listed in this article seem to be retracing the development path of public chains. In the previous cycle, new public chains emerged one after another, using their TPS and governance approaches to attract developers.

Today’s AI+DEPIN products are doing the same, relying on their own advantages in computing power, bandwidth, data, and IP to attract AI model developers. As a result, AI+DEPIN products currently tend toward homogeneous competition.

The key is not the amount of computing power (although that is a very important prerequisite) but how that computing power is used. The AI+DEPIN track is still in its early stage of “wild growth”, so there is reason to look forward to its future landscape and product forms.
