Author: Vitalik, founder of Ethereum; compiled by Deng Tong, Bitchain Vision

Note: This article is the fifth part of a series recently published by Vitalik, founder of Ethereum: “Possible futures of the Ethereum protocol, part 5: The Purge”. For the fourth part, see “Vitalik: The Verge of Ethereum”; for the third, “Vitalik: Key objectives of the Ethereum The Scourge phase”; for the second, “Vitalik: How should the Ethereum protocol develop in the Surge stage”; and for the first, “What else can be improved on Ethereum PoS”. The following is the full text of the fifth part:

One of the challenges facing Ethereum is that, by default, the bloat and complexity of any blockchain protocol increase over time. This happens in two ways:

- Historical data: Any transaction made and any account created at any point in history needs to be stored permanently by all clients and downloaded by any new client making a full sync to the network. This causes client load and sync time to keep increasing over time, even if the chain’s capacity remains the same.

- Protocol features: Adding a new feature is much easier than removing an old one, so code complexity increases over time.

For Ethereum to sustain itself over the long term, we need strong counter-pressure against both of these trends, reducing complexity and bloat over time. But at the same time, we need to preserve one of the key properties of blockchains: permanence. You can put an NFT, a love note in transaction calldata, or a smart contract containing a million dollars on chain, go into a cave for ten years, and come out to find it still there waiting for you to read and interact with. For dapps to feel comfortable going fully decentralized and removing their upgrade keys, they need to be confident that their dependencies, especially L1 itself, will not upgrade in a way that breaks them.

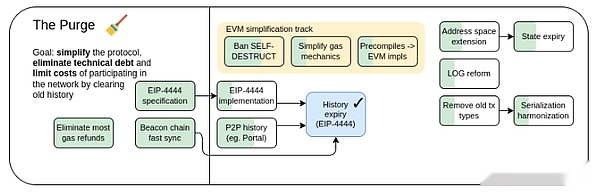

The Purge, 2023 roadmap.

Balancing these two needs, and minimizing or reversing bloat, complexity and decay while preserving continuity, is absolutely possible if we put our minds to it. Living organisms can do it: while most age over time, a lucky few do not. Even social systems can have extremely long lifespans. On a few occasions, Ethereum has already shown successes: proof of work is gone, the SELFDESTRUCT opcode is mostly gone, and beacon chain nodes already store old data for only up to six months. Figuring out this path for Ethereum in a more generalized way, and moving toward an eventual outcome that is stable for the long term, is the ultimate challenge for Ethereum’s long-term scalability, technical sustainability and even security.

The Purge: Main objectives

Reduce client storage requirements by reducing or eliminating the need for every node to permanently store all history, and perhaps eventually even state

Reduce protocol complexity by eliminating unwanted features

History expiry

What problems does it solve?

As of this writing, a fully synced Ethereum node requires roughly 1.1 TB of disk space for the execution client, plus a few hundred more GB for the consensus client. The large majority of this is history: data about historical blocks, transactions and receipts, most of it many years old. This means that the size of a node keeps growing by hundreds of GB per year even if the gas limit does not increase at all.

What is it, and how does it work?

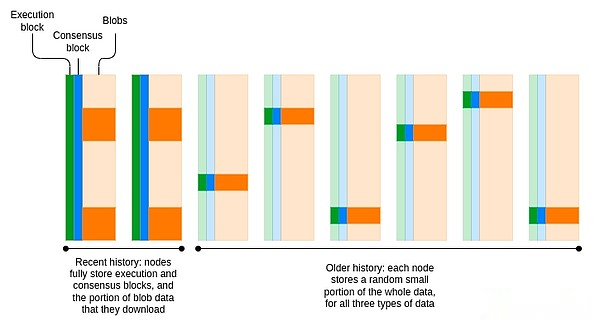

A key simplifying feature of the history storage problem is that, because each block points to the previous block via a hash link (and other structures), having consensus on the present is enough to have consensus on history. As long as the network agrees on the latest block, any historical block, transaction or piece of state (account balance, nonce, code, storage) can be provided by any single participant along with a Merkle proof, and the proof lets anyone else verify its correctness. While consensus is an N/2-of-N trust model, history is a 1-of-N trust model.
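The 1-of-N property can be illustrated with a minimal sketch of Merkle proof checking. This is a simplification under stated assumptions: it uses a plain binary tree over SHA-256, whereas Ethereum's actual structures (Merkle Patricia trees, SSZ trees, keccak256) differ, and the helper names `build_proof` and `verify_proof` are made up for this example.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    """SHA-256 stands in for the real hash function (an assumption)."""
    return hashlib.sha256(data).digest()


def build_proof(leaves: List[bytes], index: int) -> Tuple[bytes, List[bytes]]:
    """Run by the single honest data provider: returns (root, sibling path)."""
    layer = [h(leaf) for leaf in leaves]
    proof = []
    while len(layer) > 1:
        if len(layer) % 2:               # pad odd layers by repeating the last node
            layer = layer + [layer[-1]]
        proof.append(layer[index ^ 1])   # sibling of the current node
        layer = [h(layer[i] + layer[i + 1]) for i in range(0, len(layer), 2)]
        index //= 2
    return layer[0], proof


def verify_proof(root: bytes, leaf: bytes, index: int, proof: List[bytes]) -> bool:
    """Run by anyone who only knows the agreed-upon root."""
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


history = [b"block-0", b"block-1", b"block-2", b"block-3", b"block-4"]
root, proof = build_proof(history, 3)
print(verify_proof(root, b"block-3", 3, proof))   # genuine data is accepted
print(verify_proof(root, b"forged", 3, proof))    # altered data is rejected
```

The verifier never needs to trust the provider: any single participant holding the data can serve it, and a wrong answer simply fails the check against the root.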

This opens up a lot of options for how we store history. One natural option is a network where each node stores only a small portion of the data. This is how torrent networks have worked for decades: while the network as a whole stores and distributes millions of files, each participant stores and distributes only a few of them. Perhaps counterintuitively, this approach does not even necessarily reduce the robustness of the data. If, by making node running more affordable, we can get to a network of 100,000 nodes where each node stores a random 10% of the history, then each piece of data would be replicated 10,000 times: exactly the same replication factor as a 10,000-node network where every node stores everything.
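The replication arithmetic above can be checked with a short sketch. The function names are hypothetical, and the loss estimate assumes each node picks its share of history independently and uniformly at random, which is an idealization.

```python
import math


def replication_factor(num_nodes: int, percent_stored: int) -> int:
    """Expected number of copies of each piece of data."""
    return num_nodes * percent_stored // 100


def log10_loss_probability(num_nodes: int, percent_stored: int) -> float:
    """log10 of the chance that no node at all holds a given piece,
    assuming independent random choices (an idealized model)."""
    return num_nodes * math.log10(1 - percent_stored / 100)


print(replication_factor(100_000, 10))    # partial-storage network
print(replication_factor(10_000, 100))    # everyone-stores-everything network
print(log10_loss_probability(100_000, 10))
```

Both networks give the same replication factor, and under the independence assumption the chance of any one piece being lost entirely is vanishingly small.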

Today, Ethereum has already started to move away from the model of all nodes storing all history forever. Consensus blocks (i.e. the parts related to proof-of-stake consensus) are stored for only about 6 months. Blobs are stored for only about 18 days. EIP-4444 aims to introduce a one-year storage period for historical blocks and receipts. The long-term goal is to have a harmonized period (possibly about 18 days) during which every node is responsible for storing everything, and then a peer-to-peer network of Ethereum nodes that stores older data in a distributed way.

Erasure coding can be used to increase robustness while keeping the replication factor the same. In fact, blobs already come erasure-coded in order to support data availability sampling. The simplest solution may well be to reuse this erasure coding and put execution and consensus block data into blobs as well.

What existing research is there?

EIP-4444: https://eips.ethereum.org/EIPS/eip-4444

Torrents and EIP-4444: https://ethresear.ch/t/torrents-and-eip-4444/19788

Portal Network: https://ethereum.org/en/developers/docs/networking-layer/portal-network/

Portal Network and EIP-4444: https://github.com/ethereum/portal-network-specs/issues/308

Distributed storage and retrieval of SSZ objects in Portal: https://ethresear.ch/t/distributed-storage-and-cryptographically-secured-retrieval-of-ssz-objects-for-portal-network/19575

How to raise the gas limit (Paradigm): https://www.paradigm.xyz/2024/05/how-to-raise-the-gas-limit-2

What is left to do, and what are the tradeoffs?

The main remaining work involves building and integrating a concrete distributed solution for storing history: at least execution history, but ultimately also consensus data and blobs. The two easiest solutions are (i) simply using an existing torrent library, and (ii) an Ethereum-native solution called the Portal network. Once either of these is introduced, we can turn EIP-4444 on. EIP-4444 itself does not require a hard fork, but it does require a new network protocol version. For this reason, there is value in enabling it for all clients at the same time, because otherwise there is a risk of clients malfunctioning when they connect to other nodes expecting to download the full history and do not actually get it.

The main tradeoff involves how hard we try to keep “ancient” historical data available. The easiest solution would be to simply stop storing ancient history tomorrow, and rely on existing archive nodes and various centralized providers for replication. This is easy, but it weakens Ethereum’s position as a place to make permanent records. The harder but safer path is to first build and integrate the torrent network for storing history in a distributed way. Here, there are two dimensions:

- How hard do we try to make sure that a maximally large set of nodes really is storing all the data?

- How deeply do we integrate history storage into the protocol?

For (1), the most rigorous approach would involve proof of custody: actually requiring each proof-of-stake validator to store some percentage of history, and regularly checking cryptographically that they do so. A more moderate approach is to set a voluntary standard for what percentage of history each client stores.

For (2), a basic implementation involves just taking the work that is already done today: Portal already stores ERA files containing the entire Ethereum history. A more thorough implementation would involve actually hooking this up to the syncing process, so that anyone who wants to sync a full-history-storing node or an archive node can do so by syncing straight from the Portal network, even if no other archive nodes are online.

How does it interact with the rest of the roadmap?

If we want to make it extremely easy to run or spin up a node, then reducing history storage requirements is arguably even more important than statelessness: of the 1.1 TB that a node needs, about 300 GB is state and the remaining roughly 800 GB is history. The vision of an Ethereum node running on a smartwatch and taking only a few minutes to set up is achievable only if both statelessness and EIP-4444 are implemented.

Limiting history storage also makes it more viable for newer Ethereum node implementations to support only recent versions of the protocol, which allows them to be much simpler. For example, many lines of code can already be safely removed now that the empty storage slots created during the 2016 DoS attacks have all been deleted. Now that the switch to proof of stake is ancient history, clients can safely remove all proof-of-work-related code.

State expiry

What problems does it solve?

Even if we remove the need for clients to store history, a client’s storage requirement will continue to grow, by around 50 GB per year, because of ongoing growth of the state: account balances and nonces, contract code and contract storage. Users are able to pay a one-time fee to impose a burden on present and future Ethereum clients forever.

State is much harder to “expire” than history, because the EVM is fundamentally designed around the assumption that once a state object is created, it will always be there and can be read by any transaction at any time. If we introduce statelessness, there is an argument that this problem may not be that bad: only a specialized class of block builders would need to actually store state, and all other nodes (even inclusion list production!) could run statelessly. However, there is also an argument that we do not want to lean on statelessness too much, and that eventually we may want state expiry to keep Ethereum decentralized.

What is it, and how does it work?

Today, when you create a new state object (which can happen in one of three ways: (i) sending ETH to a new account, (ii) creating a new account with code, or (iii) setting a previously untouched storage slot), that state object is in the state forever. What we want instead is for objects to expire automatically over time. The key challenge is doing this in a way that achieves three goals:

- Efficiency: No large amount of extra computation is required to run the expiry process.

- User-friendliness: If someone goes into a cave for five years and comes back, they should not lose access to their ETH, ERC20s, NFTs, CDP positions…

- Developer-friendliness: Developers should not have to switch to a completely unfamiliar mental model. Furthermore, applications that are ossified today and no longer updated should continue to work reasonably well.

Without meeting these goals, the problem is easy to solve. For example, you could have each state object also store a counter for its expiry date (which could be extended by burning ETH, and this could happen automatically whenever the object is read or written), and have a process that loops through the state to remove expired state objects. However, this introduces extra computation (and even extra storage requirements), and it definitely does not satisfy the user-friendliness requirement. Developers too would have a hard time reasoning about edge cases involving storage values sometimes resetting to zero. If you make the expiry timer contract-wide, this makes life technically easier for developers, but it makes the economics harder: developers would have to think about how to “pass through” the ongoing costs of storage to their users.

These are problems that the Ethereum core development community struggled with for many years, including proposals like “blockchain rent” and “regenesis”. Eventually, we combined the best parts of the proposals and converged on two categories of “known least bad solutions”:

- Partial state expiry solutions.

- Address-period-based state expiry proposals.

Partial state expiry

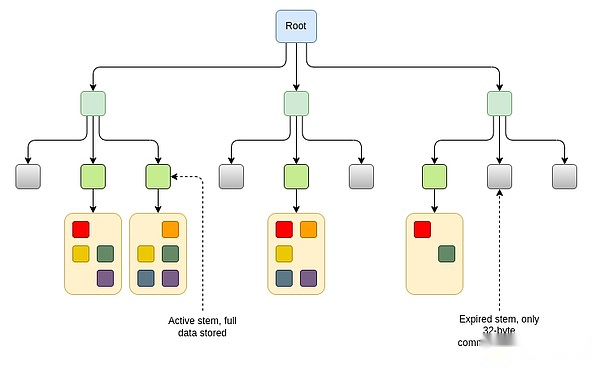

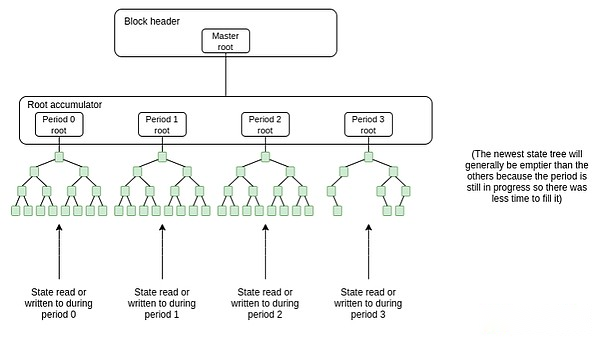

Partial state expiry proposals all work along the same principle. We split the state into chunks. Everyone permanently stores the “top-level map” of which chunks are empty or non-empty. The data within each chunk is only stored if it has been recently accessed. There is a “resurrection” mechanism: if a chunk is no longer stored, anyone can bring the data back by providing a proof of what the data was.

The main distinctions between these proposals are: (i) how do we define “recently”, and (ii) how do we define “chunk”? One concrete proposal is EIP-7736, which builds on the “stem-and-leaf” design introduced for Verkle trees (though it is compatible with any form of stateless tree, e.g. binary trees). In this design, headers, code and storage slots that are adjacent to each other are stored under the same “stem”. The data stored under one stem can be at most 256 * 31 = 7,936 bytes. In many cases, the entire header and code of an account, and many key storage slots, will all be stored under the same stem. If the data under a given stem is not read or written for 6 months, the data is no longer stored, and only a 32-byte commitment (“stub”) to the data is kept. Future transactions that access that data would need to “resurrect” it, providing a proof that is checked against the stub.
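The expire-then-resurrect cycle described above can be sketched as a toy model. This is not the EIP-7736 mechanism itself: the `Stem` class, the use of SHA-256 as the 32-byte commitment, and measuring time in abstract "months" are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional

MAX_STEM_BYTES = 256 * 31   # upper bound on data under one stem, from the text
EXPIRY_MONTHS = 6           # inactivity window, from the text


def commit(data: bytes) -> bytes:
    """Stand-in 32-byte commitment (real designs use tree commitments)."""
    return hashlib.sha256(data).digest()


@dataclass
class Stem:
    data: Optional[bytes]          # None once the data has expired
    last_access: int               # month of the last read or write
    stub: Optional[bytes] = None   # 32-byte commitment kept after expiry

    def read(self, now: int) -> bytes:
        if self.data is None:
            raise KeyError("stem expired: resurrect it with a proof first")
        self.last_access = now
        return self.data

    def maybe_expire(self, now: int) -> None:
        """Drop the data but keep the stub if untouched for too long."""
        if self.data is not None and now - self.last_access >= EXPIRY_MONTHS:
            self.stub = commit(self.data)
            self.data = None

    def resurrect(self, claimed_data: bytes, now: int) -> bool:
        """Anyone may restore the data if it matches the stored stub."""
        if self.data is not None or commit(claimed_data) != self.stub:
            return False
        self.data, self.stub, self.last_access = claimed_data, None, now
        return True


stem = Stem(data=b"balance=5", last_access=0)
stem.maybe_expire(now=6)                       # six idle months: data is dropped
print(stem.data, len(stem.stub))               # only the 32-byte stub remains
print(stem.resurrect(b"balance=999", now=7))   # wrong data is rejected
print(stem.resurrect(b"balance=5", now=7))     # correct data is restored
```

The point of the stub is that a node needs only 32 bytes per expired stem to reject forged resurrections.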

There are other ways to implement a similar idea. For example, if account-level granularity is not enough, we could make a scheme where each 1/2^32 fraction of the tree is governed by a similar stem-and-leaf mechanism.

This is trickier because of incentives: an attacker could force clients to permanently store a very large amount of state by putting a very large amount of data into a single subtree and sending a single transaction every year to “renew the tree”. If you make the renewal cost proportional to the tree size (or the renewal duration inversely proportional to it), then someone could grief another user by putting a very large amount of data into the same subtree as them. One could try to limit both problems by making the granularity dynamic based on subtree size: for example, each consecutive 2^16 = 65,536 state objects could be treated as a “group”. However, these ideas are more complex; the stem-based approach is simple, and it aligns incentives, because typically all the data under a stem is related to the same application or user.

Address-period-based state expiry proposals

What if we want to avoid any permanent state growth at all, even 32-byte stubs? Here there is a hard problem: what if a state object gets removed, later EVM execution puts another state object in the exact same position, and after that someone who cares about the original state object comes back and tries to recover it? With partial state expiry, the stub prevents new data from being created. With full state expiry, we cannot afford to store even the stub.

The address-period-based design is the best known idea for solving this. Instead of having one state tree storing the whole state, we have a constantly growing list of state trees, and any state that gets read or written is saved in the most recent state tree. A new empty state tree gets added once per period (think: 1 year). Older state trees are frozen. Full nodes are only expected to store the two most recent trees. If a state object was not touched for two periods and thus falls into an expired tree, it can still be read or written, but the transaction must provide a Merkle proof for it; once it does, a copy is saved again in the latest tree.

A key idea for making this all user- and developer-friendly is the concept of address periods. An address period is a number that is part of an address. The key rule is that an address with address period N can only be read or written during or after period N (i.e. once the state tree list reaches length N). If you are saving a new state object (e.g. a new contract, or a new ERC20 balance) and you make sure to put it into a contract whose address period is either N or N-1, then you can save it immediately, without needing to provide proofs that there was nothing there before. Any additions or edits to state in older address periods, on the other hand, do require a proof.
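The rules above can be sketched as a toy model. Everything here is hypothetical scaffolding: the `PeriodicState` class, addresses as `(address_period, name)` tuples, and a "proof" that is just the claimed old value compared against the frozen trees (standing in for a Merkle proof checked against a frozen tree's root).

```python
NO_PROOF = object()   # sentinel: the transaction supplied no proof


class PeriodicState:
    def __init__(self):
        self.trees = [{}]               # trees[p - 1] is the tree for period p

    @property
    def period(self) -> int:
        return len(self.trees)          # current period N == length of the list

    def advance_period(self) -> None:
        self.trees.append({})           # older trees are now frozen

    def _lookup(self, trees, addr):
        for tree in reversed(trees):    # newest tree first
            if addr in tree:
                return tree[addr]
        return None

    def access(self, addr, new_value=None, claimed_old_value=NO_PROOF):
        addr_period, _ = addr
        if addr_period > self.period:
            raise ValueError("address period has not started yet")
        value = self._lookup(self.trees[-2:], addr)    # what a full node stores
        if value is None and addr_period < self.period - 1:
            # The object may live (or be absent) in a frozen tree: need a proof.
            if claimed_old_value is NO_PROOF:
                raise PermissionError("proof required for old address period")
            if claimed_old_value != self._lookup(self.trees[:-2], addr):
                raise PermissionError("proof does not match frozen trees")
            value = claimed_old_value
        if new_value is not None:
            value = new_value
        if value is not None:
            self.trees[-1][addr] = value               # re-save in latest tree
        return value


state = PeriodicState()
alice = (1, "alice")
state.access(alice, new_value=10)              # period 1: no proof needed
for _ in range(3):
    state.advance_period()                     # now in period 4
print(state.access(alice, claimed_old_value=10))   # revived with a proof
print(state.access(alice))                          # now recent again
print(state.access((4, "bob"), new_value=5))        # fresh address: no proof
```

In a real design the full node would hold only the roots of frozen trees rather than the trees themselves; the sketch keeps them in memory purely so the "proof" can be checked.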

This design preserves most of Ethereum’s current properties, is very light on extra computation, allows applications to be written almost as they are today (ERC20s would need to be rewritten to ensure that balances of addresses with address period N are stored in a child contract which itself has address period N), and solves the “user goes into a cave for five years” problem. However, it has one big issue: addresses need to be expanded beyond 20 bytes to fit address periods.

Address space extension

One proposal is to introduce a new 32-byte address format that includes a version number, an address period number and an expanded hash.

0x01000000000157aE408398dF7E5f4552091A69125d5dFcb7B8C2659029395bdF

The fields are color-coded in the original: red is the version number, the four orange zeros are blank space that could hold a shard number in the future, green is the address period number, and blue is a 26-byte hash.

The key challenge here is backward compatibility. Existing contracts are designed around 20-byte addresses, and often use tight byte-packing techniques that explicitly assume addresses are exactly 20 bytes long. One idea for solving this is a translation map, where old-style contracts interacting with new-style addresses would see a 20-byte hash of the new-style address. However, there are significant complexities involved in making this safe.
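As a rough illustration of the layout, the example address can be split into its fields. The field widths used here (1-byte version, 2 reserved bytes, 3-byte address period, 26-byte hash) are inferred from the description and the 32-byte total, not taken from a specification, and `parse_extended_address` is a made-up helper name.

```python
def parse_extended_address(addr_hex: str) -> dict:
    """Split a 32-byte extended address into its (assumed) fields."""
    digits = addr_hex[2:] if addr_hex.startswith("0x") else addr_hex
    raw = bytes.fromhex(digits)
    if len(raw) != 32:
        raise ValueError("expected a 32-byte address")
    return {
        "version": raw[0],                                  # 1 byte
        "reserved": raw[1:3],                               # 2 bytes (four hex zeros)
        "address_period": int.from_bytes(raw[3:6], "big"),  # 3 bytes (assumed width)
        "hash": raw[6:],                                    # 26 bytes
    }


example = "0x01000000000157aE408398dF7E5f4552091A69125d5dFcb7B8C2659029395bdF"
fields = parse_extended_address(example)
print(fields["version"], fields["address_period"], len(fields["hash"]))
```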

Address space contraction

The opposite approach: we immediately forbid some 2^128-sized sub-range of addresses (e.g. all addresses starting with 0xffffffff), and then use that range to introduce addresses with address periods and 14-byte hashes.

0xffffffff000169125d5dFcb7B8C2659029395bdF

The key sacrifice this approach makes is that it introduces security risks for counterfactual addresses: addresses that hold assets or permissions, but whose code has not yet been published to chain. The risk involves someone creating an address that claims to have one piece of (not-yet-published) code, while also having another valid piece of code that hashes to the same address. Computing such a collision requires 2^80 hashes today; address space contraction would reduce this number to a very accessible 2^56 hashes.

The key area of risk, counterfactual addresses that are not wallets held by a single owner, is a relatively rare case today, but it will likely become more common as we enter a multi-L2 world. The only solution is to simply accept this risk, but identify all common use cases where it may be an issue and come up with effective workarounds.
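The two figures follow from the birthday bound: finding any collision in an n-bit hash takes on the order of 2^(n/2) attempts. A minimal sketch of that arithmetic, with a hypothetical helper name:

```python
def collision_work_log2(hash_bytes: int) -> int:
    """log2 of the approximate number of hashes needed to find a collision
    (birthday bound: about 2^(bits / 2) attempts)."""
    return hash_bytes * 8 // 2


print(collision_work_log2(20))   # today's 20-byte addresses
print(collision_work_log2(14))   # 14-byte hash after address space contraction
# Contraction makes a collision 2^(80 - 56) = 2^24 times cheaper to find.
print(collision_work_log2(20) - collision_work_log2(14))
```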

What existing research is there?

Early proposals

Blockchain rent fees: https://github.com/ethereum/EIPs/issues/35

Ethereum state size management theory: https://hackmd.io/@vbuterin/state_size_management

Several possible paths to statelessness and state expiry: https://hackmd.io/@vbuterin/state_expiry_paths

Partial state expiry proposals

EIP-7736: https://eips.ethereum.org/EIPS/eip-7736

Address space extension documents

Original proposal: https://ethereum-magicians.org/t/increasing-address-size-from-20-to-32-bytes/5485

Ipsilon review: https://notes.ethereum.org/@ipsilon/address-space-extension-exploration

Blog post review: https://medium.com/@chaisomsri96/statelessness-series-part2-ase-address-space-extension-60626544b8e6

What would break if we lose address collision resistance: https://ethresear.ch/t/what-would-break-if-we-lose-address-collision-resistant/11356

What is left to do, and what are the tradeoffs?

I see four viable paths for the future:

- We do statelessness and never introduce state expiry. State keeps growing (albeit slowly: we may not see it exceed 8 TB for decades), but it only needs to be held by a relatively specialized class of users: not even PoS validators would need state.

  The one function that needs access to parts of the state is inclusion list production, but we can accomplish this in a decentralized way: each user is responsible for maintaining the portion of the state tree that contains their own accounts. When they broadcast a transaction, they broadcast it with a proof of the state objects accessed during the verification step (this works for both EOAs and ERC-4337 accounts). Stateless validators can then combine these proofs into a proof for the whole inclusion list.

- We do partial state expiry, and accept a much lower but still nonzero rate of permanent state size growth. This outcome is arguably similar to how history expiry proposals involving peer-to-peer networks accept a much lower but still nonzero rate of permanent history storage growth, from each client having to store a low but fixed percentage of the historical data.

- We do state expiry with address space expansion. This will involve a multi-year process of making sure that the address format conversion approach works and is safe, including for existing applications.

- We do state expiry with address space contraction. This will involve a multi-year process of making sure that all of the security risks involving address collisions, including cross-chain situations, are handled.

One important point is that the difficult issues around address space expansion and contraction will eventually have to be addressed regardless of whether state expiry schemes that depend on address format changes are ever implemented. Today, it takes roughly 2^80 hashes to generate an address collision, a computational load that is already feasible for extremely well-resourced actors: a GPU can do about 2^27 hashes per second, so running for a year it can compute about 2^52, and so all of the roughly 2^30 GPUs in the world could compute a collision in about 1/4 of a year; FPGAs and ASICs could accelerate this further. In the future, such attacks will become open to more and more people. Hence, the actual cost of implementing full state expiry may not be as high as it seems, since we have to solve this very challenging address problem regardless.
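The estimate above can be reproduced with a few lines of arithmetic. The per-GPU rate is read as hashes per second (an assumption that makes the yearly figure come out to about 2^52), and the function name is made up for this sketch.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600   # about 2^24.9


def years_to_collide(work_log2: int, rate_log2: int, gpus_log2: int) -> float:
    """Years needed to perform 2^work_log2 hashes with 2^gpus_log2 GPUs,
    each doing 2^rate_log2 hashes per second."""
    hashes_per_second = 2 ** (rate_log2 + gpus_log2)
    return 2 ** work_log2 / hashes_per_second / SECONDS_PER_YEAR


# One GPU for one year: log2 of the number of hashes computed.
print(math.log2(2 ** 27 * SECONDS_PER_YEAR))
# All ~2^30 GPUs working on a 2^80 collision search, in years.
print(years_to_collide(80, 27, 30))
```

The same function gives the picture after address space contraction: with only 2^56 work, a single GPU at this rate finishes in years rather than needing the whole world's hardware.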

How does it interact with the rest of the roadmap?

Doing state expiry potentially makes transitions from one state tree format to another easier, because there is no need for a transition procedure: you could simply start making new trees using the new format, and then later do a hard fork to convert the older trees. So while state expiry is complex, it does have benefits in simplifying other aspects of the roadmap.

Feature cleanup

What problems does it solve?

One of the key preconditions of security, accessibility and trustworthiness is simplicity. If a protocol is beautiful and simple, it reduces the likelihood of bugs. It increases the chance that new developers can come in and work with any part of it. It is more likely to be fair and easier to defend against special interests. Unfortunately, protocols, like any social system, by default become more complex over time. If we do not want Ethereum to fall into a black hole of ever-increasing complexity, we need to do one of two things: (i) stop making changes and ossify the protocol, or (ii) be able to actually remove features and reduce complexity. An intermediate route, of making fewer changes to the protocol while also removing at least a little complexity over time, is also possible. This section discusses how we can reduce or remove complexity.

What is it, and how does it work?

There is no large single fix that reduces protocol complexity; the essence of the problem is that there are many small fixes.

One example that is already mostly finished, and that can serve as a blueprint for handling the others, is the removal of the SELFDESTRUCT opcode. SELFDESTRUCT was the only opcode that could modify an unlimited number of storage slots within a single block, requiring clients to implement significantly more complexity to avoid DoS attacks. The opcode’s original purpose was to enable voluntary state clearing, allowing the state size to decrease over time. In practice, very few ended up using it. In the Dencun hard fork, the opcode was weakened to only allow self-destructing accounts created in the same transaction. This solves the DoS issue and allows for significant simplification in client code. In the future, it likely makes sense to eventually remove the opcode completely.

Some key examples of protocol simplification opportunities identified so far include the following. First, some examples outside the EVM; these are relatively non-invasive, and thus easier to reach consensus on and implement in a shorter timeframe.

- RLP → SSZ conversion: Originally, Ethereum objects were serialized using an encoding called RLP. Today, the beacon chain uses SSZ, which is significantly better in many ways, including supporting not just serialization but also hashing. Eventually, we want to get rid of RLP entirely and move all data types to SSZ structs, which would in turn make upgradability much easier. Currently proposed EIPs for this include [1] [2] [3].

- Removing old transaction types: There are too many transaction types today, and many of them could potentially be removed. A more moderate alternative to full removal is an account abstraction feature by which smart accounts could include the code to process and verify old-style transactions if they so choose.

- LOG reform: Logs create bloom filters and other logic that add complexity to the protocol, but clients do not actually use them because they are too slow. We could remove these features and instead put effort into alternatives, such as extra-protocol decentralized log reading tools that use modern technology like SNARKs.

- Eventually removing the beacon chain sync committee mechanism: The sync committee mechanism was originally introduced to enable light client verification of Ethereum. However, it adds significant protocol complexity. Eventually, we will be able to verify the Ethereum consensus layer directly using SNARKs, which would remove the need for a dedicated light client verification protocol. Sync committees could potentially be removed even earlier, by creating a more “native” light client protocol that involves verifying signatures from a random subset of the Ethereum consensus validators.

- Data format harmonization: Today, execution state is stored in a Merkle Patricia tree, consensus state is stored in an SSZ tree, and blobs are committed to with KZG commitments. In the future, it makes sense to create a single unified format for block data and a single unified format for state. These formats would cover all the important needs: (i) easy proofs for stateless clients, (ii) serialization and erasure coding for data, and (iii) standardized data structures.

- Removing beacon chain committees: This mechanism was originally introduced to support a particular version of execution sharding. Instead, we ended up doing sharding through L2s and blobs. Hence, committees are unnecessary, and there is an in-progress move toward removing them.

- Removing mixed endianness: The EVM is big-endian and the consensus layer is little-endian. It may make sense to re-harmonize and make everything one or the other (likely big-endian, because the EVM is harder to change).
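The mixed-endianness item can be made concrete with a small sketch. The helper names are made up; the widths reflect the 32-byte EVM word and SSZ's little-endian `uint64` encoding.

```python
def evm_word(value: int) -> bytes:
    """An integer as a 32-byte big-endian word, as the EVM lays it out."""
    return value.to_bytes(32, "big")


def ssz_uint64(value: int) -> bytes:
    """The same integer as an 8-byte little-endian SSZ uint64."""
    return value.to_bytes(8, "little")


slot = 0x0102
print(evm_word(slot).hex())     # most significant byte first: ...0102
print(ssz_uint64(slot).hex())   # least significant byte first: 0201...
```

Any code that moves values between the two layers has to convert between these layouts, which is the kind of incidental complexity harmonization would remove.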

Now, some examples inside the EVM:

- Simplify gas mechanics: Current gas rules are not well-optimized for giving clear limits on the quantity of resources required to verify a block. Key examples include (i) storage read/write costs, which are meant to bound the number of reads/writes in a block but are currently quite haphazard, and (ii) memory filling rules, under which it is currently hard to estimate the maximum memory consumption of the EVM. Proposed fixes include stateless gas cost changes, which harmonize all storage-related costs into a simple formula, and a proposal for memory pricing.

- Remove precompiles: Many of the precompiles that Ethereum has today are needlessly complex and relatively unused; they account for a large share of consensus failure risk while being used by hardly any applications. Two ways of dealing with this are (i) simply removing the precompile, and (ii) replacing it with an (inevitably more expensive) piece of EVM code that implements the same logic. One draft EIP proposes doing this for the identity precompile first; later, RIPEMD160, MODEXP and BLAKE may be candidates for removal.

- Remove gas observability: Make EVM execution no longer able to see how much gas it has left. This would break a few applications (most notably sponsored transactions), but would make future upgrades much easier (e.g. to more advanced versions of multidimensional gas). The EOF spec already makes gas unobservable, though to be useful for protocol simplification, EOF would need to become mandatory.

- Improvements to static analysis: Today, EVM code is difficult to statically analyze, particularly because jumps can be dynamic. This also makes it harder to build optimized EVM implementations that pre-compile EVM code into some other language. We can potentially fix this by removing dynamic jumps (or making them much more expensive, e.g. with a gas cost linear in the total number of JUMPDESTs in a contract). EOF does this, though getting protocol simplification gains from it would require making EOF mandatory.
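To show why dynamic jumps complicate analysis, here is a minimal sketch of the scan needed just to learn where a jump may legally land: a 0x5b byte is a JUMPDEST only if it is not part of a PUSH instruction's immediate data. The `dynamic_jump_gas` function and its constants are hypothetical, illustrating the "cost linear in the number of JUMPDESTs" idea rather than any proposed pricing.

```python
from typing import Set

JUMPDEST = 0x5B
PUSH1, PUSH32 = 0x60, 0x7F


def valid_jumpdests(code: bytes) -> Set[int]:
    """Return the byte offsets that are real JUMPDEST instructions."""
    dests = set()
    pc = 0
    while pc < len(code):
        op = code[pc]
        if op == JUMPDEST:
            dests.add(pc)
        elif PUSH1 <= op <= PUSH32:
            pc += op - PUSH1 + 1      # skip the 1..32 bytes of push data
        pc += 1
    return dests


def dynamic_jump_gas(code: bytes, base: int = 8, per_dest: int = 1) -> int:
    """Hypothetical pricing: a jump costs more in contracts with more targets."""
    return base + per_dest * len(valid_jumpdests(code))


# PUSH1 0x5b, JUMPDEST, PUSH2 0x5b5b, JUMPDEST
code = bytes([0x60, 0x5B, 0x5B, 0x61, 0x5B, 0x5B, 0x5B])
print(sorted(valid_jumpdests(code)))
print(dynamic_jump_gas(code))
```

Because the target of a dynamic jump is computed at run time, a compiler or analyzer must treat every offset in this set as a possible destination; with static jumps only, each jump names its single target up front.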

What existing research is there?

Next steps for the Purge: https://notes.ethereum.org/I_AIhySJTTCYau_adoy2TA

SELFDESTRUCT: https://hackmd.io/@vbuterin/selfdestruct

SSZ-ification EIPs: [1] [2] [3]

Stateless gas cost changes: https://eips.ethereum.org/EIPS/eip-4762

Linear memory pricing: https://notes.ethereum.org/ljPtSqBgR2KNssu0YuRwXw

Precompile removal: https://notes.ethereum.org/IWtX22YMQde1K_fZ9psxIg

Bloom filter removal: https://eips.ethereum.org/EIPS/eip-7668

A method for off-chain secure log retrieval using incrementally verifiable computation (read: recursive STARKs): https://notes.ethereum.org/XZuqy8ZnT3KeG1PkZpeFXw

What is left to do, and what are the tradeoffs?

The main tradeoff in this kind of feature simplification is between (i) how much and how quickly we simplify and (ii) backward compatibility. Ethereum’s value as a chain comes from being a platform where you can deploy an application and be confident that it will still work many years from now. At the same time, it is possible to take that ideal too far and, to paraphrase William Jennings Bryan, “crucify Ethereum on a cross of backward compatibility”. If there are only two applications in all of Ethereum that use a given feature, one of which has had zero users for years and the other is almost completely unused and secures a total of $57 of value, then we should just remove the feature and, if needed, pay the victims $57 out of pocket.

The broader social problem is in creating a standardized pipeline for making non-emergency backward-compatibility-breaking changes. One way to approach this is to examine and extend existing precedents, such as the SELFDESTRUCT process. The pipeline looks something like this:

- Step 1: Start talking about removing feature X.

- Step 2: Do analysis to identify how much removing X would break applications, and depending on the results either (i) abandon the idea, (ii) proceed as planned, or (iii) identify a modified “least-disruptive” way to remove X and proceed with that.

- Step 3: Make a formal EIP to deprecate X. Make sure that popular higher-level infrastructure (e.g. programming languages, wallets) respects this and stops using the feature.

- Step 4: Finally, actually remove X.

There should be a multi-year pipeline between step 1 and step 4, with clear information about which items are at which step. At that point, there is a tradeoff between how vigorous and fast the feature-removal pipeline is, versus being more conservative and putting more resources into other areas of protocol development, but we are still far from the Pareto frontier.

EOF

One major set of changes proposed for the EVM is the EVM Object Format (EOF). EOF introduces a large number of changes, such as banning gas observability, banning code observability (i.e. no CODECOPY), and allowing static jumps only. The goal is to allow the EVM to be upgraded further, in ways that have stronger properties, while preserving backward compatibility (as the pre-EOF EVM will still exist).

The advantage of this is that it creates a natural path to adding new EVM features and encouraging migration to a stricter EVM with stronger guarantees. The disadvantage is that it significantly increases protocol complexity, unless we can find a way to eventually deprecate and remove the old EVM. One major question is: what role does EOF play in EVM simplification proposals, especially if the goal is to reduce the complexity of the EVM as a whole?

How does it interact with the rest of the roadmap?

Many of the “improvement” proposals in the rest of the roadmap are also opportunities to simplify old features. To repeat some examples from above:

- Switching to single-slot finality gives us an opportunity to remove committees, rework the economics, and make other proof-of-stake-related simplifications.

- Fully implementing account abstraction lets us remove a lot of existing transaction-handling logic by moving it into a piece of “default account EVM code” that all EOAs could be replaced by.

- If we move the Ethereum state to binary hash trees, this could be harmonized with a new version of SSZ, so that all Ethereum data structures could be hashed in the same way.

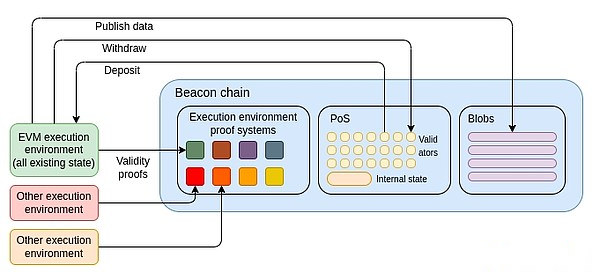

A more radical approach: convert most of the protocol into contract code

A more radical Ethereum simplification strategy is to keep the protocol as is, but move large parts of it from being protocol features to being contract code.

The most extreme version of this would be to make the Ethereum L1 “technically” be just the beacon chain, and introduce a minimal VM (e.g. RISC-V, Cairo, or something even more minimal specialized for proving systems) that allows anyone else to create their own rollup. The EVM would then become the first of these rollups. Ironically, this is exactly the same outcome as the execution environment proposals from 2019-20, though SNARKs make it significantly more viable to actually implement.

A more moderate approach would be to keep the relationship between the beacon chain and the current Ethereum execution environment as is, but do an in-place swap of the EVM. We could choose RISC-V, Cairo or another VM to be the new “official Ethereum VM”, and then force-convert all EVM contracts into new-VM code that interprets the logic of the original code (by compiling or interpreting it). Theoretically, this could even be done with the “target VM” being a version of EOF.

Special thanks to Justin Drake, Tim Beiko, Matt Garnett, Piper Merriam, Marius van der Wijden and Tomasz Stanczak for their feedback and comments.