Thirteen tweets in a row!

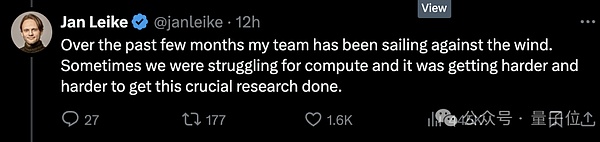

Jan Leike, head of OpenAI’s Superalignment team and the person who just followed Ilya out the door, has revealed the real reasons for his resignation, along with more inside information.

First, there wasn’t enough compute. The Superalignment team never fully received the 20% of computing power it had been promised, leaving it sailing against the wind and finding its work increasingly difficult.

Second, safety isn’t taken seriously. AGI safety and governance have taken a back seat to launching “shiny products.”

Soon after, others dug up more gossip.

For example, every OpenAI employee has to sign an agreement promising not to criticize OpenAI after leaving. Anyone who refuses is deemed to have automatically forfeited their company equity.

But some holdouts still refused to sign and came forward with big news (hilariously), saying the core leadership had long been divided over how to prioritize safety.

Since last year’s boardroom drama, the conflict between the two camps had reached a breaking point, and now things seem to have fallen apart, if politely.

So although Sam Altman has assigned a co-founder to take over the Superalignment team, outsiders remain unconvinced.

Onlookers on Twitter following the drama thanked Jan for having the courage to spill this bombshell, and sighed:

I knew it: OpenAI really doesn’t seem to take safety very seriously!

Meanwhile, Altman, now firmly in charge of OpenAI, is keeping his composure for the time being.

He came out to thank Jan for his contributions to OpenAI’s alignment research and safety culture, saying he was very sad to see him leave.

Of course, the real point is this line:

Wait, I’ll post a longer tweet in the next couple of days.

The promised 20% of compute was partly an empty promise

From last year’s OpenAI boardroom battle until now, Ilya, the company’s soul and former chief scientist, has almost never appeared or spoken in public.

Before he publicly announced his resignation, speculation ran wild. Many people believed Ilya had seen something terrifying, such as an AI system that could destroy humanity.

△Netizen: The first thing I think about every morning when I wake up is what Ilya saw

This time, Jan spoke out bluntly: the core reason was that the technical camp and the market camp disagreed on how to prioritize safety.

The disagreement runs deep, and the consequences… well, everyone has seen them.

According to Vox, a source familiar with OpenAI said that safety-minded employees had lost faith in Altman: “It’s a process of trust collapsing bit by bit.”

But as you can see, few employees are willing to discuss this openly, whether on public platforms or in public settings.

Part of the reason is that OpenAI has long had departing employees sign an offboarding agreement containing a non-disparagement clause. Refusing to sign means giving up the OpenAI equity you have already received, so employees who speak out stand to lose a great deal of money.

Still, the dominoes kept falling one by one:

Ilya’s resignation has accelerated OpenAI’s recent wave of departures.

Since he announced his resignation, at least five members of the safety team have left, in addition to Jan, head of the Superalignment team.

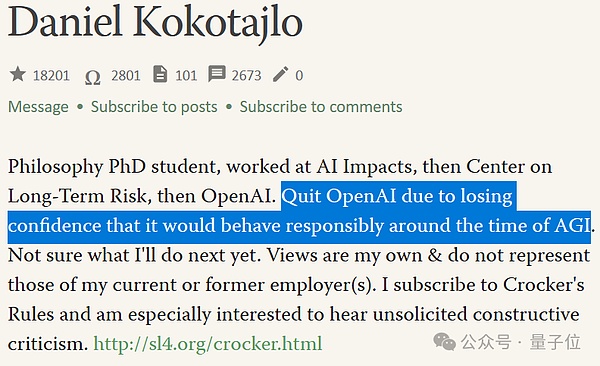

Among them is another holdout who refused to sign the non-disparagement agreement: Daniel Kokotajlo (hereafter “DK”).

△Last year, DK wrote that he puts the probability of an AI-driven existential catastrophe at 70%

DK joined OpenAI in 2022 and worked on the governance team, where his main job was to steer OpenAI toward deploying AI safely.

But he, too, resigned recently, and said in an interview:

OpenAI is training ever more powerful AI systems, with the goal of eventually surpassing human intelligence.

This could be the best thing that has ever happened to humanity, but it could also be the worst if we don’t proceed with care.

DK explained that when he joined OpenAI, he was full of ambition and hope for safety governance, expecting that the closer OpenAI got to AGI, the more responsibly it would act. But like many others on the team, he gradually realized OpenAI would not behave that way.

“I gradually lost trust in OpenAI leadership and their ability to responsibly handle AGI.” That is why DK resigned.

Disillusionment with the future of AGI safety work is part of the reason so many people have left in the wave of departures that Ilya’s exit accelerated.

Another reason is that the Superalignment team probably never had the abundant research resources outsiders imagined.

Even working at full capacity, the team was entitled to at most the 20% of compute OpenAI had promised.

And some of the team’s requests were frequently denied.

Partly, this is because compute is extremely precious to AI companies and every bit of it must be allocated carefully. It is also because the Superalignment team’s job is to “solve the different kinds of safety problems that would arise if the company succeeds in building AGI.”

In other words, the Superalignment team deals with safety problems OpenAI will face in the future. The key word is “future”: nobody knows whether those problems will ever materialize.

As of publication, Altman has not yet released his “longer tweet” (longer than Jan’s disclosures).

But he briefly acknowledged that Jan was right about the safety concerns: “We have a lot more to do; we are committed to doing it.”

For now, everyone can pull up a stool, and we’ll watch the rest of the drama unfold together.

In short, the Superalignment team has lost many people, and the departures of Ilya and Jan in particular have left it leaderless in the middle of the storm.

The follow-up arrangement: co-founder John Schulman takes over, but there is no longer a dedicated team.

The new superalignment effort will be a more loosely connected group, with members spread across the company’s various departments; an OpenAI spokesperson described this as “deeper integration.”

Outsiders have questioned this too, because John’s original full-time job was ensuring the safety of OpenAI’s current products.

Can John cope with the sudden extra responsibility and lead two teams, one focused on present safety issues and the other on future ones?

The Ilya-Altman battle

Take a longer view, and today’s breakup is really a sequel to the Ilya-Altman battle at the heart of OpenAI’s boardroom drama.

Back in November last year, while Ilya was still on the board, he joined with the rest of the OpenAI board of directors in trying to fire Altman.

The stated reason was that Altman had not been consistently candid in his communications. In other words: we don’t trust him.

The outcome is well known. Altman threatened to join Microsoft along with his “allies,” the board capitulated, and the ouster failed. Ilya left the board, while Altman brought in new board members more favorable to him.

After that, Ilya vanished from social media until he officially announced his resignation a few days ago. He reportedly had not been seen at the OpenAI office for about six months.

During that period he posted a cryptic tweet, which he quickly deleted:

I’ve learned many lessons over the past month. One of them is that the phrase “the beatings will continue until morale improves” applies more often than it should.

However, according to insiders, Ilya had been co-leading the Superalignment team remotely.

As for Altman, employees’ biggest complaint is that his words don’t match his actions. For example, he claimed to prioritize safety, but his behavior contradicted that.

Beyond failing to deliver the promised compute, there were other questionable moves, such as seeking money from Saudi Arabia and others a while ago to build chips.

Safety-minded employees were baffled:

If he truly cared about building and deploying AI as safely as possible, would he really be stockpiling chips so frantically to accelerate the technology?

Earlier, OpenAI also ordered chips worth as much as US$51 million (approximately RMB 360 million) from a startup Altman had invested in.

The portrait of Altman painted in a letter from former OpenAI employees during the boardroom drama now seems to be borne out once again.

It is precisely this persistent gap between words and deeds that has gradually eroded confidence in OpenAI and Altman.

That goes for Ilya, for Jan Leike, and for the Superalignment team as a whole.

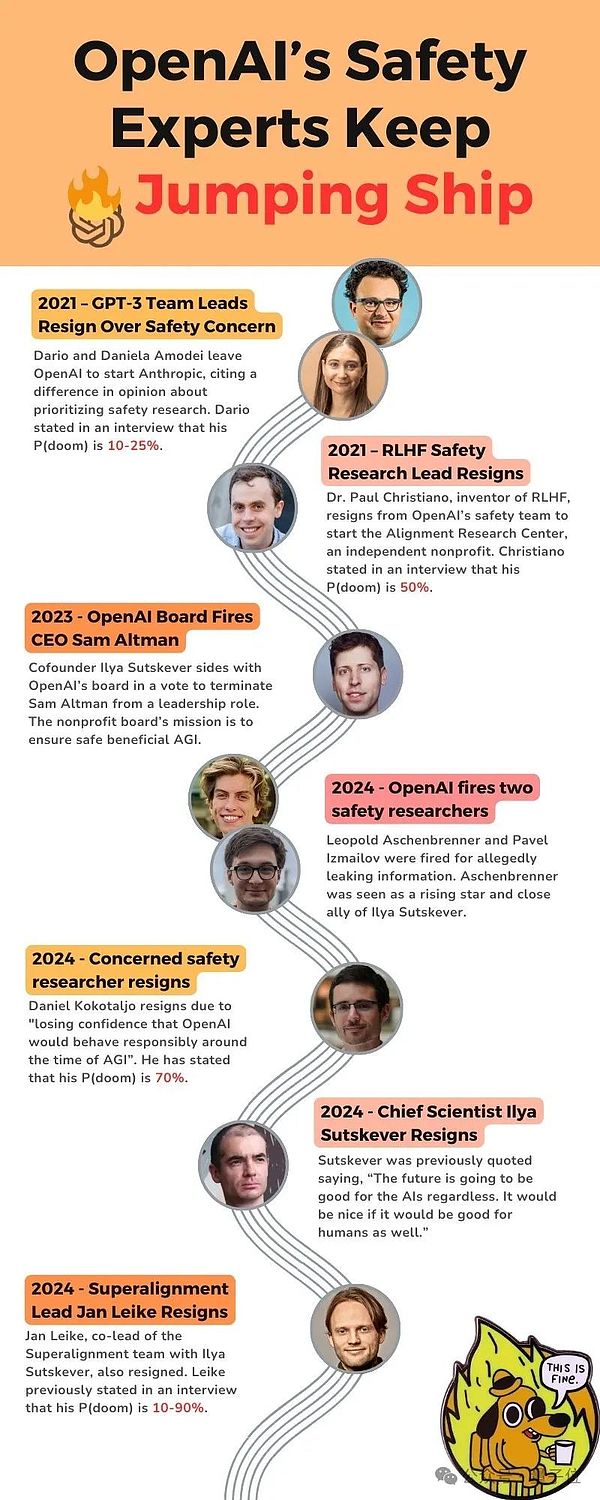

Some thoughtful netizens have compiled a timeline of key events from the past few years. A quick note first: P(doom) below refers to the “probability of AI triggering a doomsday scenario.”

In 2021, the head of the GPT-3 team left OpenAI over “safety” concerns and founded Anthropic; one of its founders put P(doom) at 10-25%;

In 2021, the head of RLHF safety research resigned; his P(doom) is 50%;

In 2023, OpenAI’s board of directors fired Altman;

In 2024, OpenAI fired two safety researchers;

In 2024, an OpenAI researcher particularly concerned about safety left; he puts P(doom) at 70%;

In 2024, Ilya and Jan Leike resigned.

Technology or market?

Since large models took off, approaches to “how do we achieve AGI?” have essentially split into two camps.

The technology camp wants the technology to be mature and controllable before it is deployed; the market camp believes that an incremental approach, opening up and deploying along the way, is what will reach the finish line.

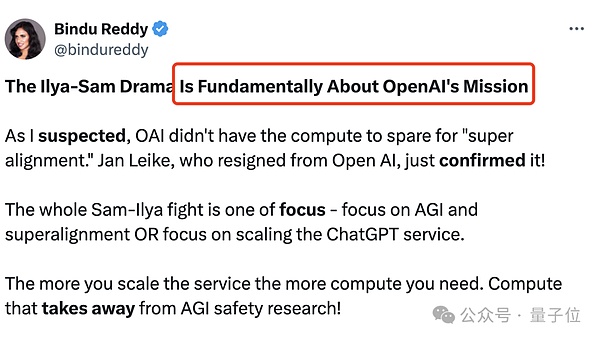

This is also the root of the Ilya-Altman dispute, which comes down to OpenAI’s mission:

Focus on AGI and superalignment, or focus on expanding ChatGPT services?

The bigger ChatGPT gets, the more compute it requires, and that also eats into the time available for AGI safety research.

If OpenAI were a nonprofit devoted to research, it would spend more time on superalignment.

Judging by some of OpenAI’s outward moves, that is clearly not the case. It simply wants to lead the large-model race and offer more services to businesses and consumers.

In Ilya’s view, this is very dangerous. Even if we don’t know what will happen as scale grows, the best approach is to put safety first.

Be open and transparent, so that we humans can ensure AGI is built safely rather than in secret.

But under Altman’s leadership, OpenAI seems to be pursuing neither open source nor superalignment. Instead, it is racing toward AGI while trying to build a moat.

So in the end, was AI scientist Ilya’s choice the right one, or can Silicon Valley businessman Altman see it through?

There’s no way to know yet. But at the very least, OpenAI now faces a critical choice.

Some industry insiders have pointed to two key signals.

First, ChatGPT is OpenAI’s main source of revenue. Without a better model in reserve, OpenAI would not be giving GPT-4 away to everyone for free;

Second, if the departing team members (Jan, Ilya, and others) were not worried about much more powerful capabilities arriving soon, they would not care so much about alignment… If AI stays at its current level, it basically doesn’t matter.

But OpenAI’s fundamental tension remains unresolved: on one side, AI scientists worried about developing AGI responsibly; on the other, a Silicon Valley market pushing to sustain the technology through commercialization.

Now that the two sides have become irreconcilable and the science camp has been pushed out of OpenAI entirely, the outside world still doesn’t know how far GPT has actually come.

Those eager to know the answer are growing a little weary.

A sense of powerlessness wells up. As Hinton, Ilya’s mentor and one of the three 2018 Turing Award laureates, put it:

I’m old and I’m worried, but I can’t do anything about it.

Reference link:

[1] https://www.vox.com/future-perfect/2024/5/17/24158403/openai-resignations-ai-safety-ilya-sutskever-jan-leike-artificial-intelligence

[2] https://x.com/janleike/status/1791498174659715494

[3] https://twitter.com/sama/status/1791543264090472660