Author: mo. Source: X, @no89thkey. Translation: Shan Oppa, Bitchain Vision.

Let me try to answer this question with a figure:

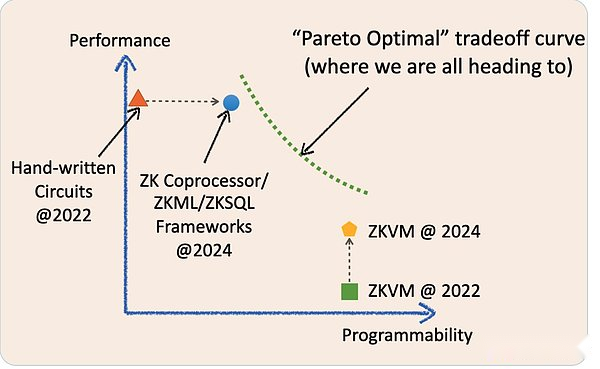

Can we converge on a single, magical best point in the tradeoff plane? No. The future of off-chain verifiable computing is a continuous curve that blurs the boundary between specialized and general-purpose ZK. Let me explain how these terms evolved historically and how they will merge in the future.

Two years ago, "specialized" ZK infrastructure meant low-level circuit frameworks such as Circom, Halo2, and arkworks. ZK applications built with them are essentially handwritten ZK circuits. They are fast and inexpensive for very specific tasks, but they are often hard to develop and maintain. They resemble the application-specific integrated circuits (physical silicon) of today's IC industry, such as NAND flash chips and controller chips.

Over the past two years, however, "specialized" ZK infrastructure has evolved toward more "general" infrastructure.

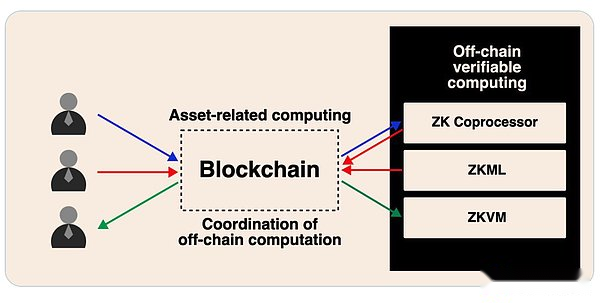

We now have ZKML, ZK coprocessor, and ZKSQL frameworks that provide easy-to-use, highly programmable SDKs for building different categories of ZK applications without writing a single line of ZK circuit code. For example, a ZK coprocessor lets smart contracts trustlessly access historical blockchain states, events, and transactions and run arbitrary computations over that data. ZKML lets smart contracts verifiably use AI inference results from a wide range of machine learning models.

These evolved frameworks significantly improve programmability within their target domains while keeping performance high and cost low, because the abstraction layer (SDK/API) is thin and sits close to the bare-metal circuits. They are like the GPUs, TPUs, and FPGAs of the IC market: programmable, but specialized for a domain.

ZKVMs have also made great progress in the past two years. Notably, every general-purpose ZKVM is built on top of low-level, specialized ZK frameworks. The idea is that you write ZK applications in a high-level language (even more user-friendly than an SDK/API), and they are compiled into a combination of specialized circuits for an instruction set (such as RISC-V or WASM). In our IC analogy, ZKVMs are like CPUs.

A ZKVM is an abstraction layer above the low-level ZK frameworks, just like a ZK coprocessor, though a thicker one.

As a wise man once said, every problem in computer science can be solved by another layer of abstraction, but each layer creates another problem. My friend, trade-offs are the name of the game here. Fundamentally, with a ZKVM we trade performance for generality.

Two years ago, ZKVMs' "bare-metal" performance was really bad. In just two years, however, it has improved significantly. Why?

Because these "general" ZKVMs have become more "specialized"! A key source of the performance gains is "precompiles". Precompiles are specialized ZK circuits for commonly used high-level routines, such as SHA-2 hashing and various signature verifications, and they are much faster than the normal path of breaking those routines down into instruction-level circuits.
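To illustrate why precompiles help, here is a minimal Python cost-model sketch. `ToyZkVm`, the operation name `sha256_block`, and every cost constant are hypothetical, chosen only for illustration; real costs depend entirely on the proof system. The toy VM charges a registered precompile's dedicated-circuit cost when one exists, and otherwise charges for every instruction the operation decomposes into.

```python
from dataclasses import dataclass, field

# Hypothetical costs in abstract "proving units"; not measurements of any real ZKVM.
INSTRUCTION_COST = 1          # cost of proving one generic VM instruction
SHA256_INSTRUCTIONS = 3_000   # hypothetical instruction count for one SHA-256 block in software
SHA256_PRECOMPILE_COST = 100  # hypothetical cost of a dedicated SHA-256 circuit


@dataclass
class ToyZkVm:
    """Hypothetical VM that tracks total proving cost."""
    precompiles: dict[str, int] = field(default_factory=dict)
    cost: int = 0

    def call(self, op: str, instruction_count: int) -> None:
        """Charge the dedicated circuit if one is registered, else the instruction-level path."""
        if op in self.precompiles:
            self.cost += self.precompiles[op]
        else:
            self.cost += instruction_count * INSTRUCTION_COST


def prove_hashes(vm: ToyZkVm, n_blocks: int) -> int:
    """Run the same guest workload (n SHA-256 blocks) and return the accumulated cost."""
    for _ in range(n_blocks):
        vm.call("sha256_block", SHA256_INSTRUCTIONS)
    return vm.cost


plain = prove_hashes(ToyZkVm(), 10)
accelerated = prove_hashes(ToyZkVm(precompiles={"sha256_block": SHA256_PRECOMPILE_COST}), 10)
print(plain, accelerated)  # 30000 1000
```

The guest workload is identical in both runs; only the prover's routing of the hot operation changes, which is why precompiles make a general-purpose VM more "specialized" without changing how programs are written.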

So the trend is already very clear:

Specialized ZK infrastructure is becoming more and more general, and general-purpose ZKVMs are becoming more and more specialized!

For both approaches, optimization over the past few years has meant reaching a better tradeoff than before: doing better on one axis without sacrificing the other. That's why both sides feel that "we are definitely the future."

However, computer science wisdom tells us that at some point we will hit a "Pareto-optimal wall" (the green dotted line), beyond which we cannot improve one property without sacrificing another.
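To make the "Pareto-optimal wall" concrete, here is a minimal Python sketch that computes the Pareto front of a set of design points on two axes, performance and generality. The design names echo the categories above, but every score is invented purely to illustrate the shape of the curve; none is a measurement.

```python
from typing import NamedTuple


class Design(NamedTuple):
    name: str
    performance: float  # higher is better (hypothetical score)
    generality: float   # higher is better (hypothetical score)


def dominates(a: Design, b: Design) -> bool:
    """a dominates b if it is at least as good on both axes and strictly better on one."""
    return (a.performance >= b.performance and a.generality >= b.generality
            and (a.performance > b.performance or a.generality > b.generality))


def pareto_front(designs: list[Design]) -> list[Design]:
    """Return the designs that no other design dominates, sorted by generality."""
    front = [d for d in designs if not any(dominates(o, d) for o in designs)]
    return sorted(front, key=lambda d: d.generality)


# Hypothetical scores, chosen only to illustrate the tradeoff curve.
designs = [
    Design("handwritten circuit", 9.0, 1.0),
    Design("domain SDK (coprocessor / ZKML)", 7.0, 5.0),
    Design("ZKVM with precompiles", 5.0, 8.0),
    Design("early ZKVM", 2.0, 7.0),
    Design("plain ZKVM", 3.0, 9.0),
]
print([d.name for d in pareto_front(designs)])
```

With these made-up numbers, "early ZKVM" drops off the front because "ZKVM with precompiles" beats it on both axes; that kind of move is the progress both camps have enjoyed. Along the remaining front, every step toward generality costs performance, which is the wall in miniature.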

So the million-dollar question is: will one of them eventually replace the other entirely?

If the IC industry analogy helps: the CPU market is worth $126 billion, while the IC industry as a whole, including all the "specialized" chips, is worth $515 billion. I do believe that at a micro level history will rhyme here, and neither will replace the other.

That said, nobody today says, "Hey, I'm using a computer powered entirely by a general-purpose CPU," or "Hey, look at this odd robot powered entirely by specialized ICs."

So we should look at this from a macro perspective: the future is a trade-off curve that lets developers choose flexibly according to their own needs.

In the future, domain-specific ZK infrastructure and general-purpose ZKVMs can and will work together. This can take many forms.

The simplest form is already possible today. For example, you might use a ZK coprocessor to compute some results over a long history of blockchain transactions, while the business logic on top of that data is so complex that you can't easily express it in the SDK/API.

What you can do is obtain high-performance, low-cost ZK proofs of the data and intermediate computations, and then aggregate them into a general-purpose VM through proof recursion.
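The two-stage flow above can be sketched in Python to show the data flow only. Everything here is hypothetical: `Proof`, `mock_prove`, `mock_verify`, the coprocessor function, and the tiering rule are illustrative names, and the "proof" is just a SHA-256 tag binding a statement to its output, with no soundness or zero-knowledge properties. What the sketch does show is the structural point of recursion: the general-purpose VM program verifies the inner proof as part of its own computation, then runs logic that is awkward to express in the specialized SDK.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Proof:
    """Hypothetical stand-in for a ZK proof: a hash binding a statement to its output.
    It is NOT a real proof (no soundness, no zero knowledge); it only models data flow."""
    statement: str
    output: int
    tag: str


def _tag(statement: str, output: int) -> str:
    return hashlib.sha256(f"{statement}|{output}".encode()).hexdigest()


def mock_prove(statement: str, output: int) -> Proof:
    return Proof(statement, output, _tag(statement, output))


def mock_verify(p: Proof) -> bool:
    return p.tag == _tag(p.statement, p.output)


# Stage 1 (hypothetical): a specialized prover such as a ZK coprocessor does the bulky data work.
def coprocessor_sum_volumes(volumes: list[int]) -> Proof:
    return mock_prove("sum of historical transfer volumes", sum(volumes))


# Stage 2 (hypothetical): a general-purpose VM program verifies the inner proof
# (the recursive step), then applies business logic the SDK could not express easily.
def zkvm_guest(inner: Proof, threshold: int) -> Proof:
    if not mock_verify(inner):
        raise ValueError("inner proof rejected")
    tier = 3 if inner.output >= threshold * 10 else 2 if inner.output >= threshold else 1
    return mock_prove(f"tier rule over [{inner.tag[:8]}]", tier)


inner = coprocessor_sum_volumes([120, 480, 400])
outer = zkvm_guest(inner, threshold=500)
print(inner.output, outer.output, mock_verify(outer))  # 1000 2 True
```

In a real system the inner verifier would itself be proven inside the outer circuit or VM, so a single final proof attests to both stages; the mock only mirrors that ordering.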

While I do find these debates interesting, I know we are all building the same future: asynchronous computing for blockchains, powered by off-chain verifiable computing. Once we see use cases adopted by large numbers of users in the coming years, I believe this debate will be easily resolved.