Author: Cynic, Shigeru

Guide: Harnessing algorithms, computing power, and data, advances in AI technology are redefining the boundary between data processing and intelligent decision-making. Meanwhile, DEPIN represents a paradigm shift from centralized infrastructure toward decentralized, blockchain-based networks.

As the world moves toward digital transformation, AI and DEPIN (decentralized physical infrastructure) have become foundational technologies driving change across industries. The fusion of AI and DEPIN can not only accelerate the iteration and adoption of technology, but also enable a more secure, transparent, and efficient service model, bringing far-reaching changes to the global economy.

DEPIN: decentralization, the mainstay of the digital economy

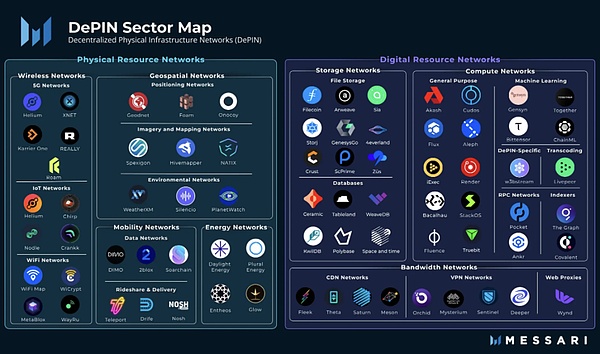

DEPIN is an abbreviation of decentralized physical infrastructure. In a narrow sense, DEPIN mainly refers to distributed networks of traditional physical infrastructure supported by distributed ledger technology, such as power, communication, and positioning networks. In a broad sense, any distributed network backed by physical equipment can be called DEPIN, such as storage networks and computing networks.

From: Messari

If crypto brought decentralization to finance, then DEPIN is a decentralized solution for the real economy. Arguably, a PoW mining machine is itself a form of DEPIN. From day one, DEPIN has been a core pillar of Web3.

The three elements of AI are algorithms, computing power, and data; DEPIN claims two of them

The development of artificial intelligence is usually considered to depend on three key elements: algorithms, computing power, and data. Algorithms are the mathematical models and program logic that drive AI systems; computing power is the computing resources those algorithms require; and data is the basis for training and optimizing AI models.

Which of the three elements matters most? Before ChatGPT appeared, most people would have said algorithms; otherwise academic conferences and journals would not be filled with papers on fine-tuning algorithms. After ChatGPT and the large language models (LLMs) that power it appeared, people began to recognize the importance of the other two. Massive computing power is a prerequisite for producing such models, and data quality and diversity are essential for building a strong, efficient AI system. By comparison, the demand for algorithmic refinement is no longer what it used to be.

In the era of large models, AI has shifted from meticulous craftsmanship to brute force. Demand for computing power and data keeps growing, and that is exactly what DEPIN can provide. Token incentives can unlock the long-tail market, and the massive pool of consumer-grade computing power and storage can become the best nourishment for large models.

Decentralizing AI is not an elective but a requirement

Of course, some will ask: AWS data centers already offer computing power and data, with better stability and user experience than DEPIN, so why choose DEPIN over centralized services?

The argument has merit. After all, almost every large model today is developed directly or indirectly by a large internet company: Microsoft stands behind ChatGPT, and Google behind Gemini. Why? Because only large internet companies have enough high-quality data and the financial resources to pay for massive computing power. But this is not how it should be; people no longer want to be manipulated by internet giants.

On the one hand, centralized AI carries data privacy and security risks and may be subject to censorship and control; on the other hand, AI built by internet giants deepens people's dependence on them, concentrates the market, and raises barriers to innovation.

From: https://www.gensyn.ai//

Humanity should not need a Martin Luther of the AI age; people should have the right to talk to God directly.

DEPIN from a business perspective: cost reduction and efficiency are key

Even setting aside the debate between decentralized and centralized values, using DEPIN for AI still makes sense from a business perspective.

First, we need to recognize that although internet giants control large numbers of high-end GPUs, the consumer GPUs scattered among ordinary users can together form a very considerable computing network: the long-tail effect of computing power. The idle rate of these consumer GPUs is actually very high, so as long as the incentives DEPIN offers exceed the electricity bill, owners have a reason to contribute to the network. Moreover, because all physical hardware is managed by the users themselves, a DEPIN network does not bear the operating costs that centralized suppliers cannot avoid and can focus on the design of the network itself.
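The participation rule above is simple arithmetic: an owner contributes idle compute when the reward for an hour of work beats that hour's electricity cost. The sketch below makes that concrete; the power draw, electricity price, and reward figures are made-up assumptions for illustration, not data from any real network.

```python
def hourly_electricity_cost(power_watts: float, price_per_kwh: float) -> float:
    """Cost of running a device drawing `power_watts` for one hour."""
    return power_watts / 1000.0 * price_per_kwh


def should_contribute(reward_per_hour: float, power_watts: float, price_per_kwh: float) -> bool:
    """Hypothetical rule: contribute only if the token reward exceeds the power bill."""
    return reward_per_hour > hourly_electricity_cost(power_watts, price_per_kwh)


# Assumed example: a 450 W consumer GPU, electricity at 0.15 per kWh.
cost = hourly_electricity_cost(450, 0.15)
print(round(cost, 4))                        # 0.0675
print(should_contribute(0.10, 450, 0.15))    # True: reward covers the power bill
print(should_contribute(0.05, 450, 0.15))    # False: reward too low
```

Real decisions would also weigh hardware wear and opportunity cost; the sketch keeps only the electricity term the text mentions.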

On the data side, DEPIN networks can unlock latent data through edge computing and similar methods while reducing transmission costs. In addition, most distributed storage networks deduplicate data automatically, which reduces the work needed to clean AI training data.
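Automatic deduplication in many distributed storage systems comes from content addressing: a block's key is the hash of its bytes, so identical content maps to the same key and is stored once. The toy store below illustrates that idea under simplifying assumptions (whole items rather than chunks, no replication); it is not the implementation of any particular network.

```python
import hashlib


class ContentAddressedStore:
    """Toy content-addressed store: each item is keyed by the SHA-256 of its bytes."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        # setdefault keeps an existing block, so a duplicate adds no new storage.
        self.blocks.setdefault(key, data)
        return key

    def get(self, key: str) -> bytes:
        return self.blocks[key]


store = ContentAddressedStore()
samples = [b"cat photo", b"dog photo", b"cat photo"]  # one duplicated training sample
keys = [store.put(s) for s in samples]
print(len(samples), "samples ->", len(store.blocks), "stored blocks")  # 3 samples -> 2 stored blocks
```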

Finally, the cryptoeconomic incentives that DEPIN brings widen the system's margin for fault tolerance and can create a win-win situation for providers, consumers, and platforms.

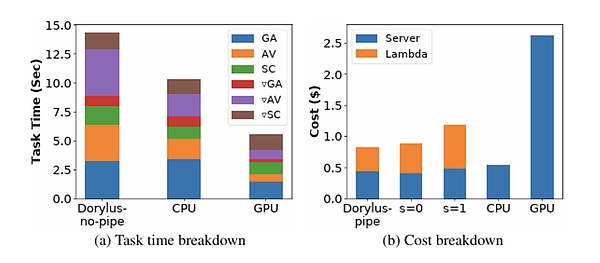

From: UCLA

In case you don't believe it, recent research from UCLA shows that, at the same cost, decentralized computing achieved 2.75 times the performance of a traditional GPU cluster; specifically, it was 1.22 times faster and 4.83 times cheaper.

What challenges will AI x DEPIN face?

We choose to go to the Moon in this decade and do the other things, not because they are easy, but because they are hard.

——John Fitzgerald Kennedy

Using DEPIN's distributed storage and distributed computing to build AI models trustlessly still faces many challenges.

Work verification

In essence, training deep learning models and PoW mining are both general-purpose computation; at the lowest level, both come down to signal changes in logic gates. At the macro level, PoW mining is “useless computation”: through countless random-number draws and hash computations, miners try to find a hash value with n leading zeros. Deep learning, by contrast, is “useful computation”: forward and backward passes compute the parameter values of each layer, building an efficient AI model.
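The “useless computation” of PoW can be sketched in a few lines: try nonces until the SHA-256 hex digest starts with n zeros. This is a simplified illustration, assuming sequential rather than random nonces and a hex-prefix target; Bitcoin itself uses double SHA-256 compared against a numeric target. Note that checking a found solution costs just one hash.

```python
import hashlib
from itertools import count


def pow_hash(block_data: bytes, nonce: int) -> str:
    """SHA-256 of the block data plus an 8-byte nonce, as a hex string."""
    return hashlib.sha256(block_data + nonce.to_bytes(8, "big")).hexdigest()


def mine(block_data: bytes, n_zeros: int) -> tuple[int, str]:
    """Try nonces one by one until the hex digest starts with n_zeros '0' characters."""
    target = "0" * n_zeros
    for nonce in count():
        digest = pow_hash(block_data, nonce)
        if digest.startswith(target):
            return nonce, digest
    raise RuntimeError("unreachable: count() never ends")


def verify(block_data: bytes, nonce: int, n_zeros: int) -> bool:
    """Verifying a claimed solution costs a single hash."""
    return pow_hash(block_data, nonce).startswith("0" * n_zeros)


nonce, digest = mine(b"example block", n_zeros=4)  # about 16**4 = 65,536 tries on average
print(nonce, digest)
print(verify(b"example block", nonce, n_zeros=4))  # True
```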

The crucial difference is that “useless computation” such as PoW mining relies on hash functions, for which computing the hash from the input is easy but recovering the input from the hash is hard, so a claimed solution can be checked with a single hash. In deep learning, by contrast, the layered structure means each layer's output is the next layer's input, so verifying a computation requires redoing all the preceding work and cannot be done simply and efficiently.
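To see why layered computation resists cheap checking, consider the tiny pure-Python network below. Without some extra certificate, the only way to check a claimed output is to rerun every layer, which costs as much as the original work. The layer sizes, random weights, and ReLU activation are arbitrary assumptions chosen for illustration.

```python
import random

Vector = list[float]
Matrix = list[list[float]]


def dense_relu(x: Vector, w: Matrix) -> Vector:
    """One fully connected layer followed by ReLU."""
    return [max(0.0, sum(wij * xj for wij, xj in zip(row, x))) for row in w]


def forward(x: Vector, weights: list[Matrix]) -> Vector:
    for w in weights:
        x = dense_relu(x, w)  # layer k consumes layer k-1's output
    return x


def verify_by_recompute(x: Vector, weights: list[Matrix], claimed: Vector, tol: float = 1e-9) -> bool:
    """The naive check: recompute everything, at the same cost as the original run."""
    recomputed = forward(x, weights)
    return len(recomputed) == len(claimed) and all(
        abs(a - b) <= tol for a, b in zip(recomputed, claimed)
    )


rng = random.Random(0)
weights = [[[rng.uniform(-1, 1) for _ in range(4)] for _ in range(4)] for _ in range(3)]
x = [0.5, -0.2, 0.1, 0.9]
honest = forward(x, weights)
fabricated = [rng.random() for _ in range(4)]  # a "result" produced without computing
print(verify_by_recompute(x, weights, honest))      # True
print(verify_by_recompute(x, weights, fabricated))  # False
```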

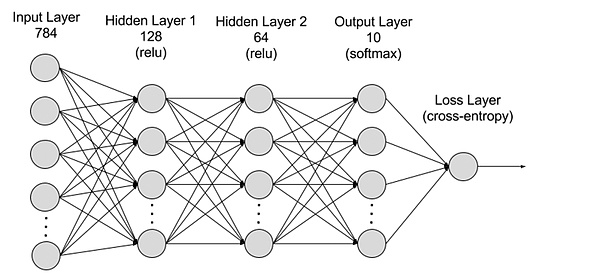

From: AWS

Work verification is critical; without it, a compute provider could skip the computation and submit a randomly generated result.

One approach is to have several servers perform the same computing task and verify the work by checking whether their results match. However, most model computations are non-deterministic: even in an identical computing environment the exact results cannot be reproduced, only statistically similar ones. In addition, redundant computation drives costs up rapidly, which conflicts with DEPIN's key goal of reducing costs and improving efficiency.
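One common source of this non-determinism is that floating-point addition is not associative, so two workers reducing the same numbers in a different order (as parallel GPU reductions may) can produce slightly different bits. The sketch below shows the effect and a tolerance-based comparison; the `rel_tol` value is an arbitrary assumption, and real systems must pick thresholds suited to their models.

```python
import math


def reduce_sum(values) -> float:
    """Sum values in the given order, as one worker's reduction might."""
    total = 0.0
    for v in values:
        total += v
    return total


def results_match(a: float, b: float, rel_tol: float = 1e-9) -> bool:
    """Replication check with a tolerance instead of bit-exact equality."""
    return math.isclose(a, b, rel_tol=rel_tol)


values = [0.1, 0.2, 0.3]
worker_a = reduce_sum(values)            # (0.1 + 0.2) + 0.3
worker_b = reduce_sum(reversed(values))  # (0.3 + 0.2) + 0.1
print(worker_a == worker_b)               # False: bit-exact comparison fails
print(results_match(worker_a, worker_b))  # True: equal within tolerance
```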

Another approach is an optimistic mechanism: results are first assumed to have been computed correctly, while anyone is allowed to check them. If a result is wrong, the checker can submit a fraud proof and is rewarded.
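A minimal sketch of the optimistic flow follows: a claim is accepted by default, anyone may re-run the task before a deadline, and a mismatch counts as a successful fraud proof. The challenge deadline, the solver's stake, and paying that stake to the challenger as the reward are all hypothetical design assumptions; the task is a deterministic toy so results compare exactly.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    task_input: int
    claimed_result: int
    solver: str
    stake: float
    deadline: int  # hypothetical: last time step at which challenges are accepted


def run_task(task_input: int) -> int:
    """Toy stand-in for an expensive job (deterministic, so results compare exactly)."""
    return task_input ** 2


def challenge(claim: Claim, now: int, challenger: str, balances: dict[str, float]) -> bool:
    """A checker re-runs the task; a mismatch is a successful fraud proof."""
    if now > claim.deadline:
        return False  # window closed: the result is final
    if run_task(claim.task_input) == claim.claimed_result:
        return False  # result is correct: the challenge fails
    # Assumption: the dishonest solver's stake is paid to the challenger as the reward.
    balances[challenger] = balances.get(challenger, 0.0) + claim.stake
    claim.stake = 0.0
    return True


balances: dict[str, float] = {}
honest = Claim(7, 49, "solver_a", stake=10.0, deadline=100)
cheat = Claim(7, 50, "solver_b", stake=10.0, deadline=100)
print(challenge(honest, now=50, challenger="alice", balances=balances))  # False
print(challenge(cheat, now=50, challenger="alice", balances=balances))   # True
print(balances)                                                          # {'alice': 10.0}
```

The appeal of this design is that honest results cost nothing extra to verify; checking effort is spent only when someone suspects fraud.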

Parallelization

As mentioned earlier, DEPIN mainly taps the long-tail market of consumer-grade computing power, which means each device can contribute only limited computing power. Training a large AI model on a single such device would take a very long time, so parallelization is needed to shorten training time.

The main difficulty in parallelizing deep learning training is the dependency between successive tasks, which makes them hard to run in parallel.

At present, approaches to parallelizing deep learning training fall mainly into data parallelism and model parallelism.

Data parallelism distributes the data across multiple machines. Each machine holds all of the model's parameters and trains on its local data, and the parameters from all machines are finally aggregated. Data parallelism works well when the dataset is large, but the machines must synchronize to aggregate parameters.
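Data parallelism can be sketched with a one-parameter linear model: every worker holds the full model, computes a gradient on its own shard, and the averaged gradient updates the shared weight. This is a toy under stated assumptions (two equal-sized shards, so the average equals the full-batch gradient; made-up data with true weight 3; the loop over workers stands in for truly parallel execution).

```python
def local_gradient(w: float, shard: list[tuple[float, float]]) -> float:
    """Gradient of mean squared error on one shard: d/dw mean((w*x - y)^2)."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w: float, shards: list[list[tuple[float, float]]], lr: float) -> float:
    grads = [local_gradient(w, shard) for shard in shards]  # one per worker, in parallel in practice
    avg_grad = sum(grads) / len(grads)                       # the synchronization point
    return w - lr * avg_grad


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data with true w = 3
shards = [data[0:4], data[4:8]]                    # two workers, equal-sized shards
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
print(round(w, 4))  # 3.0
```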

Model parallelism is used when the model is too large to fit on a single machine: the model is split across multiple machines, each holding part of its parameters. The machines must communicate during both forward and backward propagation. Model parallelism has the advantage when the model is large, but the communication overhead during propagation is high.
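The sketch below illustrates model parallelism with a four-layer toy model split across two hypothetical machines. Each machine stores only its own layers' parameters, and every hop between machines is an activation that must cross the network. Only the forward pass is shown; in training, gradients would flow back across the same boundary. Each layer is assumed to be the scalar map y = a * x + b purely for readability.

```python
Layer = tuple[float, float]  # parameters (a, b) of a toy layer y = a * x + b


class Device:
    """A hypothetical machine that stores only its own slice of the model's layers."""

    def __init__(self, name: str, layers: list[Layer]) -> None:
        self.name = name
        self.layers = layers

    def forward(self, x: float) -> float:
        for a, b in self.layers:
            x = a * x + b
        return x


def model_parallel_forward(x: float, devices: list[Device], transfers: list) -> float:
    """Run the stages in order, logging every activation sent between machines."""
    for i, device in enumerate(devices):
        x = device.forward(x)
        if i + 1 < len(devices):
            transfers.append((device.name, devices[i + 1].name, x))
    return x


stage0 = Device("machine_0", [(1.0, 1.0), (2.0, 0.0)])   # layers 1-2
stage1 = Device("machine_1", [(1.0, -3.0), (3.0, 0.0)])  # layers 3-4
transfers: list = []
out = model_parallel_forward(5.0, [stage0, stage1], transfers)
print(out)        # 27.0
print(transfers)  # [('machine_0', 'machine_1', 12.0)]
```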

Gradient information can be exchanged through synchronous or asynchronous updates. Synchronous updates are simple and direct but increase waiting time; asynchronous update algorithms wait less but introduce stability problems.
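The waiting-time trade-off can be quantified with some back-of-the-envelope arithmetic. In synchronous mode every round lasts as long as the slowest worker; in asynchronous mode each worker pushes gradients as soon as it finishes. The per-step times below are hypothetical, and the sketch deliberately ignores the stability cost of asynchronous updates (gradients computed on stale parameters).

```python
# Hypothetical per-step compute times (seconds) for three heterogeneous consumer GPUs.
step_times = {"worker_a": 1.0, "worker_b": 1.25, "worker_c": 2.5}


def sync_rounds(step_times: dict[str, float], wall_clock: float) -> int:
    """Synchronous: each round waits for the slowest worker, then applies one averaged update."""
    return int(wall_clock // max(step_times.values()))


def sync_idle_time(step_times: dict[str, float], rounds: int) -> float:
    """Total time faster workers spend waiting for the slowest one."""
    slowest = max(step_times.values())
    return rounds * sum(slowest - t for t in step_times.values())


def async_updates(step_times: dict[str, float], wall_clock: float) -> int:
    """Asynchronous: every worker updates independently, with no waiting."""
    return sum(int(wall_clock // t) for t in step_times.values())


rounds = sync_rounds(step_times, 10.0)
print(rounds)                              # 4
print(sync_idle_time(step_times, rounds))  # 11.0
print(async_updates(step_times, 10.0))     # 22 (10 + 8 + 4)
```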

From: Stanford University, Parallel and Distributed Deep Learning

Privacy

A wave of personal privacy protection is sweeping the world, and governments everywhere are strengthening protections for personal data privacy and security. Although AI relies heavily on public datasets, what sets different AI models apart is proprietary user data.

How can training benefit from proprietary data without exposing private information? And how can the parameters of the resulting AI model be kept from leaking?

These are the two aspects of privacy: data privacy and model privacy. Data privacy protects users, while model privacy protects the organization that builds the model. In current scenarios, data privacy matters much more than model privacy.

Various solutions are trying to solve the privacy problem. Federated learning trains at the data source: data stays local and only model parameters are transmitted, which preserves data privacy. Zero-knowledge proofs may become a rising star.
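Federated learning can be sketched in the style of Federated Averaging (FedAvg): each client trains locally on data that never leaves it, sends back only its updated parameter, and the server averages the updates weighted by client dataset size. The one-parameter model, learning rate, epoch and round counts, and client data below are illustrative assumptions.

```python
Dataset = list[tuple[float, float]]


def local_train(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Client-side gradient descent on y ≈ w * x; the raw data stays on the client."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(global_w: float, clients: list[Dataset], rounds: int = 20) -> float:
    for _ in range(rounds):
        # Each client returns only (updated parameter, number of samples).
        updates = [(local_train(global_w, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total  # weighted by dataset size
    return global_w


clients = [
    [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)],  # client 1's private data
    [(4.0, 8.0), (5.0, 10.0)],             # client 2's private data
]
global_w = fed_avg(0.0, clients)
print(round(global_w, 4))  # 2.0
```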

Case analysis: what are the high-quality projects on the market?

Gensyn

Gensyn is a distributed computing network for training AI models. The network uses a Polkadot-based layer-1 blockchain to verify that deep learning tasks have been executed correctly and to trigger payments through commands. Founded in 2020, Gensyn disclosed a $43 million Series A round led by a16z in June 2023.

Gensyn uses metadata from the gradient-based optimization process to construct certificates of the work performed, and uses a multi-granular, graph-based pinpoint protocol together with cross-evaluator consistent execution so that verification work can be re-run and compared for consistency, with the result ultimately confirmed by the chain itself to ensure the validity of the computation. To further strengthen the reliability of work verification, Gensyn introduces staking to create incentives.

There are four types of participants in the system: submitters, solvers, verifiers, and whistleblowers.

• Submitters are the end users of the system: they provide tasks to be computed and pay for completed units of work.

• Solvers are the main workers of the system: they execute model training and generate proofs for verification.

• Verifiers are key to linking the non-deterministic training process to a deterministic linear computation: they replicate parts of the solvers' proofs and compare the distances against expected thresholds.

• Whistleblowers are the last line of defense: they check the verifiers' work and raise challenges.

Solvers must stake, and whistleblowers check the solvers' work.
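The verifier's role described above (replicating part of a solver's proof and comparing the distance against a threshold) can be illustrated with checkpoint replay. In this hypothetical sketch, which is not Gensyn's actual protocol, the solver records a weight checkpoint after each training segment, and a verifier re-runs one randomly chosen segment. Staking and whistleblowers are not modeled. The toy training here is deterministic, so honest replays match exactly; the threshold exists because real training is not.

```python
import random

Data = list[tuple[float, float]]


def train_segment(w: float, data: Data, steps: int, lr: float = 0.01) -> float:
    """Deterministic toy SGD for y ≈ w * x, visiting samples in a fixed order."""
    for i in range(steps):
        x, y = data[i % len(data)]
        w -= lr * 2 * (w * x - y) * x
    return w


def solver_run(w0: float, data: Data, segments: int, steps: int) -> list[float]:
    """The solver trains and records a checkpoint after every segment."""
    checkpoints = [w0]
    for _ in range(segments):
        checkpoints.append(train_segment(checkpoints[-1], data, steps))
    return checkpoints


def verifier_check(checkpoints: list[float], data: Data, steps: int,
                   threshold: float, rng: random.Random) -> bool:
    """Replay one randomly chosen segment and compare the distance to a threshold."""
    i = rng.randrange(len(checkpoints) - 1)
    replayed = train_segment(checkpoints[i], data, steps)
    return abs(replayed - checkpoints[i + 1]) <= threshold


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
rng = random.Random(42)
honest = solver_run(0.0, data, segments=10, steps=3)
forged = [0.0] + [5.0] * 10  # a lazy solver that never trained
print(all(verifier_check(honest, data, 3, 1e-6, rng) for _ in range(5)))  # True
print(verifier_check(forged, data, 3, 1e-6, rng))                         # False
```

Spot-checking one segment costs a fraction of the full run, which is the point of the design: verification becomes cheaper than recomputation, at the price of catching fraud only probabilistically when only some checkpoints are forged.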

Gensyn projects that its approach could reduce training costs to one-fifth of those of centralized providers.

From: Gensyn

FedML

FedML is a decentralized, collaborative machine learning platform for decentralized and collaborative AI anywhere, at any scale. More specifically, FedML provides an MLOps ecosystem for training, deploying, monitoring, and continuously improving machine learning models, while enabling collaboration on combined data, models, and computing resources in a privacy-preserving way. Founded in 2022, FedML disclosed a $6 million seed round in March 2023.

FedML consists of two key components, FedML-API and FedML-Core, which provide the high-level API and the low-level API respectively.

FedML-Core includes two independent modules: distributed communication and model training. The communication module handles low-level communication between workers/clients and is based on MPI; the model training module is based on PyTorch.

FedML-API is built on FedML-Core. With FedML-Core, new distributed algorithms can be implemented easily through client-oriented programming interfaces.

The FedML team's latest work shows that running AI model inference with FedML Nexus AI on the consumer-grade RTX 4090 GPU is 20 times cheaper than on an A100 and 1.88 times faster.

From: FedML

Future Outlook: DEPIN democratizes AI

If AI one day develops into AGI, computing power will become a de facto universal currency, and DEPIN brings that future forward.

The integration of AI and DEPIN opens a new point of technological growth and offers huge opportunities for the development of artificial intelligence. DEPIN provides AI with large amounts of distributed computing power and data, which helps train larger models and achieve stronger intelligence. DEPIN also steers AI in a more open, secure, and reliable direction, reducing dependence on any single centralized infrastructure.

Looking ahead, AI and DEPIN will keep evolving. Distributed networks will provide a strong foundation for training very large models, and those models will in turn play an important role in DEPIN applications. While protecting privacy and security, AI will also help optimize DEPIN network protocols and algorithms. We look forward to AI and DEPIN bringing a more efficient, fairer, and more trustworthy digital world.