In 2025, Shengliang Lu and co-authors published the white paper “AI Applications in Web3 SupTech and RegTech: A Regulatory Perspective”. It observes that the digital landscape is undergoing a profound transformation driven by the rise of Web3 technology and virtual assets. This new phase of Internet technology uses distributed ledger technology and smart contracts to promote decentralization, increase transparency and reduce dependence on intermediaries. These innovations are central to decentralized finance (DeFi). However, the rapid adoption of Web3 technology also brings significant risks, underscored by a series of high-profile failures and systemic vulnerabilities. Through its Financial Services Regulatory Authority (FSRA), Abu Dhabi Global Market (ADGM) has established an advanced regulatory framework that is transparent and aligned with international standards, creating a favorable regulatory environment that protects the interests of stakeholders. The white paper explores the integration of artificial intelligence (AI) into regulatory technologies to enhance compliance monitoring and risk management. It details the research and development work of the Asian Institute of Digital Finance at the National University of Singapore (NUS AIDF), the ADGM FSRA and the ADGM Academy Research Center. It concludes by summarizing the main findings and proposing directions for future cooperation to further improve the regulatory landscape. The Institute of Financial Technology of Renmin University of China compiled the core part of the research.

1. Introduction

As Web3 technology drives the next stage of the Internet, the digital landscape is undergoing rapid transformation. Web3 is built on distributed ledger technology (DLT) and smart contracts, and it emphasizes decentralization, greater transparency and reduced dependence on intermediaries. DLT, including blockchain, provides secure and tamper-resistant ledgers for transactions and data, while smart contracts enable automated agreements without intermediaries. This combination supports the development of decentralized applications (dApps), especially in decentralized finance (DeFi), which are reshaping financial transactions through peer-to-peer interaction. Global cryptocurrency market capitalization has exceeded the $3 trillion mark, comparable to the market value of some of the world’s largest companies, including Apple and Microsoft. The cryptocurrency user base has also expanded significantly, growing by 34% in 2023 alone, from 432 million in January to 580 million in December. This growth highlights the increasing adoption and integration of cryptocurrencies in the global financial landscape. In addition, data shows that the United Arab Emirates (UAE) leads the world in cryptocurrency adoption, with more than 30% of its population (about 3 million people) owning digital assets. This reflects the country’s forward-looking acceptance of fintech and its ambition to become a major fintech hub.

ADGM plays a key role in this rapidly evolving financial landscape. As the body that oversees financial services in the international financial center and free zone, the ADGM Financial Services Regulatory Authority (FSRA) has been at the forefront of creating a regulatory environment that supports both the growth of DeFi and virtual assets (VA) and the broader digital transformation of the financial services sector. Since launching its comprehensive regulatory framework for virtual assets in 2018, the FSRA has continuously refined it. The framework ensures strong oversight and is consistent with international standards while supporting innovation. In embracing digital transformation, ADGM works closely with technology ecosystem partners such as Hub71 and research institutions such as the National University of Singapore to promote the adoption of cutting-edge technology solutions within ADGM. This proactive approach helps position Abu Dhabi as a preferred destination for financial companies seeking to leverage advanced technology and digital financial models.

To further enhance its regulatory capabilities, the FSRA is leveraging advances in RegTech and SupTech to streamline regulatory and supervisory processes. Through AI-powered RegTech solutions, the FSRA can offer more interactive and tailored regulatory engagement, making compliance more efficient and convenient for entities operating within ADGM. AI-powered SupTech tools support the FSRA’s supervisory and risk management goals while reducing costs for financial institutions. Together, these initiatives reflect the FSRA’s mission to provide a transparent, efficient and advanced financial environment that protects the interests of customers, investors and industry participants while promoting the sustainable growth and innovation of ADGM.

Supervisory Technology (SupTech) refers to the application of technology to enhance the supervisory and oversight functions of regulatory authorities. It involves the use of advanced tools such as data analytics, artificial intelligence and automation to improve the monitoring and oversight of regulated activities, as well as the implementation of regulatory frameworks. SupTech aims to give regulators more effective, data-driven insights that enable them to better identify problems, assess risks and enforce regulations in real time.

Regulatory Technology (RegTech) refers to the use of technology to simplify, automate and improve regulatory compliance processes for enterprises. It leverages tools such as artificial intelligence, machine learning, automation and data analytics to help companies meet regulatory requirements more effectively, reduce compliance costs, and enhance transparency and reporting quality. RegTech aims to simplify complex compliance tasks such as monitoring transactions, identifying risks, and ensuring adherence to legal standards.

New risks arising from the characteristics of Web3 technology, such as the failure of blockchain protocols like Terra (LUNA) and emerging vulnerabilities in smart contracts, highlight the need for effective regulatory frameworks and risk management strategies. The innovative and decentralized nature of blockchain technology provides a breeding ground for new types of fraud and systemic failure, and these problems must be addressed before the technology can be widely adopted. As one response, ADGM is exploring the application of artificial intelligence to RegTech and SupTech solutions to improve compliance monitoring and risk management. The Asian Institute of Digital Finance at the National University of Singapore (NUS AIDF) conducts fintech research in artificial intelligence, providing tools for predictive analysis, anomaly detection and automated compliance. The FSRA is testing and validating these AI technologies to meet the emerging need to regulate and supervise the Web3 and virtual asset ecosystem effectively. This white paper summarizes the research and development work of NUS AIDF and ADGM (including the FSRA and the ADGM Academy Research Center) on applying artificial intelligence to support regulatory and supervisory activities in the Web3 and virtual asset sectors.

Since this article is intended for a wider audience and does not aim to provide precise definitions, readers should note that terms such as “virtual assets,” “Web3,” “blockchain,” “DLT” and “network” are used interchangeably in the text. Nevertheless, some terms are explained in Section 2.

The rest of this article is structured as follows. Section 2 introduces the background and scope of this article, and Section 3 discusses the opportunities for regulators to make use of artificial intelligence. Section 4 explores the AI innovations that are shaping regulatory actions and activities. Section 5 examines pilot projects conducted by NUS AIDF and ADGM, demonstrating practical applications of these innovations such as AI-powered smart contract assessment, security audits and due diligence. Section 6 concludes by summarizing the research findings and exploring future directions and potential areas for strengthening the regulatory landscape.

2. Background

This section explains the key terms used in this article to lay the foundation for the discussion in subsequent sections.

Virtual Asset. The FSRA’s regulatory framework divides digital assets into different categories, including fiat-referenced tokens and digital securities. A virtual asset is a digital representation of value that can be traded digitally and functions as (1) a medium of exchange; and/or (2) a unit of account; and/or (3) a store of value, but does not have fiat currency status in any jurisdiction. Virtual assets (a) are neither issued nor guaranteed by any jurisdiction, and fulfil the above functions only by agreement within the community of users of the virtual asset; and (b) are distinguished from fiat currency and electronic money.

Web3. Web3 represents the next evolution of the Internet, moving from “read” (Web1) and “read-write” (Web2) to “read-write-own”. Unlike Web2’s centralized platforms, Web3 uses blockchain technology to give users real ownership of their data, digital assets and online interactions. This decentralized paradigm reduces dependence on intermediaries and promotes greater user autonomy and privacy, while redefining the way individuals interact with digital platforms.

Distributed ledger technology (DLT) and blockchain networks. DLT is a digital system for recording asset transactions in which the data is stored on multiple sites or nodes simultaneously. Unlike traditional centralized databases, DLT operates without a central authority, which enhances transparency and security. Each participant in the network maintains a synchronized copy of the ledger, reducing the risk of a single point of failure. Blockchain is a specific type of DLT that organizes data into blocks and links them cryptographically into a chain in chronological order. This structure makes recorded data tamper-evident: altering an earlier block breaks the links to every block after it. Virtual assets are usually built on a blockchain network. In Web3, DLT and blockchain networks power DeFi platforms and decentralized applications (dApps) by enabling secure and transparent transactions.
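To make the chaining idea concrete, here is a minimal sketch of hash-linked blocks. It is an illustration only, not the design of any particular blockchain: the block layout, the `build_chain` and `is_valid` helpers, and the sample records are all hypothetical, and real networks add consensus, signatures and much more.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records into a chain in the order given."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash and check that each block points at its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


chain = build_chain(["A pays B 5", "B pays C 2", "C pays A 1"])
```

Editing the data of any earlier block changes its recomputed hash, so `is_valid` returns `False` unless every later block is rewritten as well, which is what makes tampering detectable.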

Decentralized Finance (DeFi). DeFi refers to a financial ecosystem built on blockchain and DLT that enables peer-to-peer transactions and services without traditional intermediaries such as banks or other financial institutions. DeFi applications use smart contracts (self-executing programs on a blockchain network) to automate and execute financial operations such as lending, trading and investment.

Artificial Intelligence (AI). Broadly speaking, artificial intelligence refers to a collection of technologies that enable machines or systems to understand, learn, act, reason and perceive like humans. AI systems use algorithms, data and computing power to adapt and improve continuously. The surge in AI tools in recent years has made it possible for the financial industry to integrate these capabilities into a variety of use cases. AI brings significant benefits, including improved operational efficiency, enhanced regulatory compliance, personalized financial products and advanced data analytics. The FSRA launched an initiative called OpenReg as early as 2022 to make regulatory content machine-readable. The project enables RegTech companies and the data science community to use this AI training ground to build the next generation of AI-enabled RegTech solutions.

In this article, as part of the FSRA’s ongoing effort to incorporate AI into its supervisory approach, we describe the practice of adopting AI in RegTech and SupTech for Web3 regulatory activities. In doing so, we considered the insights in a recent report by the Financial Stability Board (FSB), the regulatory principles outlined in the EU’s Artificial Intelligence Act, and the risk framework developed by Project MindForge.

3. Opportunities to leverage artificial intelligence to regulate Web3 activities

Because of unique features such as blockchain technology, smart contracts and the speed of Web3 innovation, the regulatory framework for Web3 differs in subtle ways from traditional regulation. Globally, recent regulatory attention on Web3 has focused mainly on virtual assets and their trading platforms. This includes the implementation of anti-money laundering (AML) measures, such as the integration of Know Your Transaction (KYT) solutions and “Travel Rule” requirements; the establishment of prudential guidance for stablecoin issuers; and, more recently, the regulation of decentralized, ownerless entities such as DLT foundations and decentralized autonomous organizations (DAOs). These efforts to establish regulatory frameworks and impose safeguards that protect customers and investors demonstrate a growing acceptance of virtual assets and Web3. When examining the inherent characteristics of Web3 and virtual assets from the perspective of a financial regulator, the following points (among others) must be considered:

» They operate continuously, 24/7, with minimal manual supervision, through self-executing smart contracts on DLT;
» Security risks are heightened by vulnerabilities in smart contract code, potential exploits, and dependence on decentralized networks;
» They introduce “new” concepts that either use blockchain innovation to transform existing traditional financial frameworks or propose novel ideas with no historical precedent;
» The decentralized nature of Web3 ensures the immutability of transactions and smart contracts, which enhances trust and transparency but also makes it difficult to handle errors such as “fat finger” mistakes, hacks or unintended consequences.

Regulating Web3 activities presents challenges that call for innovative regulatory approaches and new tools to strengthen oversight, monitoring and enforcement capabilities. However, these challenges also provide important opportunities to shape a better future for the Web3 ecosystem.

Fast-paced innovation and risk identification. The innovative nature and rapid pace of Web3 technology make it challenging to identify and mitigate emerging risks in a timely manner. This dynamic environment demands greater responsiveness in regulatory processes and frameworks, so that regulators remain agile and able to identify, evaluate and respond to potential risks effectively.

A gap in responsiveness increases the likelihood of fraud and market failure. However, these regulatory challenges also create opportunities to build frameworks “from scratch” that integrate forward-looking principles and can be adjusted over time. This can encourage the development of efficient business models adapted to the distinctive features of Web3 and ultimately cultivate a stable and vibrant market that meets regulatory goals and promotes industry growth. Artificial intelligence can help regulators keep pace with Web3 developments by quickly identifying areas for improvement in regulatory rulebooks, thereby supporting the investigation of related issues and the building of regulatory frameworks.

Advanced real-time risk monitoring. Effective risk monitoring in the Web3 ecosystem requires advanced tools that can analyze massive amounts of blockchain data in real time. Because DLT and smart contracts operate continuously, 24/7, traditional point-in-time regulatory methods often struggle with the volume and complexity of the data generated by exchanges. Regulators therefore urgently need more sophisticated analytical tools. Continuous monitoring systems and automated risk management tools help monitor regulatory compliance and enable a proactive response to potential threats.

The complexity of jurisdiction. The decentralized nature of Web3 activities often poses cross-jurisdictional challenges for regulatory approaches. Because regulators may take different approaches to the governance of virtual assets, companies may find it difficult and expensive to remain compliant under multiple, sometimes conflicting, regulatory requirements, which increases the incentive for regulatory arbitrage. AI-powered RegTech tools have the potential to help companies simplify and manage these complexities. By automating routine compliance tasks, identifying overlapping regulatory requirements, adapting more effectively to new rules, and assisting with regulatory reporting, AI can reduce costs and operational burdens, ultimately making it easier for companies to meet different regulatory expectations. In the following sections, we explore the benefits of using artificial intelligence in regulatory processes across a variety of scenarios.

4. Artificial Intelligence Innovation

Artificial intelligence has made significant progress, changing how industries of all kinds operate and innovate. In the fields of Web3 and virtual assets (VA), artificial intelligence has the potential to greatly improve regulatory supervision and compliance efficiency. This section provides an overview of emerging AI technologies and explores how AI innovation may reshape the regulatory environment for Web3. It first briefly introduces widely used AI models (limited to those with broad application potential in the regulatory field), and then explores use cases for adopting these AI technologies in regulatory activities. Before considering possible directions for future development, it also discusses the main challenges of leveraging artificial intelligence.

4.1 Emerging artificial intelligence technologies

Machine Learning (ML). Machine learning is a subset of artificial intelligence that focuses on making predictions or decisions based on data. Machine learning algorithms are good at analyzing large amounts of transaction data to detect patterns and anomalies that may indicate fraudulent activity or compliance issues. By applying supervised, unsupervised and reinforcement learning techniques, machine learning models can adapt and improve over time, giving regulators powerful tools to improve monitoring efficiency and accuracy without continuous human supervision.
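As a rough illustration of anomaly detection on transaction data, the sketch below flags outlying amounts with a robust statistical rule (the modified z-score based on the median absolute deviation). This is a simple unsupervised baseline standing in for a trained ML model, not the method used in the white paper; the function name, the threshold of 3.5 and the sample amounts are assumptions made for the example.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold.

    The modified z-score uses the median and the median absolute deviation (MAD),
    so a single extreme value does not distort the baseline the way a mean would.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # All values (or at least half of them) are identical; nothing to score against.
        return []
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical transaction amounts; one transfer is far larger than the rest.
amounts = [102.0, 98.5, 101.2, 99.8, 100.4, 5000.0, 97.9, 100.9]
suspicious = flag_anomalies(amounts)
```

In practice such a rule would typically be one feature among many, with flagged items routed to a human reviewer rather than acted on automatically.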

Natural Language Processing (NLP). Natural language processing focuses on enabling computers to understand and process human language (i.e. text). By automatically extracting and analyzing critical information from large volumes of documents and communications, NLP can make regulatory reviews and assessments more efficient. Advanced NLP models have made significant progress in understanding and generating human-like text and can be used to respond automatically to inquiries from regulators and the public. However, NLP techniques carry risks of misunderstanding and bias, because models may not fully account for context or tone that varies with cultural or social norms. Such shortcomings can lead to incorrect regulatory responses or actions when these technologies are adopted without human intervention.

Generative AI. Generative AI refers to artificial intelligence technology that can generate new content (such as text, images, and other media) based on existing data.

Artificial Intelligence Agents (AI Agents). AI agents are specialized generative AI models that can perform complex tasks through preset workflows, such as automating customer service interactions, generating legal and regulatory documents, and even conducting virtual negotiations on behalf of human operators. In the regulatory field, generative AI and AI agents have many potential applications. For example, regulated entities can use them to generate detailed periodic or on-demand compliance reports automatically. Regulators can also use such technologies to analyze large amounts of regulatory filing data and produce a shortlist of potential violations and risk indicators. However, as with the inherent limitations of NLP technology, current generative AI models, which are mainly based on large language models (LLMs), are limited in the accuracy and reliability of their output by possible “hallucinations” and contextual misunderstandings.

General AI. General artificial intelligence refers to a highly autonomous system that can perform any cognitive task that humans can undertake. Unlike generative AI, which is designed specifically for content-creation tasks, general AI is characterized by its versatility and its ability to adapt to a wide range of scenarios without task-specific programming. Although still at the conceptual stage, general AI could enable highly adaptive regulatory oversight and compliance management systems that adjust autonomously to new regulations and complex legal requirements with little or no human intervention.

4.2 Artificial intelligence solutions in the field of Web3 regulation

In this section we explore how different types of AI technologies can be applied in Web3 regulation to address challenges in monitoring, enforcement and compliance management. We divide these technologies into two categories: applications using narrow AI and applications using generative AI. Narrow AI refers to AI systems designed to perform specific tasks and operate under limited constraints. It is also called “specialized AI” or “weak AI.”

Regulatory reporting tools. AI-powered regulatory reporting tools can automatically collect, submit and analyze regulatory returns and certification reports. These systems use advanced data mining and processing algorithms to extract and organize information from large data sets to facilitate seamless regulatory reporting. Beyond reporting automation, AI tools that perform predictive analytics can help regulated entities identify risk factors, thereby reducing potential compliance failures. For example, artificial intelligence can be used to monitor and predict financial risks that may hinder compliance with liquidity and capital obligations.

Risk Profiling. AI systems built for risk profiling can analyze and classify virtual assets or financial entities according to their risk characteristics and the applicable regulatory requirements. These systems can assess historical performance, market behavior and external factors to maintain a dynamic risk profile. By constantly learning from new data and regulatory updates, these AI profiling tools can keep profiles in sync with the ever-evolving financial landscape.

Know Your Transaction (KYT). Using graph analysis and graph neural networks (GNNs), AI-powered KYT and anomaly detection systems can be designed specifically to monitor and analyze accounts and transactions on blockchain networks. By using artificial intelligence to examine complex blockchain transaction flows, regulated entities can better identify high-risk transactions and accounts and strengthen the measures that implement anti-money laundering (AML) requirements. While existing KYT solutions are primarily rules-based, industry players are integrating AI techniques, for example using pattern recognition for wallet clustering and cross-chain asset flow analysis.
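The graph view of transaction flows can be sketched with a plain breadth-first search: starting from wallets already flagged as high risk, it finds every wallet that received funds within a given number of hops. This is a rules-based illustration of graph analysis, not a GNN and not any vendor’s KYT product; the wallet names, the `wallets_within_hops` helper and the hop limit are hypothetical.

```python
from collections import deque


def wallets_within_hops(transfers, flagged, max_hops):
    """Map each wallet reachable from a flagged wallet to its hop distance.

    transfers: iterable of (sender, receiver) pairs, treated as directed edges.
    flagged:   wallets already known to be high risk (distance 0).
    max_hops:  how far downstream to follow the funds.
    """
    graph = {}
    for sender, receiver in transfers:
        graph.setdefault(sender, set()).add(receiver)

    distance = {wallet: 0 for wallet in flagged}
    queue = deque(flagged)
    while queue:
        wallet = queue.popleft()
        if distance[wallet] == max_hops:
            continue  # do not expand beyond the hop limit
        for receiver in graph.get(wallet, ()):
            if receiver not in distance:
                distance[receiver] = distance[wallet] + 1
                queue.append(receiver)
    return distance


# Hypothetical transfer graph: funds leave a flagged mixer and move downstream.
transfers = [
    ("mixer", "w1"),
    ("w1", "w2"),
    ("w2", "w3"),
    ("w4", "w5"),
    ("w3", "exchange"),
]
exposure = wallets_within_hops(transfers, flagged=["mixer"], max_hops=2)
```

A learned model would typically replace the fixed hop rule with features such as amounts, timing and clustering of wallets, but the underlying data structure is the same directed transfer graph.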

Financial risk assessment. In traditional finance, AI models are already used in cash flow forecasting and liquidity management. In DeFi, platform operators and users can use AI models to manage liquidity more effectively by analyzing and predicting liquidity risks within and between decentralized exchanges and lending platforms. These models can monitor transaction volume, token reserves and user behavior to identify potential liquidity shortages before they become severe. The warnings and actionable insights such models provide are useful not only to financial institutions that serve consumers but also to the regulators that supervise those services, helping to maintain the stability of, and confidence in, the DeFi ecosystem.
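The kind of early warning described above can be sketched with two simple indicators computed over reserve snapshots: coverage of withdrawal demand and drawdown from the peak reserve level. This is a threshold-based illustration rather than a predictive AI model; the field names, the `liquidity_alerts` helper, the thresholds and the sample data are all assumptions chosen for the example.

```python
def liquidity_alerts(snapshots, min_coverage=1.2, max_drawdown=0.30):
    """Return (time, reasons) pairs for snapshots that breach either threshold.

    coverage = reserves / withdrawal_demand
    drawdown = 1 - reserves / highest reserves seen so far
    """
    alerts = []
    peak = 0.0
    for snap in snapshots:
        reserves = snap["reserves"]
        demand = snap["withdrawal_demand"]
        peak = max(peak, reserves)

        coverage = reserves / demand if demand else float("inf")
        drawdown = 1 - reserves / peak if peak else 0.0

        reasons = []
        if coverage < min_coverage:
            reasons.append("low coverage")
        if drawdown > max_drawdown:
            reasons.append("reserve drawdown")
        if reasons:
            alerts.append((snap["t"], reasons))
    return alerts


# Hypothetical pool snapshots (token units), ordered by time.
snapshots = [
    {"t": 0, "reserves": 1000.0, "withdrawal_demand": 200.0},
    {"t": 1, "reserves": 900.0, "withdrawal_demand": 300.0},
    {"t": 2, "reserves": 600.0, "withdrawal_demand": 550.0},
    {"t": 3, "reserves": 650.0, "withdrawal_demand": 400.0},
]
alerts = liquidity_alerts(snapshots)
```

A forecasting model would aim to raise the alert before the thresholds are breached; the fixed rules here only show which quantities such a model might watch.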

Automatic compliance checks. Automatic compliance checks performed by generative AI could transform how businesses comply with regulations by interpreting the legal frameworks of different jurisdictions. Such AI tools rely on complex semantic analysis to understand the nuances of regulatory texts, court decisions, interpretive letters and other regulatory publications. The technology can update its regulatory databases and algorithms as new regulations pass, allowing companies to adapt quickly to regulatory change. Implementing such tools could enable companies to comply with local and international regulations more efficiently and economically, significantly reducing their risk of penalties and legal challenges. Generative AI models are also valuable tools for Web3 firms and Virtual Asset Service Providers (VASPs), which can use them to speed up manual tasks such as drafting white papers and charters and building customer service chatbots. Other emerging AI tools help keep disclosures up to date and compliant, and ensure that communications and marketing materials stay within permitted regulatory bounds. These developments point to the industry’s potential shift towards greater efficiency and stronger regulatory compliance.

Smart contract audit. Smart contract audits use generative AI to parse and analyze the logic and functionality of smart contracts across multiple platforms and programming languages. Advanced large language models (LLMs) can support detailed review of complex code logic to identify inconsistencies, vulnerabilities, and conflicts with existing legal frameworks. These AI systems can learn from past audits to improve their diagnostic accuracy, providing strong support for developers and regulators in verifying the security and legal compliance of smart contracts. The next section expands on pilot projects that explore such applications.

Market sentiment analysis. Generative AI can be used to analyze large amounts of unstructured data from social media, forums and news media to assess public sentiment about market conditions or specific assets. By interpreting language and detecting shifts in mood, such tools can anticipate potential market trends, providing early warnings both to traders and investors who want to respond to those trends and to regulators monitoring for market manipulation.
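For intuition only, the sketch below scores short posts with a tiny word list. A lexicon count is a deliberately simple stand-in for the generative AI models discussed above, and the word lists, the `sentiment_score` helper and the sample posts are invented for this example.

```python
import re

# Hypothetical, deliberately tiny lexicons; a real system would use a trained model.
POSITIVE = {"bullish", "rally", "gain", "surge", "strong"}
NEGATIVE = {"hack", "exploit", "crash", "scam", "rug", "depeg"}


def sentiment_score(text):
    """Return a score in [-1, 1]: +1 all positive cues, -1 all negative, 0 if none."""
    words = re.findall(r"[a-z']+", text.lower())
    positive = sum(word in POSITIVE for word in words)
    negative = sum(word in NEGATIVE for word in words)
    total = positive + negative
    return 0.0 if total == 0 else (positive - negative) / total


posts = [
    "Strong rally today, very bullish",
    "Reports of an exploit, possible scam",
    "Nothing new",
]
scores = [sentiment_score(post) for post in posts]
```

Tracking the average score over time, rather than any single post, is what would let a monitoring tool notice a sudden shift in mood around a specific asset.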

4.3 Challenges in the implementation of artificial intelligence

To achieve effective and reliable results, deploying AI systems for regulatory supervision requires overcoming a range of challenges. We examine some key issues, including ethics and privacy, the mitigation of AI bias, and the need for greater transparency in model behavior. Addressing these challenges is critical to building trust in the use of AI in regulatory processes, especially where supervisory action and judgment are required.

The deployment of artificial intelligence in the regulatory field raises clear ethical and bias issues that need careful attention. Ethical norms are crucial to ensuring that AI decisions, which can profoundly affect individual lives, remain fair and effective. Bias inherent in training data or algorithms can produce skewed results that put some groups at an unfair disadvantage, undermining the fairness and effectiveness of regulation. Clear disclosure of how data is used, processed and shared is necessary to promote accountability and build trust among stakeholders. Furthermore, regulators that rely on AI to interpret large amounts of data submitted by regulated entities should ensure that measures are in place for the AI to explain what data was used and how conclusions were reached. Without transparency in data usage and sufficient traceability of decision-making, regulated entities may question the reliability of decisions that affect them, straining their relationship with regulators.

AI systems require access to large amounts of data, which raises major privacy concerns. These systems may inadvertently expose sensitive information or misuse data, resulting in potential breaches or unauthorized access. The collection, storage and processing of such data must be subject to strict data protection measures to protect personal privacy. In the regulatory field, the integrity of AI responses is also vulnerable to “prompt hacking”. Users may, deliberately or inadvertently, provide misleading input that influences the model’s decision-making and, in turn, the quality and reliability of its output. Addressing these vulnerabilities requires advanced real-time monitoring tools that can analyze and mitigate potentially malicious prompts. Finally, the apparent accuracy and fluency of AI-generated responses may lead users to rely on them too heavily. Human oversight remains necessary to prevent over-reliance on AI systems and to ensure that AI capabilities are used prudently.

4.4 Future direction

The integration of advanced AI technologies is expected to affect how future regulations are formulated, monitored and implemented. We foresee potential advances in predictive analytics and decision-making, as well as emerging technologies that may change regulatory activities. Advances in predictive analytics may reshape AI-driven methods of regulation and supervision. They enable regulatory approaches that are not only proactive but also preventive, anticipating potential compliance issues and regulatory violations before they occur. Machine learning algorithms can be trained to detect anomalies that precede fraudulent activity or violations. This allows policy makers to address potential issues before they escalate, improving the accuracy and timeliness of regulatory interventions. Technological innovations such as quantum computing and advanced neural networks are expected to expand the analytical capabilities of AI systems, allowing them to process and interpret complex regulatory data with greater sophistication. For example, quantum computing may handle large-scale computation at unprecedented speed, supporting more detailed and comprehensive evaluation. Advanced neural networks can learn from more diverse and complex datasets, providing nuanced insights that were previously unavailable. At the same time, theoretical advances in AI ethics and governance are informing frameworks that guide these technologies to operate within recognized social values and legal standards. As these technologies and frameworks develop, they will help produce AI-driven regulatory tools that are more efficient, more effective and fairer.

5. ADGM’s pilot in artificial intelligence innovation (joint work with the National University of Singapore AIDF)

Abu Dhabi Global Market (ADGM) and the Asian Institute of Digital Finance (NUS AIDF) share common goals in addressing the risks and regulatory challenges posed by the rapidly evolving Web3 sector. To this end, the two parties have run a joint pilot project since 2022 to study artificial intelligence technologies that can improve the security audit process for blockchain applications and virtual assets (VAs). The pilot uses innovative AI technologies to analyze audit logs and trace historical security events in order to identify patterns and provide insights into potential vulnerabilities. This section introduces three pilots that demonstrate AI’s potential in advancing regulatory assessments of VAs and their service providers.

5.1 Pilot 1: AI-based smart contract adaptability assessment

5.1.1 Introduction

Smart contracts are basic components of blockchain technology that can safely and automatically execute agreements and transactions on a decentralized platform. Given their importance in blockchain applications, their code bases need to be comprehensively evaluated and verified to ensure that they operate as expected and meet regulatory standards. This section introduces the first pilot project: an AI-powered smart contract adaptability assessment platform.

5.1.2 Existing solutions and service providers

Current smart contract verification practices combine manual evaluation with advanced tooling to find potential vulnerabilities and improve efficiency. Leading service providers, including CertiK, Trail of Bits, Halborn and Hacken, combine static analysis, dynamic analysis and manually led formal verification to evaluate and harden smart contracts against cyber attacks and performance issues. As Web3 technology enters regulated industries, the paradigm of smart contract verification urgently needs to be expanded. Beyond technical vulnerabilities, when smart contracts are used to automate regulated activities, their audits should also verify compliance with the relevant regulatory requirements.

5.1.3 AI-driven evaluation

This pilot analyzes the consistency between smart contract code and the corresponding VA white paper using two methods.

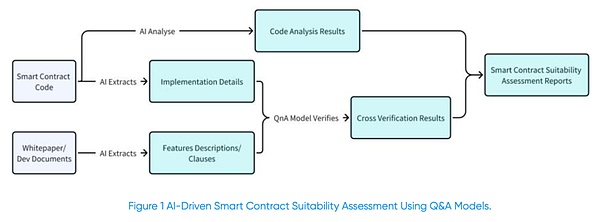

LLM-Based Validator Method. This method uses proprietary AI models to analyze how closely the smart contract code aligns with its corresponding VA white paper. Training data preparation first extracts terms and specifications from widely used smart contract code bases and classifies them by project type, forming the knowledge base required for targeted analysis. A large language model (LLM) is then used to extract evidence from the smart contract code under evaluation and from its white paper, and to verify whether the goals described in the white paper are implemented in the code. The model verifies the claims item by item in a question-and-answer format (Figure 1), reviewing the content of the white paper against the code base.

The model also performs industry-accepted technical checks, such as static code analysis, to identify potential vulnerabilities, and compares implementation details with industry-wide practices and related standards to verify their consistency. These checks help ensure that smart contracts execute as expected and meet the operational and compliance standards set out in the white paper.
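The item-by-item question-and-answer loop described above might be organized as in the following minimal sketch. It is not the pilot's implementation: the regular expressions are a deliberately simple stand-in for LLM evidence extraction, and the contract source, the questions and all names (`ClaimCheck`, `Finding`, `verify_claims`) are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical contract source used only for illustration.
CONTRACT_SOURCE = """
contract Token {
    uint256 public constant MAX_SUPPLY = 100_000_000 * 10**18;
    function mint(address to, uint256 amount) external onlyOwner {
        require(totalSupply() + amount <= MAX_SUPPLY, "cap exceeded");
        _mint(to, amount);
    }
}
"""

@dataclass
class ClaimCheck:
    question: str  # question derived from a white-paper statement
    pattern: str   # assumption: a regex stands in for LLM evidence extraction

@dataclass
class Finding:
    question: str
    supported: bool
    evidence: Optional[str]  # matched code fragment, or None if nothing matched

def verify_claims(source: str, checks: List[ClaimCheck]) -> List[Finding]:
    """Answer each question separately and keep the supporting code fragment."""
    findings = []
    for check in checks:
        match = re.search(check.pattern, source)
        evidence = match.group(0).strip() if match else None
        findings.append(Finding(check.question, match is not None, evidence))
    return findings

CHECKS = [
    ClaimCheck("Is the supply capped at 100 million tokens?",
               r"MAX_SUPPLY\s*=\s*100_000_000"),
    ClaimCheck("Is minting restricted to the owner?",
               r"function mint\([^)]*\)[^{]*onlyOwner"),
    ClaimCheck("Can holders burn their tokens?",
               r"function burn\("),
]

if __name__ == "__main__":
    for finding in verify_claims(CONTRACT_SOURCE, CHECKS):
        status = "supported" if finding.supported else "NOT FOUND"
        print(f"{finding.question} -> {status} ({finding.evidence})")
```

Keeping the evidence fragment next to each answer lets a reviewer trace a verdict back to the code, whichever extraction technique is actually used.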

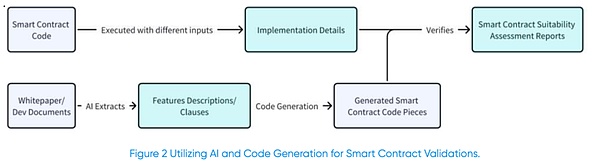

Code Generation Method. This method uses AI to generate code snippets based on the goals and functions described in the VA white paper (for example, issuing tokens with a maximum supply of 100 million). The generated snippets are then compared with the original smart contract code: the original code and the AI-generated code are run under the same input conditions and their outputs are compared. The goal is to verify that the functional output is consistent even when the code structure or style differs. If the outputs match, this supports the conclusion that the original code executes according to the white paper specification; if they differ, the code is reviewed further to find the source of the inconsistency and is adjusted or re-evaluated as appropriate. Optionally, a direct comparison test can also be conducted between the AI-generated code and the original contract code (Figure 2).
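The output comparison described above is a form of differential testing. The sketch below illustrates the idea in Python rather than in a contract language, using the 100 million cap from the example; `original_mint`, `generated_mint` and `buggy_mint` are hypothetical stand-ins for the audited contract logic, the AI-generated reference and a faulty variant, and the test inputs are chosen by hand.

```python
from typing import Callable, List, Tuple

MAX_SUPPLY = 100_000_000  # cap taken from the white-paper example

MintFn = Callable[[int, int], Tuple[bool, int]]

def original_mint(total_supply: int, amount: int) -> Tuple[bool, int]:
    """Stand-in for the audited contract logic: returns (success, new supply)."""
    if amount <= 0 or total_supply + amount > MAX_SUPPLY:
        return (False, total_supply)
    return (True, total_supply + amount)

def generated_mint(total_supply: int, amount: int) -> Tuple[bool, int]:
    """Stand-in for AI-generated reference code, written in a different style."""
    remaining = MAX_SUPPLY - total_supply
    ok = 0 < amount <= remaining
    return (ok, total_supply + amount if ok else total_supply)

def buggy_mint(total_supply: int, amount: int) -> Tuple[bool, int]:
    """Faulty variant: wrongly rejects minting exactly up to the cap."""
    if amount <= 0 or total_supply + amount >= MAX_SUPPLY:
        return (False, total_supply)
    return (True, total_supply + amount)

def differential_test(impl_a: MintFn, impl_b: MintFn,
                      cases: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Run both implementations on identical inputs; return the inputs that disagree."""
    return [case for case in cases if impl_a(*case) != impl_b(*case)]

# (total_supply, amount) pairs, including boundary values around the cap.
CASES = [(0, 1), (0, MAX_SUPPLY), (MAX_SUPPLY - 1, 1),
         (MAX_SUPPLY, 1), (50, 0), (10, -5)]

if __name__ == "__main__":
    print(differential_test(original_mint, generated_mint, CASES))
    print(differential_test(buggy_mint, generated_mint, CASES))
```

An empty result only shows agreement on the inputs tried, so boundary values such as minting exactly up to the cap are worth including; any input in the returned list is a starting point for the further review mentioned above.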

Together, these two methods form a verification framework for evaluating how a smart contract is implemented, locating errors and omissions, and ensuring that the contract operates in the intended and publicly declared manner. Such insights give regulators a valuable, objective basis for verifying project claims.

5.2 Pilot 2: Audit report evaluation

5.2.1 Introduction

To ensure that the business logic carried by smart contracts is safe and reliable, project teams usually hire a security audit firm to evaluate the code and publish an audit report. However, reviewing such reports often requires expertise in computer science and security, which regulators may not have. To fill this knowledge gap, this pilot tests an evaluation framework that uses an LLM to assess the adequacy of such security audit reports.

5.2.2 Existing solutions and service providers

In traditional practice, security audits rely on automated tools, manual evaluation and expert analysis; the process is time-consuming and its conclusions are subjective. Auditors usually have to check the code base, configuration and operational processes to identify vulnerabilities and weak points. Because the assessment is mainly manual, the workload is heavy; the reliance on human professional judgment also introduces the risk of error and of subjective differences, since different auditors may interpret findings and risks inconsistently. The growing complexity and scale of Web3 projects place higher demands on existing audit methods. Rapid technological development, the strongly open-source nature of the field and the surge in the number of decentralized applications put auditors under time pressure, which may affect the depth of analysis. Security audits often provide only a “snapshot” at a certain point in time, so they may miss threats and vulnerabilities that emerge after the audit. Another significant challenge is technical complexity: reports are usually highly technical and detailed, making it difficult for the public and regulators to fully understand and interpret their conclusions.

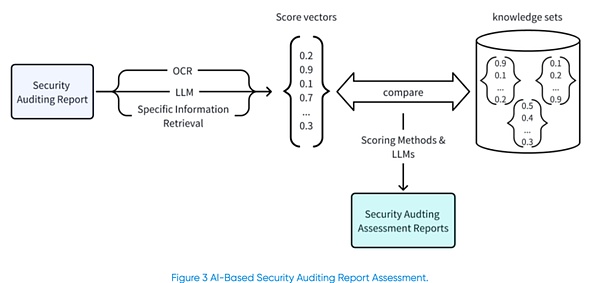

5.2.3 AI-based security audit report assisted evaluation

This evaluation tool uses AI to measure the quality of audit reports. The pilot first uses optical character recognition (OCR) and customized information retrieval technology to collect and organize the data required for evaluation, including the audit scope, evaluation methods, audit tools and problem descriptions in the report. The report is then processed with an off-the-shelf LLM to generate embeddings, which are stored as vectors (Figure 3). This step uses advanced natural language processing (NLP) techniques, such as entity recognition and dependency parsing based on custom libraries, to understand and classify the report content. Once the data has been processed, the tool compares the stored vectors against a predefined knowledge set (the database shown in Figure 3). The knowledge set covers five specific categories: (1) content quality and coverage, (2) vulnerability identification and priority ranking, (3) mitigation strategies and reporting impact, (4) presentation quality and audit methodology, and (5) reporting relevance and accessibility. The evaluation process is both fast and comprehensive, usually taking about five minutes per report. Finally, the LLM is called again to generate the evaluation report. The report contains a total score, obtained as a weighted sum of the sub-scores for each of the categories above, that reflects the overall performance of the security audit report and points out its strengths and the areas to be improved. For the intermediate evaluation result of each category, the report also gives a detailed LLM-generated description that sets out its strengths and concerns. The schematic diagram is shown in Figure 3.
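The weighted summary of the five category scores can be sketched as follows. The source does not give the actual weights, scoring scale or cut-offs, so the percentages, the 0 to 100 scale and the two thresholds below are assumptions chosen only to make the arithmetic concrete; the function names are likewise hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical weights in percent (they sum to 100). The real weighting
# used in the pilot is not published in the source.
CATEGORY_WEIGHTS: Dict[str, int] = {
    "content quality and coverage": 25,
    "vulnerability identification and priority ranking": 25,
    "mitigation strategies and reporting impact": 20,
    "presentation quality and audit methodology": 15,
    "reporting relevance and accessibility": 15,
}

def total_score(scores: Dict[str, float]) -> float:
    """Weighted sum of per-category scores, each assumed to lie in 0..100."""
    if set(scores) != set(CATEGORY_WEIGHTS):
        raise ValueError("scores must cover exactly the five categories")
    return sum(CATEGORY_WEIGHTS[c] * scores[c] for c in CATEGORY_WEIGHTS) / 100

def strengths_and_gaps(scores: Dict[str, float],
                       strong: float = 75,
                       weak: float = 65) -> Tuple[List[str], List[str]]:
    """Split categories into strengths (>= strong) and areas to improve (< weak)."""
    strengths = [c for c in CATEGORY_WEIGHTS if scores[c] >= strong]
    gaps = [c for c in CATEGORY_WEIGHTS if scores[c] < weak]
    return strengths, gaps

# Illustrative scores for one audit report (made-up values).
EXAMPLE_SCORES: Dict[str, float] = {
    "content quality and coverage": 80,
    "vulnerability identification and priority ranking": 70,
    "mitigation strategies and reporting impact": 60,
    "presentation quality and audit methodology": 90,
    "reporting relevance and accessibility": 50,
}

if __name__ == "__main__":
    print(total_score(EXAMPLE_SCORES))
    print(strengths_and_gaps(EXAMPLE_SCORES))
```

Expressing the weights as integer percentages keeps the total easy to check by hand: for the example scores it is (25·80 + 25·70 + 20·60 + 15·90 + 15·50) / 100 = 70.5.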

5.3 Pilot 3: AI-based smart due diligence

5.3.1 Introduction

Initial and ongoing due diligence on Web3 projects is crucial to regulators during licensing and ongoing supervision. Virtual Asset Service Providers (VASPs), as virtual asset intermediaries, also need to perform their own due diligence on the relevant blockchain projects and their tokens before offering virtual assets (VAs) to customers. Because of blockchain’s decentralized nature, pseudonymous identities and new organizational forms, Web3 due diligence faces unique challenges. Identifying and verifying true identities, understanding complex technical infrastructure, and dealing with diverse organizational structures and evolving legal frameworks all make the process more complex. At the same time, publicly available data in the Web3 field can be used to gain a better understanding of activities: on-chain data provides verifiable, real-time insights into transactions and smart contract operation, while qualitative off-chain information (such as team qualifications, market sentiment, forum and DAO discussions, and official social media channels) complements the evaluation. However, although the data is public, ingesting such massive and highly technical information remains challenging and requires mature processing and analysis tools. Introducing artificial intelligence (AI) simplifies the due diligence process, allowing regulators and VASPs to review and evaluate Web3 projects more efficiently.

5.3.2 Existing solutions and service providers

To meet the need for complex data analysis and due diligence, many service providers have emerged in the Web3 and VA fields. Their tools and services can streamline compliance processes, verify identities and handle some regulatory obligations across different jurisdictions. For example, Chainalysis and Elliptic provide blockchain analytics tools that help trace the source of crypto asset transactions and support Anti-Money Laundering (AML) and Combating the Financing of Terrorism (CFT) compliance. Other companies provide digital identity verification solutions that try to identify users in a decentralized environment. Although these tools are effective for specific steps, they cannot yet cover the full spectrum of oversight required by regulators and VASPs. This pilot aims to further improve the overall due diligence process for regulators and VASPs.

5.3.3 AI-assisted due diligence

This pilot applies AI in several ways to improve the due diligence practices of regulators and VASPs.

Generative AI supports onboarding. When a project applies to a regulator for a license, generative AI is used to tailor the onboarding process to the focus of the Web3 project. The model developed in this pilot can automatically generate personalized forms and list the documents that must be submitted. Such customization avoids a “one-size-fits-all” process and reduces submission requirements that are unrelated to the company’s specific business.

Generative AI reviews social media. The pilot uses AI tools to monitor and analyze the social media activity of businesses and their key personnel, identifying inconsistent public disclosures, reputational risks, and signs of misleading or fraudulent statements. The model can understand context and sentiment, and outputs potential concerns for regulators to consider. (Note: this paragraph appears twice in the original text and is presented once here.)

Regulatory Q&A Agent. The agent allows regulators to run retrieval-based queries over Web3 project data, covering the enterprise’s self-reported documents, smart contract details, official announcements and disclosures, and so on. Drawing on the latest data available at query time, the agent provides on-demand insights that are easy for users without a technical background to understand; every reply is categorized and labeled with its source and accompanied by links to the original data. The system will be updated continuously with new data and will support regulators in connecting additional data sources.
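The requirement that every reply be categorized and linked to its source can be illustrated with the minimal sketch below. It uses plain keyword overlap for retrieval, which is a simplification and not the agent's actual method; the corpus, the `example.org` URLs, the stop-word list and all names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Set

@dataclass(frozen=True)
class Document:
    category: str  # e.g. "self-reported", "smart contract", "announcement"
    url: str       # link back to the original data (placeholder URLs here)
    text: str

# Assumption: a tiny stop-word list is enough for this illustration.
STOPWORDS = {"the", "is", "of", "a", "what", "to", "has", "who"}

def tokenize(text: str) -> Set[str]:
    """Lower-case the text and return its alphanumeric tokens minus stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def retrieve(question: str, corpus: List[Document], top_k: int = 2) -> List[Document]:
    """Rank documents by the number of tokens shared with the question."""
    query = tokenize(question)
    scored = [(len(query & tokenize(doc.text)), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps corpus order on ties
    return [doc for _, doc in scored[:top_k]]

def answer(question: str, corpus: List[Document]) -> List[str]:
    """Return each retrieved passage tagged with its category and source link."""
    return [f"[{doc.category}] {doc.text} (source: {doc.url})"
            for doc in retrieve(question, corpus)]

CORPUS = [
    Document("self-reported", "https://example.org/filing",
             "The token has a maximum supply of 100 million units."),
    Document("smart contract", "https://example.org/contract",
             "MAX_SUPPLY constant limits minting to 100 million tokens."),
    Document("announcement", "https://example.org/news",
             "The team announced a new exchange listing."),
]

if __name__ == "__main__":
    for line in answer("What is the maximum supply of the token?", CORPUS):
        print(line)
```

Returning nothing when no document matches, rather than an unsupported answer, is one simple way to keep every reply traceable to a source.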

By applying AI to steps such as enterprise onboarding, risk identification and real-time regulatory insight, this pilot effectively replaces repetitive and redundant work. Given that numerous regulators are actively exploring such innovations, the project has the potential to be deployed and further developed on a larger scale.

6. Conclusion and future work

6.1 Conclusion

The rapid evolution of Web3 and VA activities paves the way for innovation while bringing new and complex regulatory challenges. Integrating AI into regulatory processes promises to strengthen regulators’ toolbox so that they can better monitor, predict and mitigate risks arising from the Web3 and VA sectors. The pilot projects introduced in this article give practical examples of AI in this field and demonstrate its practical role in improving industry compliance practice.

6.2 Key Points

The transformative potential of artificial intelligence in Web3 supervisory technology (SupTech) and regulatory technology (RegTech)

· AI-driven solutions can significantly improve the effectiveness of Web3 regulation, including real-time risk analysis, forward-looking vulnerability detection, and more efficient compliance monitoring.

· By using a variety of AI technologies (such as machine learning, natural language processing (NLP), generative AI and autonomous agents), regulators can better maintain oversight, streamline reporting processes, detect anomalies, and understand sentiment and public opinion in the decentralized ecosystem.

· Integrating AI into Web3 regulation reduces complexity across jurisdictions, adapts to 24/7 operation, and makes compliance frameworks more accessible, flexible and innovative.

Challenges facing AI implementation

· Ethics and privacy, model bias, and the need for transparency and traceability are key issues.

· Human oversight is essential to reduce over-reliance on AI and to ensure the reliability of applications.

Practical applications demonstrated by the pilot

· AI-enhanced smart contract evaluation helps ensure consistency with the white paper and regulatory standards.

· Automatic evaluation of audit reports and due diligence processes can significantly improve efficiency.

· Generative AI tools support enterprise onboarding and social media analysis, and efficiently provide useful insights to regulators.

Future direction

· Advances in predictive analytics, adaptable AI systems and global collaboration will drive more effective regulatory practices.

· Establishing an AI governance framework and ethical standards will become the key to maintaining trust and accountability.

6.3 Future work

Looking to the future, several key directions will drive the continuous evolution and integration of Artificial Intelligence (AI) in the regulatory process:

· Advanced AI Models: As AI technology advances, model capabilities and result quality are expected to improve further, at lower cost and with lower computing resource usage.

· Enhanced Predictive Analytics: Further developments in predictive analytics will support more accurate predictions of risks and compliance violations. With larger and more specialized datasets, as well as more sophisticated algorithms, AI systems can enable early intervention by identifying problems before they occur.

· Advanced AI Governance and Ethics: To ensure that AI applications in regulatory scenarios are ethical, transparent and less biased, it is imperative to establish a systematic AI governance framework. Developing AI ethical standards and guidelines will help build trust and accountability in AI-based regulatory systems.

· Adaptive and Explainable AI: Future AI systems should be adaptive, able to learn continuously and evolve with changes in the regulatory environment and in Web3 activities. Improving the interpretability of algorithms and decisions will make regulatory decisions more transparent and understandable to the parties they affect.

· Global Collaboration: Establishing and sharing best practices across jurisdictions will promote more consistent and effective regulation of the global Web3 ecosystem.