Written by: imToken

At the end of 2025, the Ethereum community completed the Fusaka upgrade with relatively little fanfare.

Looking back on the past year, discussions of low-level protocol upgrades have gradually faded from the market spotlight. Even so, I believe many on-chain users have personally felt one significant change: Ethereum L2s keep getting cheaper.

In today’s on-chain interactions, gas fees often cost only a few cents or are negligible, whether for simple transfers or complex DeFi operations. The Dencun upgrade and the Blob mechanism are clearly indispensable to this. At the same time, PeerDAS (Peer Data Availability Sampling), the core feature of the Fusaka upgrade, has officially been activated. With it, Ethereum is bidding farewell to the era of “full download” data verification, in which every node had to download all of the data in order to verify it.

It can be said that Blobs alone will not decide whether Ethereum can support large-scale applications sustainably over the long term. What truly decides it is the next step that PeerDAS represents.

1. What is PeerDAS?

To understand why PeerDAS is revolutionary, we cannot just talk in concepts. We must first go back to a key milestone on Ethereum’s scaling roadmap: the Dencun upgrade in March 2024.

At that time, EIP-4844 introduced the Blob-carrying transaction type, which lets large amounts of transaction data be attached to blocks as blobs. L2s no longer had to rely on expensive calldata and could instead post their data to temporary Blob storage (nodes prune blobs after roughly 18 days).
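To make the Blob model a little more concrete, here is a minimal Python sketch of how a rollup might pack a batch of bytes into one blob. The sizes (4,096 field elements of 32 bytes each, i.e. 128 KiB per blob) come from EIP-4844. The encoding itself is an assumption for illustration: it stores 31 payload bytes per field element behind a zero byte so that every element stays below the BLS12-381 scalar field modulus. Real rollups use their own encodings, and the helper names `pack_into_blob` and `unpack_from_blob` are hypothetical.

```python
# Minimal sketch: pack rollup bytes into one EIP-4844-sized blob.
# Sizes are from EIP-4844; the 31-bytes-per-element encoding is an
# illustrative assumption, not any specific rollup's format.

FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131072 bytes
PAYLOAD_BYTES_PER_ELEMENT = 31  # hypothetical encoding choice
MAX_PAYLOAD = FIELD_ELEMENTS_PER_BLOB * PAYLOAD_BYTES_PER_ELEMENT  # 126976 bytes


def pack_into_blob(payload: bytes) -> bytes:
    """Return a BLOB_SIZE-byte blob carrying `payload`, zero-padded."""
    if len(payload) > MAX_PAYLOAD:
        raise ValueError("payload does not fit in a single blob")
    blob = bytearray()
    for i in range(FIELD_ELEMENTS_PER_BLOB):
        start = i * PAYLOAD_BYTES_PER_ELEMENT
        chunk = payload[start:start + PAYLOAD_BYTES_PER_ELEMENT]
        # A leading zero byte keeps each 32-byte big-endian element below 2**248,
        # safely under the BLS12-381 scalar field modulus (just under 2**255).
        blob += b"\x00" + chunk.ljust(PAYLOAD_BYTES_PER_ELEMENT, b"\x00")
    return bytes(blob)


def unpack_from_blob(blob: bytes, length: int) -> bytes:
    """Inverse of pack_into_blob; `length` is the original payload size."""
    payload = bytearray()
    for i in range(FIELD_ELEMENTS_PER_BLOB):
        start = i * BYTES_PER_FIELD_ELEMENT
        element = blob[start:start + BYTES_PER_FIELD_ELEMENT]
        payload += element[1:]  # drop the zero guard byte
    return bytes(payload[:length])


batch = b"compressed L2 batch " * 500
blob = pack_into_blob(batch)
print(len(blob), unpack_from_blob(blob, len(batch)) == batch)
```

Giving up one byte in 32 is a simple way to respect the field-element constraint; denser encodings squeeze out more space at the cost of extra bit-packing.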

This change directly cut Rollup costs to roughly one-tenth of their previous level. L2 platforms could offer cheaper and faster transactions without compromising the security and decentralization they inherit from Ethereum. It also gave us users our first taste of the “low gas era”.

However, useful as Blobs are, the Ethereum mainnet places a hard upper limit on how many Blobs each block can carry (a target of 3 and a maximum of 6 at Dencun). The reason is practical: network bandwidth and disk space are finite.

Under the traditional verification model, every validator in the network must still download and propagate the complete Blob data to confirm that the data is actually available. This applies equally to a server run by a professional operator and to an ordinary computer in a retail user’s home.

This creates a dilemma:

- If the number of Blobs is increased (to scale capacity): data volume surges, and home nodes’ bandwidth and disks fill up until they are forced offline. The network quickly centralizes and eventually becomes a giant chain that can only run in large data centers;

- If the number of Blobs is capped (to preserve decentralization): L2 throughput is locked in and cannot keep up with explosive future demand.
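Some rough arithmetic shows why the first option hurts home nodes. The sketch below assumes the EIP-4844 blob size (128 KiB), 12-second slots and a retention window of 4,096 epochs (about 18 days). It ignores gossip re-broadcasting, proofs and bursty arrival, so real requirements are higher. The function names are hypothetical.

```python
# Back-of-the-envelope cost of the full-download model (illustrative only).
BLOB_SIZE_BYTES = 4096 * 32      # 131072 bytes = 128 KiB per blob (EIP-4844)
SLOT_SECONDS = 12                # one block slot
RETENTION_SLOTS = 4096 * 32      # ~18 days: 4096 epochs of 32 slots


def bytes_per_block(blobs_per_block: int) -> int:
    """Blob bytes every node fetches per block if it downloads everything."""
    return blobs_per_block * BLOB_SIZE_BYTES


def avg_kib_per_second(blobs_per_block: int) -> float:
    """Average inbound rate; ignores re-broadcast and burstiness."""
    return bytes_per_block(blobs_per_block) / SLOT_SECONDS / 1024


def retained_gib(blobs_per_block: int) -> float:
    """Disk needed to keep every blob for the retention window."""
    return bytes_per_block(blobs_per_block) * RETENTION_SLOTS / 2**30


for n in (3, 6, 48):
    print(f"{n:>2} blobs: {avg_kib_per_second(n):6.1f} KiB/s, {retained_gib(n):6.1f} GiB")
```

Under these assumptions, going from 6 to 48 blobs per block with full download raises every node’s average inbound rate from 64 KiB/s to 512 KiB/s and its retained blob data from 96 GiB to 768 GiB.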

To put it bluntly, Blob is only the first step, and it solves the problem of “where to store data”. While data volumes are small, everything works. But the number of Rollups will keep growing, each will submit data at high frequency, and Blob capacity will keep expanding. At that point, the bandwidth and storage burden on nodes becomes a new centralization risk.

If we keep the traditional full-download model and fail to relieve bandwidth pressure, Ethereum’s scaling path will hit the wall of physical bandwidth. PeerDAS is the key to untying this knot.

To sum it up in one sentence: PeerDAS is a brand-new data verification architecture that breaks the iron law that verification requires downloading everything. This allows Blobs to scale beyond today’s physical throughput limits (for example, from 6 Blobs per block to 48 or more).

2. Blob solves “where to put it”, and PeerDAS solves “how to store it”

As mentioned above, Blob took the first step in scaling by solving the problem of “where to store data” (moving from expensive Calldata to temporary Blob space). What PeerDAS solves is the problem of “how to store it more efficiently”.

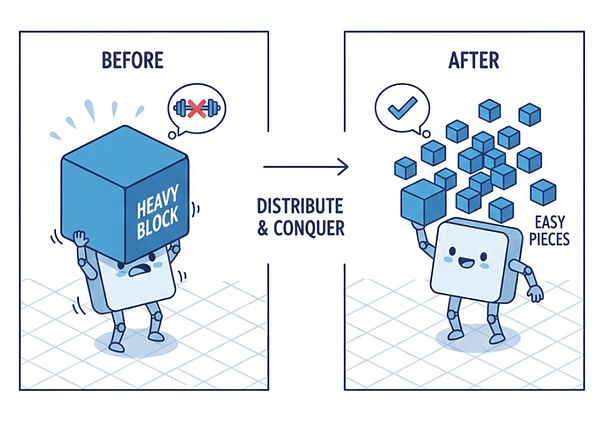

The core question it answers is this: how can the amount of data grow many times over without overwhelming nodes’ physical bandwidth? The idea is straightforward and rests on probability and distributed collaboration: “not everyone needs to store all of the data, yet the network can confirm with very high probability that the data really exists.”
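The “very high probability” part can be quantified. With a rate-1/2 erasure code, data that cannot be reconstructed must be missing more than half of its chunks. Each uniformly random sample then succeeds with probability below 1/2, and k independent samples all succeed with probability below (1/2)^k. The Python sketch below computes that bound and checks it with a small simulation. The 128-chunk layout, the sample counts and sampling with replacement are illustrative assumptions, not the exact PeerDAS parameters.

```python
import random


def undetected_bound(samples: int) -> float:
    """Upper bound on the chance that every sample succeeds although the
    data is unrecoverable (fewer than half of the extended chunks exist).
    Assumes independent, uniformly random samples with replacement."""
    return 0.5 ** samples


def simulate(samples: int, total: int = 128, available: int = 63,
             trials: int = 50_000, seed: int = 7) -> float:
    """Empirical rate at which a withholding attack (only `available` of
    `total` chunks published, too few to reconstruct) goes unnoticed."""
    rng = random.Random(seed)
    published = set(rng.sample(range(total), available))
    fooled = sum(
        all(rng.randrange(total) in published for _ in range(samples))
        for _ in range(trials)
    )
    return fooled / trials


for k in (1, 4, 8, 16):
    print(k, undetected_bound(k), simulate(k))
```

With 8 samples the bound is 1/256, roughly 0.4%, and each additional sample halves it. That is why a node can gain strong confidence while downloading only a small fraction of the data.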

This is reflected in PeerDAS’s full name: “Peer Data Availability Sampling”, that is, data availability sampling carried out among peers.

The concept sounds obscure, but a simple metaphor captures this paradigm shift. Full verification in the past was like a library acquiring a multi-thousand-page Encyclopedia Britannica (the Blob data). To guard against loss, every administrator (node) had to copy the complete set by hand as a backup.

That means only people with plenty of money and spare time (bandwidth and large hard drives) can serve as administrators, especially since the Encyclopedia Britannica (the Blob data) keeps growing. If this continues, ordinary people will be squeezed out and decentralization will disappear.

PeerDAS sampling introduces techniques such as erasure coding, which tear the book into many pieces and extend it with mathematical coding. Administrators no longer need to hold the whole book. Each only needs to keep a few randomly selected pages.
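The “a few pages per administrator” idea can be sketched as a deterministic, pseudo-random assignment of columns to nodes. In this toy model, all names and numbers are assumptions: 128 columns, 4 columns per node, and SHA-256 of the node id as the selection function. Each node keeps only a small slice, yet a modest number of nodes together hold every column. Real clients derive their custody assignment from their node ID using rules defined in the consensus specs, which this sketch does not reproduce.

```python
import hashlib

NUMBER_OF_COLUMNS = 128   # assumption: extended data split into 128 columns
COLUMNS_PER_NODE = 4      # assumption: minimum slice each node keeps


def custody_columns(node_id: int, count: int = COLUMNS_PER_NODE) -> set[int]:
    """Hypothetical deterministic assignment: hash (node_id, counter) until
    `count` distinct column indices have been selected."""
    chosen: set[int] = set()
    counter = 0
    while len(chosen) < count:
        digest = hashlib.sha256(f"{node_id}:{counter}".encode()).digest()
        chosen.add(int.from_bytes(digest[:8], "big") % NUMBER_OF_COLUMNS)
        counter += 1
    return chosen


def columns_held_by_network(num_nodes: int) -> set[int]:
    """Union of the columns kept by nodes 0..num_nodes-1."""
    held: set[int] = set()
    for node_id in range(num_nodes):
        held |= custody_columns(node_id)
    return held


for n in (1, 10, 100, 1000):
    held = columns_held_by_network(n)
    print(n, "nodes hold", len(held), "columns; reconstructable:",
          len(held) >= NUMBER_OF_COLUMNS // 2)
```

Because the assignment is deterministic, any peer can compute which columns another node should hold and ask it for exactly those.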

Verification no longer requires anyone to produce the entire book either. As long as the network as a whole can gather any 50% of the fragments (whether you hold page 10 or page 100), mathematical algorithms can restore the entire book exactly.
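The “any 50%” property comes from Reed-Solomon-style erasure coding. The k data values define a polynomial of degree below k, the data is extended to 2k evaluations of that polynomial, and any k of those evaluations pin the polynomial down again. The toy Python sketch below illustrates this with Lagrange interpolation over a small prime field (2^31 - 1, an arbitrary choice). PeerDAS itself works over the BLS12-381 scalar field with KZG commitments and far more efficient algorithms, and the function names here are hypothetical.

```python
import random

P = 2**31 - 1  # toy prime field; an arbitrary choice for illustration


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate, at x, the unique polynomial of degree < len(points) that
    passes through `points` (Lagrange interpolation mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse
    return total


def extend(data: list[int]) -> list[int]:
    """Rate-1/2 extension: data[i] is the polynomial's value at x = i;
    append its values at x = k .. 2k-1."""
    k = len(data)
    base = list(enumerate(data))
    return data + [_interpolate_at(base, x) for x in range(k, 2 * k)]


def reconstruct(fragments: list[tuple[int, int]], k: int) -> list[int]:
    """Recover the k original values from any k (index, value) fragments."""
    if len(fragments) < k:
        raise ValueError("need at least half of the extended fragments")
    return [_interpolate_at(fragments[:k], x) for x in range(k)]


original = [7, 42, 1000, 31337]   # "pages" of the book, each < P
extended = extend(original)       # 8 fragments; the first 4 are the originals
survivors = random.Random(0).sample(list(enumerate(extended)), 4)  # any 50%
print(reconstruct(survivors, 4) == original)
```

It does not matter which half survives: any 4 of the 8 fragments determine the same degree-3 polynomial, and therefore the same original pages.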

This is the magic of PeerDAS: the burden of downloading data is lifted from individual nodes and spread across a collaborative network of thousands of nodes.

Source: @Maaztwts


Looking at the numbers alone: before the Fusaka upgrade, the Blob count was stuck in single digits (3-6). PeerDAS tears this ceiling open, making it possible for Blob targets to rise in stages from 6 to 48 or more.

When a user transacts on Arbitrum or Optimism and the data is batched and posted back to mainnet, every node no longer has to download the complete data. As a result, scaling no longer raises every node’s costs one-for-one.
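A back-of-the-envelope comparison makes the difference concrete. The sketch assumes 128 KiB blobs, a 2x erasure-code extension split into 128 columns, and a node that downloads 8 columns per block. These parameters are illustrative, and the calculation ignores proofs, commitments and gossip overhead.

```python
BLOB_SIZE_BYTES = 4096 * 32   # 128 KiB per blob (EIP-4844)
EXTENSION_FACTOR = 2          # rate-1/2 erasure code doubles the data
NUMBER_OF_COLUMNS = 128       # assumption: extended data split into 128 columns


def full_download_bytes(blobs: int) -> int:
    """Per-node download per block when every node fetches all blob data."""
    return blobs * BLOB_SIZE_BYTES


def peerdas_download_bytes(blobs: int, columns_per_node: int) -> int:
    """Per-node download per block when a node fetches only its columns.
    Ignores KZG proofs, commitments and gossip overhead."""
    extended = blobs * BLOB_SIZE_BYTES * EXTENSION_FACTOR
    return extended * columns_per_node // NUMBER_OF_COLUMNS


for blobs in (6, 48):
    print(f"{blobs} blobs: full {full_download_bytes(blobs) // 1024} KiB, "
          f"PeerDAS (8 columns) {peerdas_download_bytes(blobs, 8) // 1024} KiB")
```

Under these assumptions, a node following 8 of 128 columns downloads the same 768 KiB per block for 48 blobs that it used to download for 6 blobs under the full-download model. Its cost still grows with the blob count, but at 1/8 of the full-download rate.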

Objectively speaking, Blob + PeerDAS together form a complete DA (data availability) solution. On the roadmap, they also mark a key transition for Ethereum from Proto-Danksharding toward full Danksharding.

3. The new normal on the chain in the post-Fusaka era

As we all know, over the past two years third-party modular DA layers such as Celestia won significant market share because Ethereum mainnet was expensive. Their narrative rested on the premise that Ethereum’s native data storage is costly.

With Blob and now PeerDAS, Ethereum is both cheap and highly secure. The cost for L2s to publish data to L1 has been cut by more than half. Ethereum also has the largest validator set of any network, which gives it security far beyond that of third-party chains.

Objectively speaking, this is a crushing blow to third-party DA solutions such as Celestia. It signals that Ethereum is reclaiming sovereignty over data availability and sharply squeezing their room to survive.

You may ask: this all sounds very low-level, so what does it have to do with how I use wallets, make transfers, or interact with DeFi?

The connection is actually very direct. If PeerDAS succeeds, L2 data costs can stay low for the long term, and Rollups will not be forced to raise fees because DA costs rebound. On-chain applications can confidently design for high-frequency interactions, and wallets and DApps no longer have to keep trading off functionality against cost.

In other words, we can use cheap L2s today thanks to Blob. Whether we can still afford them in the future will depend on the quiet contribution of PeerDAS.

This is why, low-key as it is, PeerDAS has always been regarded as an unavoidable stop on Ethereum’s scaling roadmap. In the author’s eyes, it also embodies the best form of technology: “used every day without being noticed, yet impossible to do without”, so that you cannot even feel its existence.

Ultimately, PeerDAS shows that a blockchain can carry Web2-scale volumes of data through elegant mathematical design (such as erasure coding and data sampling) without sacrificing too much of its decentralization vision.

At this point, Ethereum’s data highway has been completely paved. What kind of cars will run on this road next is a question that the application layer should answer.

Let’s wait and see.