Author: Knower, crypto KOL; Translation: Bit Chain Vision Xiaozou

1. A brief introduction to MegaETH

This article mostly covers my personal thoughts on the MegaETH white paper, and I may expand on it later if I can. However this article turns out, I hope you learn something new from it.


MegaETH's website is cool: there is a mechanical rabbit on it and the color scheme is striking. Before this there was only a GitHub, so having a website makes everything easier.

I browsed the MegaETH GitHub and learned that they were developing some type of execution layer, but to be honest, maybe I had that wrong. The fact is that I don't understand MegaETH deeply enough, and now they have become a hot topic at EthCC.

I need to know everything, and to make sure the technology I'm looking at is the same thing the cool kids are seeing.

The MegaETH white paper says they are a real-time, EVM-compatible blockchain that aims to bring Web2-like performance to the crypto world. Their goal is to improve the Ethereum L2 experience by offering properties such as more than 100,000 transactions per second, block times under one millisecond, and one-cent transaction fees.

Their white paper highlights that the number of L2s keeps growing (I discussed this in a previous article, although the number has since risen above 50, with more L2s in "active development") and that they lack PMF (product-market fit) in the crypto world. Ethereum and Solana are the most popular blockchains; users gravitate to one of them and will only choose other L2s if there are tokens involved.

I don't think having too many L2s is a bad thing, just as I don't think it is necessarily a good thing, but I admit we need to take a step back and examine why our industry has created so many of them.

Occam's razor would say that venture capitalists simply enjoy the feeling of knowing they might really create the next L2 (or L1) king, and get satisfaction from investing in these projects, but I also think that many crypto developers actually want more L2s. Both sides may be right, but rather than settling which side is more right, it is best to look objectively at the current infrastructure ecosystem and make good use of what we have.


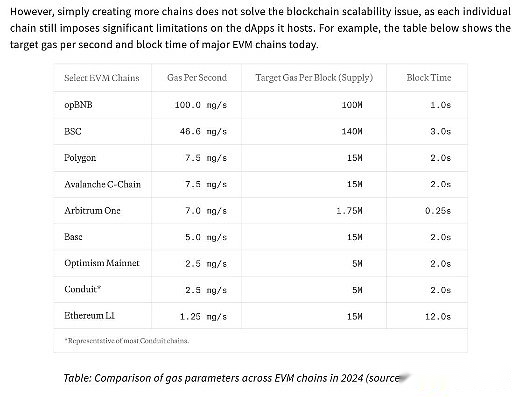

The performance of the L2s available to us today is high, but not high enough. MegaETH's white paper says that even opBNB's (relatively) high 100 MGas/s translates to only about 650 trades per second, while modern Web2 infrastructure can process far more transactions per second than that.
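To make the opBNB figure concrete, here is a minimal sketch of the arithmetic. It only uses the two numbers quoted above (100 MGas/s and 650 trades per second); the per-trade gas cost is inferred from them and is not a figure stated in this article.

```python
# Figures quoted in the paragraph above.
GAS_PER_SECOND = 100_000_000  # 100 MGas/s
TRADES_PER_SECOND = 650       # trades per second at that gas rate


def implied_gas_per_trade(gas_per_second: int, trades_per_second: int) -> float:
    """Average gas each trade must consume for the two figures to agree."""
    return gas_per_second / trades_per_second


def trades_per_second(gas_per_second: int, gas_per_trade: float) -> float:
    """Throughput in trades/s for a given gas budget and per-trade cost."""
    return gas_per_second / gas_per_trade


gas_per_trade = implied_gas_per_trade(GAS_PER_SECOND, TRADES_PER_SECOND)
print(f"implied gas per trade: {gas_per_trade:,.0f}")
```

In other words, the two figures only line up if a trade costs roughly 150,000 gas, which is why a seemingly large gas rate turns into a modest number of real trades.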

We know that although crypto's strengths come from decentralization and permissionless payments, it is still quite slow. If a game studio like Blizzard wanted to bring Overwatch on-chain, they couldn't do it: we would need higher tick rates to provide real-time PvP and the other things Web2 games offer.

One solution to the L2 dilemma is to delegate security and censorship resistance to Ethereum and EigenDA, respectively, turning MegaETH into the world's highest-performance L2 without any tradeoffs.

L1s usually require homogeneous nodes. These nodes all perform the same tasks and have no room for specialization. In this case, specialization refers to work such as sequencing or proving. L2s get around this problem and allow heterogeneous nodes to split up tasks, which improves scalability or takes some of the load off. This can be seen in the growing popularity of shared sequencers (such as Astria or Espresso) and specialized ZK proving services (such as Succinct or Axiom).

"Creating a real-time blockchain involves more than taking an off-the-shelf Ethereum execution client and adding sequencer hardware. For example, our performance experiments show that even with a powerful server equipped with 512GB RAM, Reth can only reach about 1000 TPS, equivalent to about 100 MGas/s, in a live sync setup on recent Ethereum blocks."

MegaETH extends this division of labor by abstracting transaction execution away from full nodes, using only one "active" sequencer to eliminate consensus overhead in typical transaction execution. "Most full nodes receive state diffs from this sequencer via a P2P network and apply the diffs directly to update their local state. Notably, they do not re-execute transactions; instead, they validate blocks indirectly using proofs provided by the provers."

Apart from "it's fast" or "it's cheap" comments, I haven't read much analysis of how MegaETH actually works, so I will try to walk through its architecture carefully and compare it with other L2s.

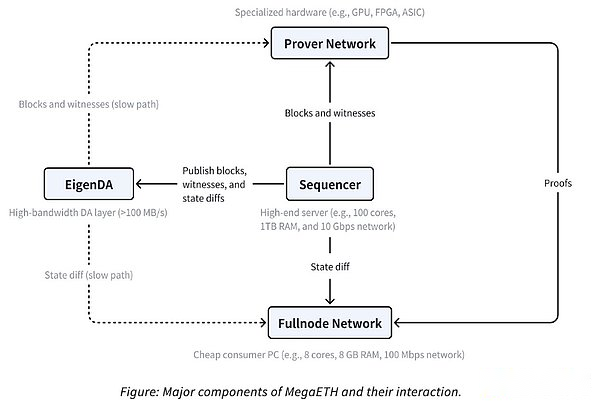

MegaETH uses EigenDA for data availability, which is fairly standard practice today. Rollup-as-a-Service platforms like Conduit let you choose Celestia, EigenDA, or even Ethereum (if you want) as your rollup's data availability provider. The differences between them are fairly technical and not entirely relevant here; choosing one over another seems to be based more on vibes than on anything else.

The sequencer orders and ultimately executes transactions, but it is also responsible for publishing blocks, witnesses, and state diffs. In the context of L2s, a witness is additional data that provers use to verify the sequencer's blocks.

A state diff is a change to the blockchain's state, which can be basically anything that happens on-chain: the job of a blockchain is to keep appending and verifying new information added to its state. State diffs let full nodes confirm transactions without having to re-execute them.
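As a rough illustration of what "applying a diff instead of re-executing" means, here is a minimal sketch. The `StateDiff` and `FullNode` types, the key layout, and the sample balances are all hypothetical and are not MegaETH's actual data structures; proof verification is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class StateDiff:
    """Hypothetical state diff: the storage writes produced by one block."""
    block_number: int
    writes: dict[tuple[str, str], int]  # (account, slot) -> new value


@dataclass
class FullNode:
    """Toy full node that tracks state by applying diffs, never by executing."""
    state: dict[tuple[str, str], int] = field(default_factory=dict)
    height: int = 0

    def apply(self, diff: StateDiff) -> None:
        # Diffs only make sense when applied in block order.
        if diff.block_number != self.height + 1:
            raise ValueError("diffs must be applied in block order")
        self.state.update(diff.writes)
        self.height = diff.block_number


node = FullNode()
node.apply(StateDiff(1, {("token", "balance:alice"): 100}))
node.apply(StateDiff(2, {("token", "balance:alice"): 70,
                         ("token", "balance:bob"): 30}))
print(node.height, node.state)
```

The node never sees the transfer transaction itself, only its effect on storage, which is why the work per block is proportional to the number of changed slots rather than to the cost of execution.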

Provers consist of specialized hardware that computes cryptographic proofs to verify the contents of blocks. They also let nodes avoid duplicate execution. There are zero-knowledge proofs and fraud proofs (or optimistic proofs?), but the distinction between them isn't important right now.

Tying all of this together is the job of the full node network, which acts as an aggregator between the provers, the sequencer, and EigenDA to (hopefully) make the MegaETH magic a reality.


MegaETH's design starts from a basic misunderstanding people have about the EVM. Although L2s often blame the EVM for their poor performance (throughput), revm has been found to reach 14,000 TPS. If the problem isn't the EVM, then what is it?

2. The current scalability problem

The three major EVM inefficiencies that cause performance bottlenecks are the lack of parallel execution, interpreter overhead, and high state access latency.

Thanks to its abundance of RAM, MegaETH can hold the state of the entire blockchain in memory, with Ethereum's state coming to 100GB. This setup eliminates SSD read latency and speeds up state access significantly.

I don't know much about SSD read latency, but presumably it is more intensive than other opcodes, and if you throw more RAM at the problem you can abstract it away. Does this work at scale? I'm not sure, but for this article I will take it as fact. I'm still skeptical that a chain can nail throughput, transaction costs, and latency all at once, but I'm trying to be an active learner.

Another thing I should mention is that I don't want to be overly critical. My intention is never to favor one protocol over another, or even to care about them to begin with: I do this only to understand things better and to help anyone reading this article reach the same understanding.


You may be familiar with the parallel EVM trend, but it reportedly has a problem. Although progress has been made in porting the Block-STM algorithm to the EVM, it is said that "the actual speedup achievable in production is inherently limited by the available parallelism in workloads." Even once it is deployed on EVM chains on mainnet, the technology is still bounded by the basic reality that most transactions may not need to be executed in parallel.

If transaction B depends on the result of transaction A, you cannot execute the two at the same time. If 50% of the transactions in a block depend on each other like this, parallel execution is not as big an improvement as claimed. This is a bit simplified (maybe even slightly incorrect), but I think the point matters.
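The dependency argument can be sketched in a few lines. This is a simplified illustration, not Block-STM: it assumes every transaction takes the same time, that dependencies are known up front, and that there are unlimited cores. The block contents and the `execution_rounds` helper are made up for the example.

```python
def execution_rounds(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group transactions into rounds; each round can run in parallel.

    deps maps a transaction to the transactions whose results it needs.
    """
    remaining = {tx: set(d) for tx, d in deps.items()}
    done: set[str] = set()
    rounds: list[list[str]] = []
    while remaining:
        # A transaction is ready once everything it depends on has finished.
        ready = sorted(tx for tx, d in remaining.items() if d <= done)
        if not ready:
            raise ValueError("cyclic dependency")
        rounds.append(ready)
        done.update(ready)
        for tx in ready:
            del remaining[tx]
    return rounds


# Eight transactions: A -> B -> C -> D form a chain, E..H are independent.
block = {"A": set(), "B": {"A"}, "C": {"B"}, "D": {"C"},
         "E": set(), "F": set(), "G": set(), "H": set()}
rounds = execution_rounds(block)
best_speedup = len(block) / len(rounds)
print(rounds, best_speedup)
```

With half of the block tied up in one chain, the block still needs four sequential rounds, so the best possible speedup is 2x no matter how many cores are available.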

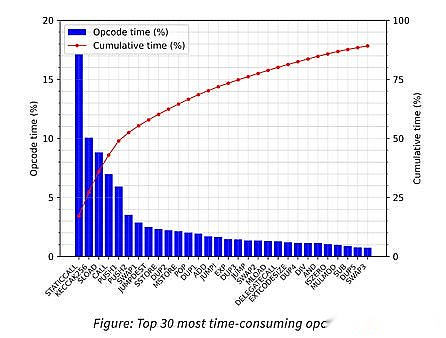

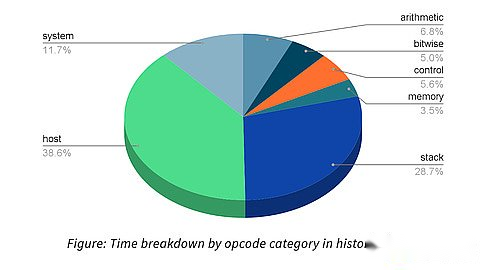

The gap between revm and native execution is stark: revm is still 1-2 orders of magnitude (OOMs) slower, which makes it not worth it as a standalone VM environment. It was also found that there are currently not enough compute-intensive contracts to warrant its use. "For example, we analyzed the time spent on each opcode during historical sync and found that about 50% of the time in revm is spent on 'host' and 'system' opcodes."
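One way to read the 50% figure is through Amdahl's law. The sketch below assumes, purely for illustration, that a faster execution engine only speeds up the compute portion and leaves the "host" and "system" opcodes untouched; that split is my assumption, not a claim from the white paper.

```python
def overall_speedup(accelerated_fraction: float, factor: float) -> float:
    """Amdahl's law: total speedup when only part of the runtime gets faster."""
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / factor)


# Assumption: the other ~50% is "host" and "system" opcodes that stay as slow as before.
COMPUTE_FRACTION = 0.5

for factor in (10, 100):
    print(factor, round(overall_speedup(COMPUTE_FRACTION, factor), 2))
```

Under that assumption, even a 100x faster compute path yields less than a 2x overall gain, which is one way to understand why raw execution speed alone does not settle the question.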


In terms of state sync, MegaETH has found more problems. State sync is simply described as the process of keeping full nodes up to date with sequencer activity, a task that can quickly eat up the bandwidth of a project like MegaETH. Here is an example: if the goal is to sync 100,000 ERC-20 transfers per second, this would consume about 152.6 Mbps of bandwidth. That 152.6 Mbps is said to exceed MegaETH's estimates (or capabilities), which basically makes it an impossible task.

This only considers simple token transfers and ignores the possibility of higher consumption when transactions are more complex. Given the diversity of real-world activity, that is a likely scenario. MegaETH writes that a Uniswap swap modifies 8 storage slots (while an ERC-20 transfer only modifies 3), bringing total bandwidth consumption to 476.1 Mbps, an even less feasible target.
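The two bandwidth figures can be reproduced with simple arithmetic. The per-transaction payload sizes below (200 and 624 bytes) are not quoted in this article; they are values I inferred because they reproduce 152.6 and 476.1 Mbps when a megabit is taken as 2^20 bits, so treat them as assumptions.

```python
BITS_PER_MEGABIT = 2 ** 20  # assumption: this convention reproduces the quoted figures


def bandwidth_mbps(tx_per_second: int, bytes_per_tx: int) -> float:
    """Bandwidth needed to ship one state diff per transaction, in Mbps."""
    return tx_per_second * bytes_per_tx * 8 / BITS_PER_MEGABIT


TPS = 100_000
ERC20_DIFF_BYTES = 200    # inferred, not quoted in the article
UNISWAP_DIFF_BYTES = 624  # inferred, not quoted in the article

print(round(bandwidth_mbps(TPS, ERC20_DIFF_BYTES), 1))
print(round(bandwidth_mbps(TPS, UNISWAP_DIFF_BYTES), 1))
```

The takeaway is that bandwidth scales linearly with both throughput and the amount of state each transaction touches, so heavier transactions push the requirement up quickly.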

Another problem in realizing a 100k TPS high-performance blockchain is updating the chain's state root, a task that manages sending storage proofs to light clients. Even with specialized nodes, full nodes still need to stay in sync with the network's sequencer nodes to maintain the state root. Taking the earlier example of syncing 100,000 ERC-20 transfers per second, this carries the cost of updating 300,000 keys per second.

Ethereum uses the MPT (Merkle Patricia Trie) data structure to compute the state after each block. Updating 300,000 keys per second would, for Ethereum, "translate into 6 million non-cached database reads", which is far more than any consumer SSD today can handle. MegaETH writes that this estimate doesn't even include write operations (or estimates for on-chain transactions such as Uniswap swaps), which makes the challenge more of a Sisyphean endless effort than the uphill climb most of us might prefer.
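The state root numbers follow from the figures already quoted. A small sketch of that arithmetic is below; it assumes the 6 million reads are per second, and the reads-per-key value is derived from the quoted totals rather than stated anywhere in this article.

```python
TRANSFERS_PER_SECOND = 100_000
SLOTS_PER_ERC20_TRANSFER = 3     # storage slots modified per transfer
DB_READS_PER_SECOND = 6_000_000  # quoted non-cached reads, assumed to be per second

# Each modified slot is one key in the trie that has to be updated.
keys_per_second = TRANSFERS_PER_SECOND * SLOTS_PER_ERC20_TRANSFER

# Derived: how many database reads each key update implies on average.
reads_per_key = DB_READS_PER_SECOND / keys_per_second

print(keys_per_second, reads_per_key)
```

So each key update implies on the order of 20 database reads, which is the kind of amplification you would expect when every update has to walk a deep trie from root to leaf.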

There is yet another problem: we hit the block gas limit. The speed of a blockchain is effectively capped by the block gas limit, a self-imposed barrier meant to improve the chain's security and reliability. "The rule of thumb for setting the block gas limit is that it must ensure that any block within this limit can be safely processed within the block time." The white paper describes the block gas limit as a "throttling mechanism" that ensures nodes can reliably keep up, assuming they meet the minimum hardware requirements.
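The rule of thumb can be written down as a simple check. This is a sketch under stated assumptions: the function names are mine, and the example numbers (a 30M gas limit, 12-second blocks, and a node that processes 10 MGas/s in the worst case) are illustrative rather than taken from the white paper.

```python
def gas_per_second(block_gas_limit: int, block_time_s: float) -> float:
    """Throughput ceiling that a gas limit and block time impose on the chain."""
    return block_gas_limit / block_time_s


def is_safe_limit(block_gas_limit: int, block_time_s: float,
                  node_gas_per_second: float) -> bool:
    """Rule of thumb: a worst-case full block must be processed within the block time."""
    return block_gas_limit / node_gas_per_second <= block_time_s


# Illustrative numbers only (assumptions, not figures from the white paper).
LIMIT = 30_000_000
BLOCK_TIME_S = 12
NODE_WORST_CASE_GAS_PER_S = 10_000_000

print(gas_per_second(LIMIT, BLOCK_TIME_S))
print(is_safe_limit(LIMIT, BLOCK_TIME_S, NODE_WORST_CASE_GAS_PER_S))
```

The limit is set against the slowest acceptable node and the worst-case block, so the chain's throughput ceiling ends up well below what typical hardware could handle on typical blocks.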

Others say the block gas limit is a conservative choice that guards against the worst case, which is another example of modern blockchain architecture valuing security over scalability. When you consider how much money moves across blockchains every day, and how that money could be lost for the sake of slightly better scalability, the idea that scalability matters more than security falls apart.

Blockchains may not be great at attracting high-quality consumer applications, but they are excellent at permissionless peer-to-peer payments. No one wants to mess that up.

Then there is the point that parallel EVM speedup depends on the workload, and performance is bottlenecked by "long dependency chains" that minimize how much blockchain functions can be sped up. The only way to solve this is to introduce multidimensional gas pricing (MegaETH points to Solana's local fee markets), which is still difficult to implement. I'm not sure whether there is a dedicated EIP for this, or how such an EIP would work on the EVM, but I suppose it is technically a solution.

"Finally, users do not interact with sequencer nodes directly, and most users will not run a full node at home. Therefore, the actual user experience of a blockchain depends heavily on its underlying infrastructure, such as RPC nodes and indexers. No matter how fast a real-time blockchain runs, it won't matter if the RPC nodes cannot efficiently handle a large number of read requests at peak times and quickly propagate transactions to the sequencer nodes, or if the indexers cannot update application views fast enough to keep up with the chain."

Maybe I'm repeating myself too much, but this is very important. We all rely on Infura, Alchemy, QuickNode, and the like, and the infrastructure they run most likely supports all of our transactions. The simplest explanation for this reliance comes from experience: if you have ever tried to claim an airdrop within 2-3 hours of an L2 airdrop going live, you understand how hard that load is for RPCs to manage.

3. Conclusion

Having said all that, my point is simply that a project like MegaETH has to clear many hurdles to reach the heights it is aiming for. One post says they have been able to achieve high performance through a heterogeneous blockchain architecture and a hyper-optimized EVM execution environment. "Today, MegaETH has a high-performance live devnet and is making steady progress toward becoming the fastest blockchain, limited only by hardware."

MegaETH's GitHub lists some major improvements, including but not limited to: an EVM bytecode → native code compiler, a dedicated execution engine for large-memory sequencer nodes, and an efficient concurrency control protocol for the parallel EVM. The EVM bytecode/native code compiler is available now under the name evmone. Although I'm not proficient in coding and can't speak to its core working mechanism, I did my best to figure it out.

evmone is a C++ implementation of the EVM. It implements the EVMC API, which turns it into an execution module for Ethereum clients. It mentions some other features I don't understand, such as its dual interpreter approach (Baseline and Advanced) and the intx and ethash libraries. In short, evmone offers the opportunity for faster transaction processing (through faster smart contract execution), greater development flexibility, and higher scalability (assuming different EVM implementations can process more transactions per block).

There are a few other repositories, but most of them are fairly standard and not specific to MegaETH (reth, geth). I think I have basically finished working through the white paper, so now I'll leave the questions to anyone reading this: what is MegaETH's next step? Is it really possible to achieve efficient scaling? How long will it take?

As a blockchain user, I'm excited to see whether this is feasible. I have spent far too much on mainnet transaction fees and it is time for a change, but that change still feels increasingly hard to achieve and is unlikely to happen soon.

Although this article mostly revolves around architectural improvements and scalability, shared liquidity between rollups and cross-chain tooling will still be needed to make the experience on rollup A consistent with rollup B. We aren't there yet, but maybe by 2037 everyone will sit back and reminisce about how obsessed we were with "fixing" scalability.