Author: Gerry Wang @ Arweave Oasis. This article was first published on the @ArweaveOasis Twitter account.

Where:

BI = the block index of the Arweave network;

$800 \cdot n_p$ = the maximum number of hashes unlocked at each checkpoint: each VDF checkpoint unlocks at most 800 hashes per partition, and $n_p$ is the number of 3.6 TB partitions the miner stores;

D = the difficulty of the network.

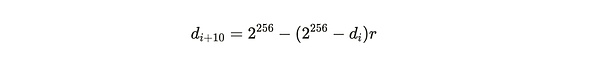

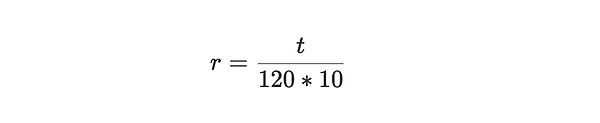

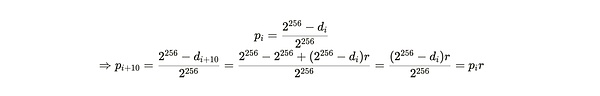

A valid proof is one whose hash is greater than the difficulty. The difficulty is adjusted over time so that, on average, one block is mined every 120 seconds. If the time between block $i$ and block $i+10$ is $T$, the old difficulty $D_i$ is adjusted to the new difficulty $D_{i+10}$ as follows:
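The retarget formula itself is not reproduced in the text. As a rough illustration only, the sketch below assumes that the success window above the difficulty, $2^{256} - D$, is scaled by the ratio of the observed time $T$ to the 10 × 120 s target, so blocks that arrive too fast shrink the window and raise $D$. The 256-bit hash size and the function name `retarget` are assumptions, not Arweave's code.

```python
# Hypothetical sketch, not Arweave's exact retarget rule.
# Assumption: a hash is valid when it is greater than D, so the success
# window is 2**256 - D, and that window is scaled by T / (10 * 120 s).

HASH_SPACE = 2 ** 256        # assumed 256-bit hash output
TARGET_BLOCK_TIME = 120      # seconds per block, from the text
RETARGET_BLOCKS = 10         # D is recalculated every 10 blocks


def retarget(d_old: int, t_seconds: int) -> int:
    """Return D_{i+10} from D_i and the time T (seconds) from block i to i+10."""
    target = TARGET_BLOCK_TIME * RETARGET_BLOCKS        # 1200 s
    window = HASH_SPACE - d_old                         # assumed success window
    new_window = window * t_seconds // target           # too fast -> smaller window
    new_window = max(1, min(new_window, HASH_SPACE))    # keep D in [0, 2**256 - 1]
    return HASH_SPACE - new_window
```

If ten blocks take 600 s instead of 1200 s, the window halves and the difficulty rises.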

The newly calculated difficulty determines the probability that each SPoA proof a miner generates successfully produces a block, as follows:
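Under the assumption that a SPoA hash behaves like a uniformly random 256-bit number and is valid only when it exceeds $D$, the per-hash success probability and the chance that one checkpoint (at most $800 \cdot n_p$ hashes) yields a block can be sketched as follows. The function names are hypothetical.

```python
import math

HASH_SPACE = 2 ** 256          # assumed 256-bit hash output
HASHES_PER_PARTITION = 800     # max SPoA hashes per partition per checkpoint


def hash_success_probability(d: int) -> float:
    """Chance that one uniformly random hash is strictly greater than D."""
    return (HASH_SPACE - 1 - d) / HASH_SPACE


def checkpoint_success_probability(d: int, n_p: int) -> float:
    """Chance that at least one of the 800 * n_p unlocked hashes beats D."""
    p = hash_success_probability(d)
    if p >= 1.0:
        return 1.0
    k = HASHES_PER_PARTITION * n_p
    # 1 - (1 - p)**k, computed stably for very small p
    return -math.expm1(k * math.log1p(-p))
```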

Similarly, the VDF difficulty is recalculated so that checkpoints keep occurring about once per second.
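A hypothetical sketch of the VDF side: if checkpoints are observed to take longer or shorter than one second, the number of sequential hash iterations per checkpoint is scaled proportionally. The name `retarget_vdf_iterations` and the simple proportional rule are assumptions; the real protocol may smooth over many checkpoints or bound each adjustment.

```python
TARGET_CHECKPOINT_SECONDS = 1.0   # one checkpoint per second, from the text


def retarget_vdf_iterations(old_iterations: int, observed_seconds: float) -> int:
    """Hypothetical rule: scale iterations so a checkpoint takes about 1 s again."""
    if observed_seconds <= 0:
        raise ValueError("observed_seconds must be positive")
    scaled = old_iterations * TARGET_CHECKPOINT_SECONDS / observed_seconds
    return max(1, round(scaled))
```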

Incentives for storing a complete replica

Under this incentive mechanism, whether miners work individually or cooperate in groups, maintaining a complete copy of the data is the best mining strategy.

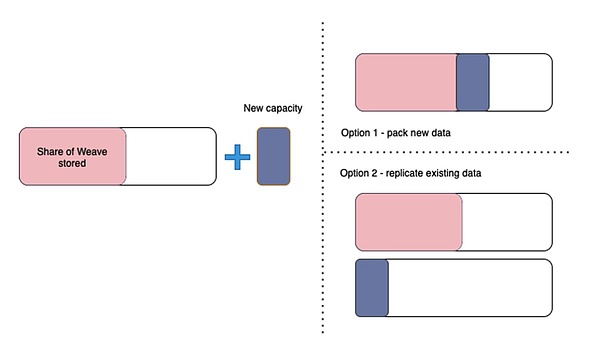

In the SPoRes game introduced earlier, storing two copies of the same half of the dataset releases as many SPoA hashes as storing one complete copy of the entire dataset, which leaves room for speculative behavior by miners. So when Arweave actually deployed this mechanism, it made some modifications: the SPoA challenges unlocked per second are split into two parts:

- One part comes from the partitions the miner stores: each stored partition releases a certain number of SPoA challenges;
- The other part comes from a partition chosen at random from the whole of Arweave, which releases its SPoA challenges. If the miner does not store a copy of that partition, it loses this part of the challenges.
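To see why the split removes the speculative strategy, the sketch below counts expected hashes per checkpoint for a miner holding one complete copy versus two copies of the same half. It assumes 400 challenges in each part (per the recall-range description in this article), that each copy is packed under its own mining address, and that second-part challenges only count when the randomly chosen partition is held in the same copy. The helper names are hypothetical.

```python
FIRST_PART_HASHES = 400    # challenges from the miner's own partition
SECOND_PART_HASHES = 400   # challenges from a randomly chosen partition


def naive_hashes(copies: list[int]) -> int:
    """Earlier game: every stored partition unlocks the same number of hashes."""
    return sum(copies) * (FIRST_PART_HASHES + SECOND_PART_HASHES)


def expected_hashes(copies: list[int], m: int) -> float:
    """Expected hashes per checkpoint under the two-part scheme.

    copies[i] is the number of distinct partitions in copy i, out of m total.
    Assumption: a second-part challenge only counts if the random partition
    is held in the same copy (same mining address).
    """
    total = 0.0
    for n in copies:
        hit_rate = n / m   # chance the random partition is in this copy
        total += n * (FIRST_PART_HASHES + SECOND_PART_HASHES * hit_rate)
    return total
```

With $m = 100$, both strategies score 80,000 under the naive rule, but under the two-part rule the complete copy keeps 80,000 while two copies of the same half drop to 60,000.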

You may be a little puzzled here: what is the relationship between SPoA and SPoRes? If the consensus mechanism is SPoRes, why are we talking about SPoA challenges? In fact, one is subordinate to the other: SPoRes is the name of the consensus mechanism as a whole, and it consists of a series of SPoA proof challenges that miners must complete.

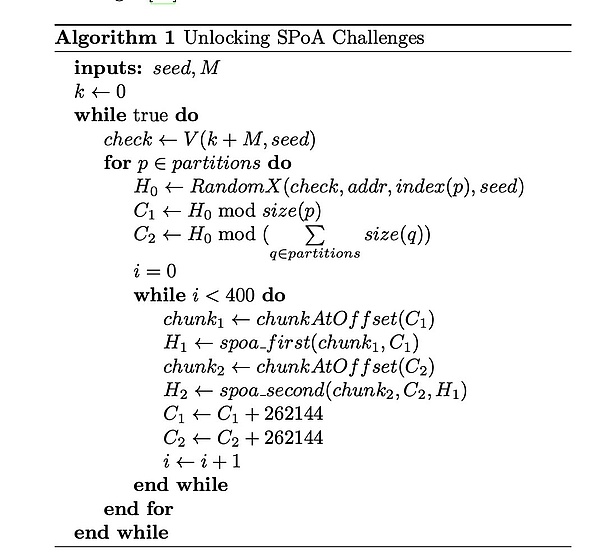

To understand this, let's look at how the VDF described in the previous section is used to unlock SPoA challenges.

- Roughly once per second, the VDF hash chain outputs a checkpoint (Check);
- The checkpoint is hashed together with the mining address (ADDR), the partition index (INDEX(P)), and the original VDF seed (SEED) to produce a hash H0;
- C1 is the offset of the first recall range. It is obtained as the remainder of H0 divided by the partition size;
- Starting from this offset, the 400 data chunks of 256 KB within a 100 MB range are the SPoA challenges unlocked by the first recall range;
- C2 is the starting offset of the second recall range. It is obtained as the remainder of H0 divided by the total size of all partitions, and it likewise unlocks 400 SPoA challenges in the second recall range;
- These challenges are constrained: each SPoA challenge in the second range is tied to the challenge at the corresponding position in the first range.
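The steps above can be sketched in Python. SHA-256 over a simple byte concatenation stands in for the protocol's real H0 computation, and `compute_h0` and `recall_ranges` are hypothetical names; the sketch also ignores the edge case of a range running past the end of a partition or of the weave.

```python
import hashlib

PARTITION_SIZE = 3_600_000_000_000   # 3.6 TB per partition, from the text
CHUNK_SIZE = 256 * 1024              # 256 KB data chunks
CHUNKS_PER_RANGE = 400               # 400 chunks = 100 MB per recall range


def compute_h0(check: bytes, addr: bytes, partition_index: int, seed: bytes) -> int:
    """Hash the VDF checkpoint with ADDR, INDEX(P) and SEED (stand-in encoding)."""
    data = check + addr + partition_index.to_bytes(8, "big") + seed
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def recall_ranges(h0: int, partition_index: int, num_partitions: int) -> list[tuple[int, int]]:
    """Return 400 (first-range offset, second-range offset) challenge pairs."""
    c1 = h0 % PARTITION_SIZE                          # offset inside partition P
    start1 = partition_index * PARTITION_SIZE + c1    # absolute offset of range 1
    c2 = h0 % (PARTITION_SIZE * num_partitions)       # range 2 may start anywhere
    return [
        # challenge k of range 2 is paired with challenge k of range 1
        (start1 + k * CHUNK_SIZE, c2 + k * CHUNK_SIZE)
        for k in range(CHUNKS_PER_RANGE)
    ]
```

For example, `recall_ranges(compute_h0(b"cp", b"addr", 7, b"seed"), 7, 20)` returns 400 offset pairs whose first members all lie in partition 7.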

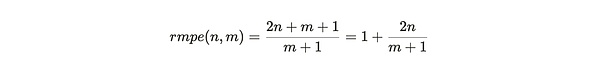

- When a miner holds a copy of the complete dataset, the reward per replica is highest: as $n$ approaches $m$ and $m$ tends to infinity, RMPE approaches 3. This means that near a complete replica, adding new data is three times as efficient as re-packing existing data.
- When a miner stores half of the weave, i.e. $n = m/2$, RMPE is 2. This means that adding new data pays twice as much as replicating existing data.

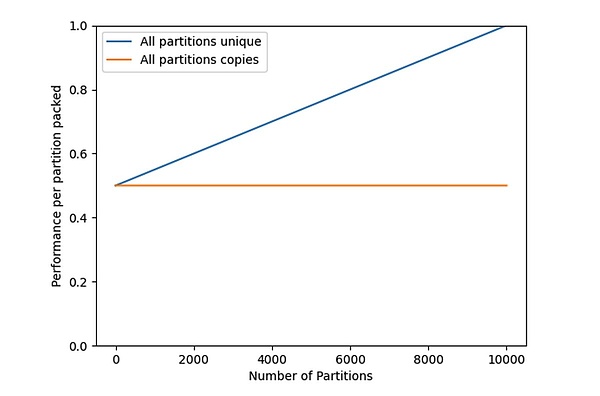

Performance of each packed partition

The concept of a “unique copy” here is very different from the concept of a “backup”. For details, see the earlier article “Arweave 2.6 may be more in line with Satoshi Nakamoto’s vision”.

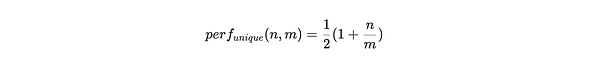

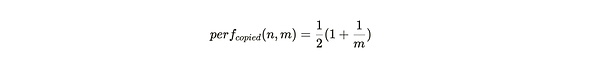

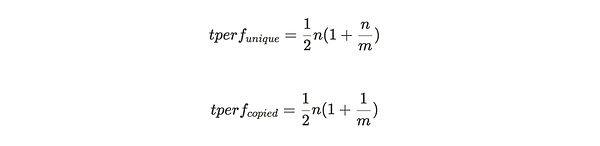

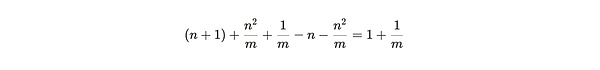

If a miner stores only unique copies of partitions, each packed partition generates all of its first-recall-range challenges, and then generates second-recall-range challenges in proportion to the number of partitions the miner stores. If the entire Arweave weave consists of $m$ partitions and the miner stores unique copies of $n$ of them, the performance of each packed partition is:

The blue line in Figure 1 shows $\mathrm{perf}_{unique}(n, m)$, the performance of a partition stored as a unique copy. The figure shows intuitively that when a miner stores only a few unique partitions, the mining efficiency of each partition is only 50%. When the miner stores and maintains the entire dataset, i.e. $n = m$, mining efficiency reaches its maximum value of 1.
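The formula image is not reproduced here, but the description above pins it down: each partition always gets its 400 first-range hashes, and gets its 400 second-range hashes only in the fraction $n/m$ of cases where the second range lands in a stored partition, so $\mathrm{perf}_{unique}(n, m) = (400 + 400\,n/m)/800 = (1 + n/m)/2$. This is a reconstruction rather than a quote of the original formula, and it matches the 50% and 1 endpoints; `perf_unique` is a hypothetical name.

```python
def perf_unique(n: int, m: int) -> float:
    """Per-partition efficiency (1.0 = all 800 hashes) for n unique of m partitions."""
    if not 0 < n <= m:
        raise ValueError("need 0 < n <= m")
    first_range = 400              # always available from the partition itself
    second_range = 400 * n / m     # only when range 2 lands in a stored partition
    return (first_range + second_range) / 800
```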

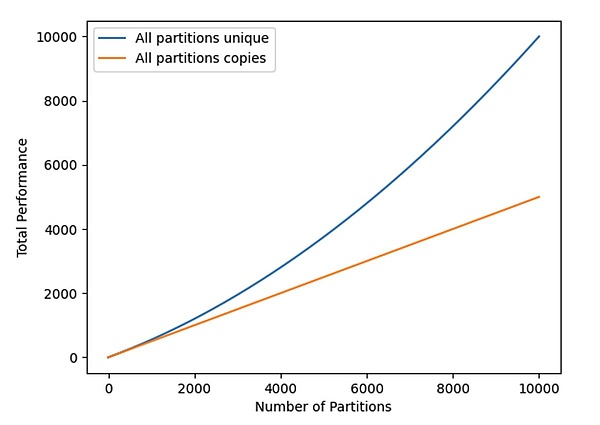

Total hash rate

The total hash rate (see Figure 2) is given by the following equation, i.e. the per-partition performance multiplied by $n$:
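Multiplying the per-partition figure by $n$ gives the total expected hashes per checkpoint, $n \cdot 400\,(1 + n/m)$. The sketch below uses the same reconstructed per-partition model (an assumption, since the original equation is an image) and a hypothetical function name to show the resulting superlinear growth.

```python
def total_hashes_per_checkpoint(n: int, m: int) -> float:
    """Expected SPoA hashes per VDF checkpoint for n unique partitions out of m."""
    per_partition = 400 + 400 * n / m    # first range + expected second range
    return n * per_partition
```

For example, with $m = 100$, storing 50 unique partitions yields 30,000 hashes per checkpoint, only 37.5% of the 80,000 a full copy yields, so doubling the stored data more than doubles the hash rate.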

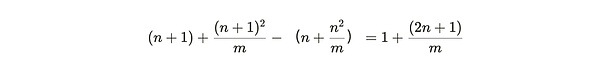

Marginal partition efficiency

The RMPE (relative marginal partition efficiency) value can be viewed as the penalty a miner pays for replicating an existing partition instead of adding new data. In this expression we can let $m$ tend to infinity and then consider the efficiency trade-off at different values of $n$:

For low values of $n$, RMPE tends toward 1 but always stays greater than 1. This means that storing a unique copy always earns more than replicating existing data.

As the network grows ($m$ tends to infinity), the incentive to build a complete replica becomes stronger. This encourages the formation of cooperative mining groups that jointly store at least one complete copy of the dataset.

This article mainly introduced the details of how the Arweave consensus protocol is constructed, though this is only the beginning of this core topic. Walking through the mechanisms and code lets us understand the details of the protocol quite intuitively. We hope it helps everyone understand them.
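The RMPE formula itself is not reproduced in the text, so the following is a hedged reconstruction. It assumes that a replica must be packed under a separate mining address, so its second-range challenges only count when they land on the replica itself, while a new unique partition joins the miner's existing set. Under that assumption RMPE $= (m + 2n + 1)/(m + 1)$, which tends to $1 + 2n/m$ as $m \to \infty$ and reproduces the values quoted in this article: just above 1 for small $n$, 2 at $n = m/2$, and 3 as $n \to m$. The helper names are hypothetical.

```python
def hashes(k: int, m: int) -> float:
    """Expected SPoA hashes per checkpoint with k unique partitions (one address)."""
    return k * (400 + 400 * k / m)


def rmpe(n: int, m: int) -> float:
    """Gain from one more unique partition divided by the gain from one replica."""
    unique_gain = hashes(n + 1, m) - hashes(n, m)
    # Assumption: the replica sits alone under its own mining address.
    replica_gain = hashes(1, m)
    return unique_gain / replica_gain
```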